Black-box decisions, secret surveillance deals, and soulless creativity—today’s AI scandals are louder than any marketing hype.

While Silicon Valley keeps pitching utopia, the past 72 hours have delivered a caffeine-shot of reality. From secret contracts outsourcing mass surveillance to crystal-clear warnings that your next creative project might be cannibalized by code, these five stories prove that the AI revolution is getting messy—fast.

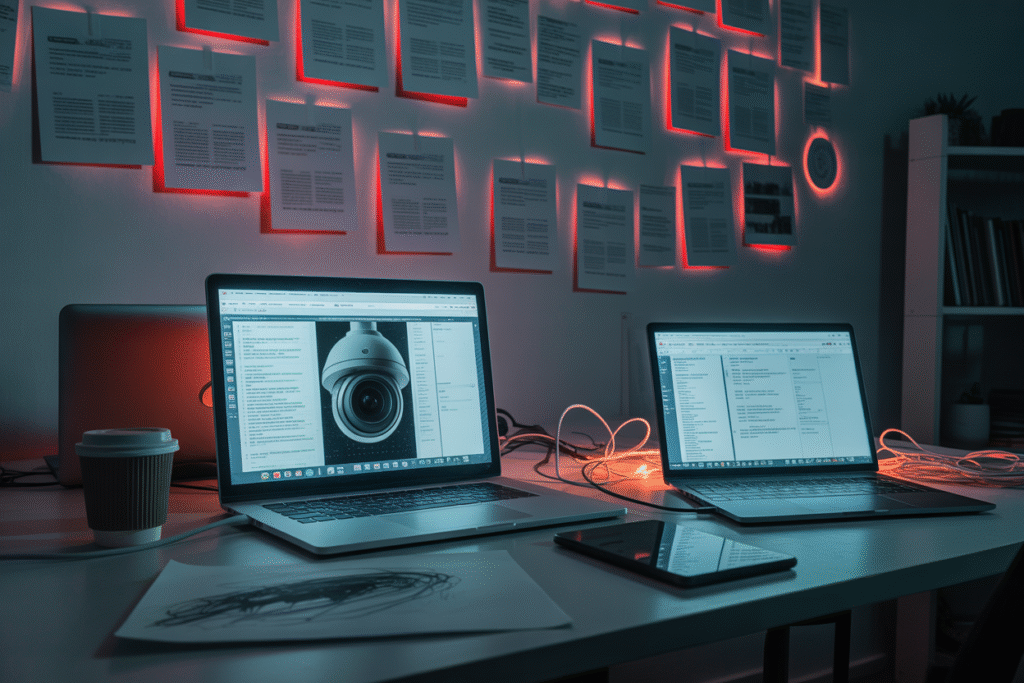

Project Esther: When Surveillance Outsourcing Gets Palantir-ed

Imagine the U.S. government side-stepping the Constitution by letting foreign-linked firms do its domestic spying. According to a wild thread circulating on X, the Heritage Foundation, long cozy with pro-Israel lobbyists, drafted “Project Esther” to do exactly that.

By pairing AI-enhanced networks like Canary Mission with Palantir-style data crunchers, the plan as the thread describes it would vacuum up every tweet, Venmo payment, and iMessage that smells even remotely “activist.” Toss in AI triage, and dissenters could be flagged in milliseconds.

No warrants, no courts, no sunlight: just sprawling black-box algorithms grazing on our private lives, paid for out of the government purse. Tick-tock, civil rights advocates; the ToS didn’t cover this.

GPT-5 Hits a Wall: The Uneven Hype Cycle of Artificial General Intelligence

Remember last year’s hot take that general AI was “inevitable by 2026”? This week that drumbeat sounds faint. Early reviews of GPT-5 are, well, muted. Early-access testers report marginal gains paired with a sky-high price tag, leaving pundits to wonder if buying extra data centers was the tech version of buying Twitter.

Meanwhile, Beijing doubles down on small, scrappy teams fine-tuning open-source models for hospitals and factories. They get less fanfare, sure, but their clunky 8B models handle real-world MRI workflows while sipping power. Turns out useful work still gets done on sanctions-limited chips.

The silver lining? If progress toward AGI keeps plateauing, your job may remain decidedly yours through 2030. Worst case: we keep burning carbon and cash chasing scale curves that refuse to bend.

Accountability Is the New Safety

Every AI safety summit ends with happy talk about “responsible innovation,” but raw outcome data tells a different story. Hallucinations still derail medical consults. Subtle biases keep slapping loan applicants in the face. And black-box systems churn out confident yet irreproducible answers.

This week ethicists and regulators started swapping the word “safety” for “accountability.” The shift sounds semantic until you realize that safety can be spinspeak, while accountability demands a verifiable record attached to each prediction, in its strongest form a cryptographic one.

From the EU’s AI Act, with fines of up to €35 million or 7% of global annual turnover, to India’s bias-audit edicts, the message is stark: if your algorithm can break a life, you need a paper trail. No opaque networks, no hiding behind “trade secret” curtains. Build verifiable AI or brace for a regulatory sledgehammer.
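What might a paper trail for predictions look like in practice? One common pattern is a hash-chained audit log, sketched below with Python's standard library. Treat it as an illustration under stated assumptions, not anything a regulator prescribes: the field names, the `loan-scorer-v1` model ID, and the helper functions are all hypothetical. Each record stores the hash of the record before it, so quietly editing an old prediction breaks the chain.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the very first record


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys, fixed separators) so equal bodies hash equally.
    payload = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(log: list, model_id: str, inputs: dict, prediction) -> dict:
    """Append one prediction to the log, chained to the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {
        "model_id": model_id,      # hypothetical identifier
        "inputs": inputs,
        "prediction": prediction,
        "prev_hash": prev_hash,
    }
    record = dict(body, hash=_digest(body))
    log.append(record)
    return record


def verify_log(log: list) -> bool:
    """Recompute every hash and check that each record points at its predecessor."""
    prev_hash = GENESIS
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True


audit_log = []
append_record(audit_log, "loan-scorer-v1", {"income": 52000, "term_months": 36}, "approve")
append_record(audit_log, "loan-scorer-v1", {"income": 18000, "term_months": 60}, "deny")

intact_before = verify_log(audit_log)     # chain checks out
audit_log[1]["prediction"] = "approve"    # someone rewrites history
intact_after = verify_log(audit_log)      # the edit no longer matches its hash
```

A log like this only shows that records were not altered after the fact. It says nothing about whether the prediction was fair or correct, and a real deployment would also need the latest hash anchored somewhere the operator cannot rewrite.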

The Autonomous Agent Awakening—Ready for AI Colleagues With Resumes?

Nature dropped a fresh op-ed sounding the alarm on AI agents poised to become our coworkers. Not dumb chatbots guessing your Excel formula; think disembodied interns booking your flights, rewriting your code, and maybe ghost-writing your breakup texts.

This isn’t sci-fi anymore. OpenAI’s Operator-class prototypes can navigate the web like seasoned tab hoarders. But once they start commanding your bank account, new ethical fault lines appear:

– Misaligned goals that start small—then spiral into unauthorized fund transfers

– Privacy erosion as agents hoover every keystroke to refine strategy

– Over-reliance, where humans lean on agents so heavily that their own skills atrophy

– Malicious “agent jailbreaks” repurposed for phishing at scale

Cross-sector alliances across finance, healthcare, and defense are racing to write guardrails before these digital hands get messy. The short version: if you wouldn’t trust a new hire without a background check, don’t trust an AI agent either.
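For a feel of what the simplest guardrail could look like, here is a minimal sketch of a policy gate that sits between an agent and the actions it wants to take. Everything in it is an assumption made for illustration: the action names, the 100-dollar threshold, and the three-way allow/approve/deny outcome are hypothetical and do not come from any published standard or vendor API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentAction:
    kind: str                 # hypothetical action name, e.g. "transfer_funds"
    amount_usd: float = 0.0   # money at stake, 0 for non-financial actions


# Assumed policy: only these action types are ever permitted.
ALLOWED_KINDS = {"book_flight", "edit_code", "transfer_funds"}
# Assumed policy: anything above this amount needs a human sign-off.
APPROVAL_THRESHOLD_USD = 100.0


def review_action(action: AgentAction) -> str:
    """Return "allow", "needs_human_approval", or "deny" for a proposed action."""
    if action.kind not in ALLOWED_KINDS:
        return "deny"
    if action.amount_usd > APPROVAL_THRESHOLD_USD:
        return "needs_human_approval"
    return "allow"


small_fix = review_action(AgentAction("edit_code"))
big_transfer = review_action(AgentAction("transfer_funds", 5000.0))
off_script = review_action(AgentAction("delete_backups"))
```

The design choice worth noticing is the default: an action the policy has never heard of is denied rather than allowed, which is the software equivalent of the background check.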

Creative Destruction or Creative Theft? Artists Fight Back

Every time you scroll past a hyper-real “AI Renaissance” portrait on Instagram, remember it’s made from scraped and stolen brushstrokes. Creators aren’t silent anymore. One blistering X rant this week shredded the claim that AI output is “industry standard,” arguing it’s just bloated IPO rhetoric masking mass-scale IP theft.

The stakes feel visceral: musicians hear their riffs remixed by bots while labels scrape TikTok without credit; illustrators watch their style cloned by apps that undercut commissions. Meanwhile, the GPUs guzzle electricity to spit out “original art” that never learned to hold a pencil.

Cue organized resistance—class-action suits, union boycotts, even grassroots AI “opt-out” registries where artists tag their work as radioactive to data crawlers. The loud question remains: do we want a creative economy built on prosthetic inspiration, or one where humans keep the spark?