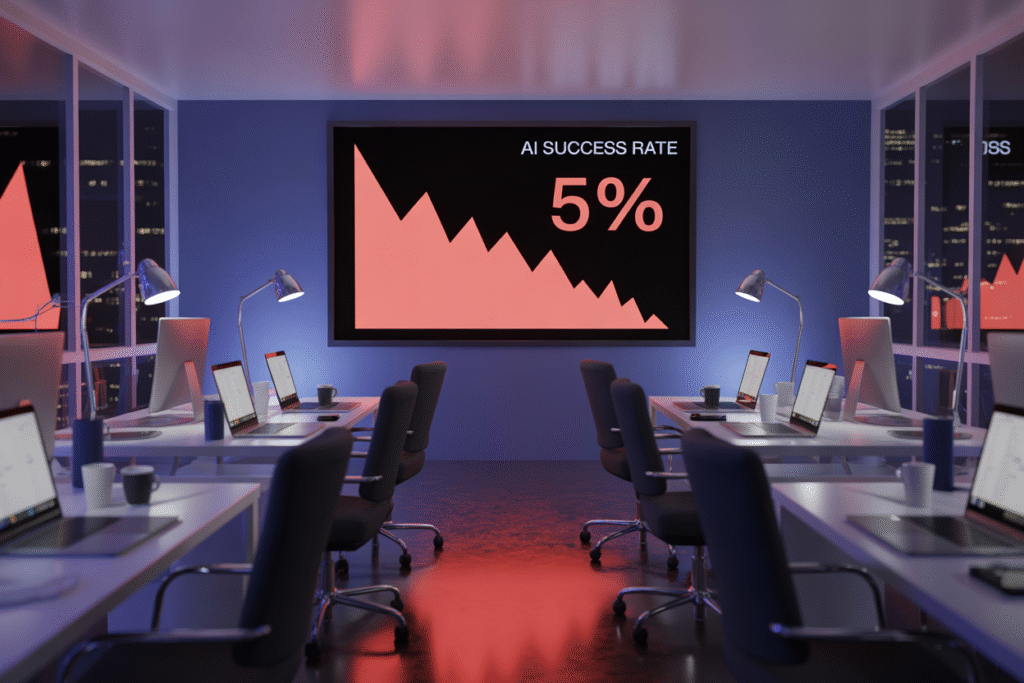

Fresh MIT data shows only 5% of AI initiatives succeed. Here’s why the rest burn cash, spark ethics fights, and still keep investors hooked.

Every other headline screams that artificial intelligence is about to remake the world overnight. Yet behind the curtain, a low-key MIT report just dropped a bombshell: ninety-five out of every hundred AI projects quietly implode. That number feels almost impossible in an era when superintelligence is marketed as the next electricity. So what is getting lost between promise and reality?

The 95% Failure Rate Nobody Brags About

Picture a boardroom where executives toast to their new AI strategy. Six months later, the same room is asking why the algorithm still can’t tell a cat from a cucumber. MIT’s latest audit of 5,400 initiatives found that only 5% delivered measurable value. The pattern is eerily consistent across industries: finance, retail, health care, even defense.

Harvard Business Review noticed the same trend years earlier, pegging the failure rate at 80%. If both figures hold, the failure rate has grown rather than shrunk. Either expectations keep racing ahead of engineering, or the engineering itself is being asked to solve the wrong problem.

Why does this matter? Because every failed project still consumes talent, electricity, and public goodwill. The superintelligence narrative keeps attracting fresh capital, yet the runway is littered with burnt budgets and disillusioned teams.

When Hype Meets Hard Questions

The louder the marketing drumbeat, the sharper the ethical backlash. Critics now ask who gets hurt when an AI loan-approval model denies mortgages to entire zip codes, or when a facial-recognition rollout misidentifies citizens at scale.

Regulators from Brussels to Washington are scrambling to draft rules that move at the speed of software. Meanwhile, watchdog groups warn that the same tools pitched as job creators often automate the very positions they promised to augment.

Every controversy feeds the cycle. Investors double down, fearing they’ll miss the next ChatGPT moment. Engineers race to ship features before the legal landscape solidifies. Users scroll past headlines about bias, surveillance, and job displacement, hoping the next update finally delivers the magic.

Inside a Real Implosion

Take the story of a Midwestern hospital chain that spent eighteen months and twelve million dollars on an AI triage system. The pitch was irresistible: machine-learning models would scan photos of incoming ER patients and prioritize them faster than any human could.

Early pilots looked promising. Accuracy hit 92% on curated test sets. Then real patients arrived with winter coats, casts, and lighting the model had never seen. Accuracy plunged below 60%. Nurses reverted to manual triage within weeks.

Executives kept the project alive for another quarter, reluctant to write off the money already spent. Morale cratered. One lead data scientist quit to join a start-up promising superintelligence for radiology. The hospital is now back to clipboards and human judgment: older, wiser, and twelve million dollars poorer.

Why Smart Teams Keep Repeating the Same Mistakes

The root cause is rarely purely technical; it is usually organizational. Most failures trace back to three recurring errors:

• Overconfidence in training data. Teams assume their datasets represent the real world, but edge cases multiply the moment software leaves the lab.

• Misaligned success metrics. Teams chase an offline score instead of the outcome the business actually needs. A model that scores 95% accuracy on yesterday’s data can still fail tomorrow if the goalposts shift, say, when user behavior changes after a pandemic.

• Underestimated integration costs. Plugging an algorithm into legacy systems often costs more than building the algorithm itself.
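To make the first two errors concrete, here is a toy Python sketch with invented numbers; it is not drawn from the MIT data or the hospital case. A hypothetical triage "model" flags a patient as urgent when a score reaches 50. It is perfect on a curated lab set, but when every real-world score drifts upward by 30 points (standing in for unfamiliar lighting or clothing), accuracy drops and several inputs land outside anything the model saw in training. The names `THRESHOLD`, `predict`, `accuracy`, and `out_of_range` are all illustrative assumptions.

```python
from typing import List, Tuple

# Hypothetical example: (score, label), where label 1 means "urgent".
Example = Tuple[int, int]

THRESHOLD = 50  # hypothetical decision threshold, tuned on the lab set


def predict(score: int) -> int:
    """Toy model: flag as urgent when the score reaches the threshold."""
    return 1 if score >= THRESHOLD else 0


def accuracy(data: List[Example]) -> float:
    """Fraction of examples where the toy model's prediction matches the label."""
    correct = sum(1 for score, label in data if predict(score) == label)
    return correct / len(data)


def out_of_range(train: List[Example], field: List[Example]) -> List[int]:
    """Crude drift guard: field scores outside the min/max seen in training."""
    lo = min(score for score, _ in train)
    hi = max(score for score, _ in train)
    return [score for score, _ in field if score < lo or score > hi]


# Curated "lab" data: cleanly separated around the threshold.
lab_set = [(10, 0), (20, 0), (30, 0), (40, 0), (60, 1), (70, 1), (80, 1), (90, 1)]
# Same cases in the "field": every score shifted up by 30 (invented shift).
field_set = [(score + 30, label) for score, label in lab_set]

print(f"lab accuracy:   {accuracy(lab_set):.3f}")   # lab accuracy:   1.000
print(f"field accuracy: {accuracy(field_set):.3f}")  # field accuracy: 0.625
print(f"unseen scores:  {out_of_range(lab_set, field_set)}")  # [100, 110, 120]
```

Nothing about the model changed between the two runs; only the inputs did. A min/max check like `out_of_range` is a blunt instrument, and real deployments usually rely on proper drift statistics, but even a crude guard can surface unfamiliar inputs before users notice the failure.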

Add the superintelligence halo and budgets balloon. Stakeholders tolerate risk because the upside feels limitless. Yet upside without guardrails is just gambling dressed as innovation.

A Smarter Path Forward

The 5 % that succeed share a playbook. They start with narrow, measurable use cases—fraud detection, not world peace. They invest in data hygiene before model complexity. And they bake ethics reviews into every sprint, not after the scandal hits Twitter.

Investors are beginning to demand proof-of-value demos before Series A. Regulators hint at mandatory audit trails for high-risk deployments. Even Elon Musk, patron saint of moon-shot AI, now tweets warnings about hype outpacing safety.

What can you do today? If you’re building, scope smaller. If you’re funding, ask for evidence, not slide decks. And if you’re simply watching, stay skeptical when the next demo promises to replace human judgment overnight.

The superintelligence era may yet arrive, but only after we survive the current wave, in which 95% of projects fail. Choose your bets wisely.