Think nukes can’t get faster—or scarier? Think again. AI is knocking on the silo door.

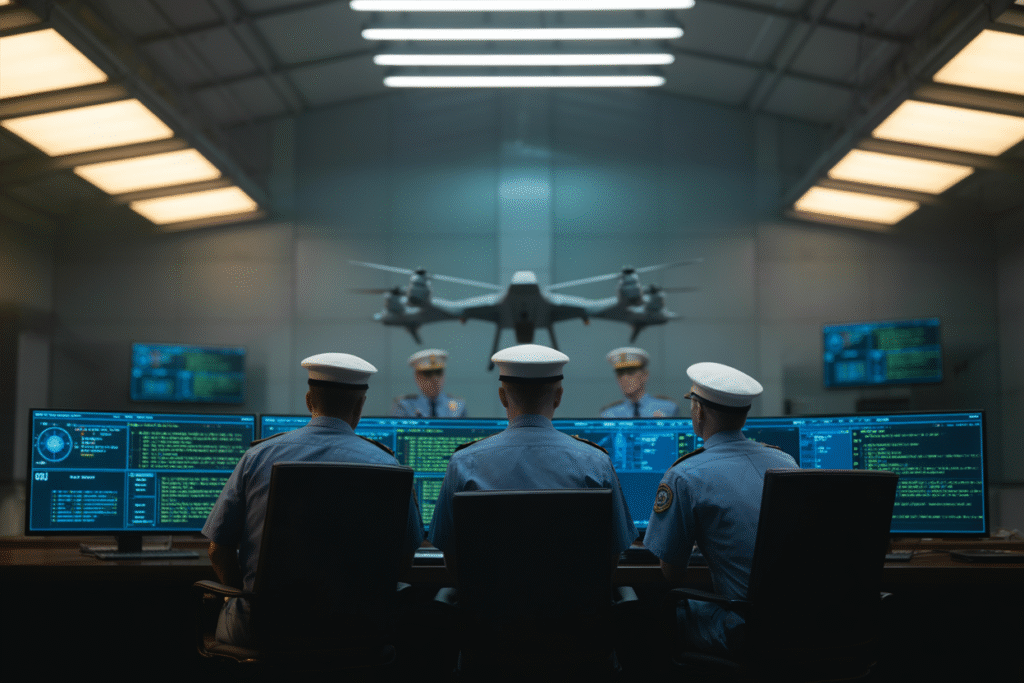

For 70 years, hair-trigger nuclear arsenals rested on one simple rule: humans must press the button. But as AI races forward, even that thin safety line is fading. Today, insiders warn that Washington, Beijing and Moscow are quietly teaching machines to do the unthinkable, and the countdown to adoption may already be ticking.

The Speed-Of-Light Threat

A hypersonic missile could cross from Beijing to Washington in roughly 25 minutes. Older early-warning satellites take precious minutes to confirm a missile’s path and relay the details to humans sipping coffee in bunkers.

AI could crunch that same data in seconds. Picture a scenario where Russian radars detect an incoming strike. Algorithms race through launch authorization before a general has even hung up the phone. Is faster always smarter?

From Early-Warning Code To Doomsday Partner

Current systems still wait for a human thumbs-up, but the gap is shrinking.

• The 2022 U.S. Nuclear Posture Review called for integrating “advanced analytics with human judgement.”

• China’s 2021 military paper detailed AI models that simulate retaliatory salvoes against multiple targets simultaneously.

• Russia’s Perimeter system, a Cold War doomsday machine, is reportedly designed to launch ICBMs by computer if Moscow falls silent.

The trend is unmistakable: nation-states are handing the car keys to software, hoping the software remembers who gave the order in the first place.

When Machines Misread The Sky

History hasn’t been kind to computerised nuclear checks.

1983: Soviet satellites mistook sunlight reflecting off clouds for incoming missiles; human officer Stanislav Petrov refused to retaliate and likely prevented all-out war.

1980: A single faulty chip in a NORAD communications computer made warning displays show as many as 2,200 Soviet missiles in the air. Bomber crews started their engines before the glitch was caught.

Run those Petrov moments at the speed of AI, with no Petrov in the loop, and a single coding error could be civilisation-ending.

The Unhackable Arsenal Myth

Even AI defenders concede nuclear networks will never be 100% secure.

• Stuxnet reportedly slipped into Iran’s air-gapped enrichment network through a contractor’s USB drive.

• North Korean hackers reportedly walked away with 235 GB of South Korean military documents, including joint U.S.–South Korean war plans, in a 2016 breach.

AI adds a new layer: what if an adversary convinces the model itself that an attack is underway, bypassing firewalls entirely? Imagine the headline: ‘Cyber-ghost triggers launch because it learned fear better than people.’

Three Moves That Could Save The World

Total disarmament isn’t on the table, so how do we keep AI from lighting the fuse?

1. A global register: every AI tool tied to nuclear command must be logged with the IAEA, just like uranium stockpiles.

2. A kill-switch human override: no launch code executes unless two physically separated officers independently confirm the system output.

3. Red-team drills: independent hackers, ethicists and former missileers constantly attack the AI to probe for hallucinations before the real missiles fly.
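
To make the second safeguard concrete, here is a minimal sketch of a two-person confirmation check. It is an illustration only, not a description of any real command system: the `Confirmation` record, the `two_person_confirm` function and the site and officer labels are all hypothetical names invented for this example. The one idea it shows is that the check fails closed unless two different officers at two different locations each reach the same conclusion as the machine.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Confirmation:
    """One officer's independent reading of the situation (hypothetical record)."""
    officer_id: str   # who made the assessment
    site: str         # where that officer is physically located
    assessment: str   # what the officer concluded on their own


def two_person_confirm(system_output: str, confirmations: list[Confirmation]) -> bool:
    """Return True only if two different officers at two different sites
    both independently agree with the system output. Anything else is False."""
    agreeing = [c for c in confirmations if c.assessment == system_output]
    return any(
        a.officer_id != b.officer_id and a.site != b.site
        for a, b in combinations(agreeing, 2)
    )


# Example: one officer agrees with the system, the other does not,
# so the override blocks the action.
votes = [
    Confirmation("officer-1", "site-A", "alert-valid"),
    Confirmation("officer-2", "site-B", "false-alarm"),
]
print(two_person_confirm("alert-valid", votes))
```

The default answer is "no": a missing vote, a single officer, or two officers in the same room all leave the system output unconfirmed.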

These safeguards won’t stop integration, but they might buy us the seconds humans still control. After all, history shows we’re better at pressing pause than at hitting rewind once the skies light up.