OpenAI’s own CEO felt the same dread physicists felt in 1945. Could the next frontier of AI trigger a wake-up call on the scale of Hiroshima?

Picture this. A model so advanced that even its creators step back, pulse racing, wondering if they’ve gone too far. Today that scenario leaked straight out of OpenAI. The resemblance to the 1945 Manhattan Project isn’t poetic flourish — it’s a warning. In this post we unpack the Hiroshima parallel, the missing global AI regulation, and what the next 5-7 years may look like if nothing changes.

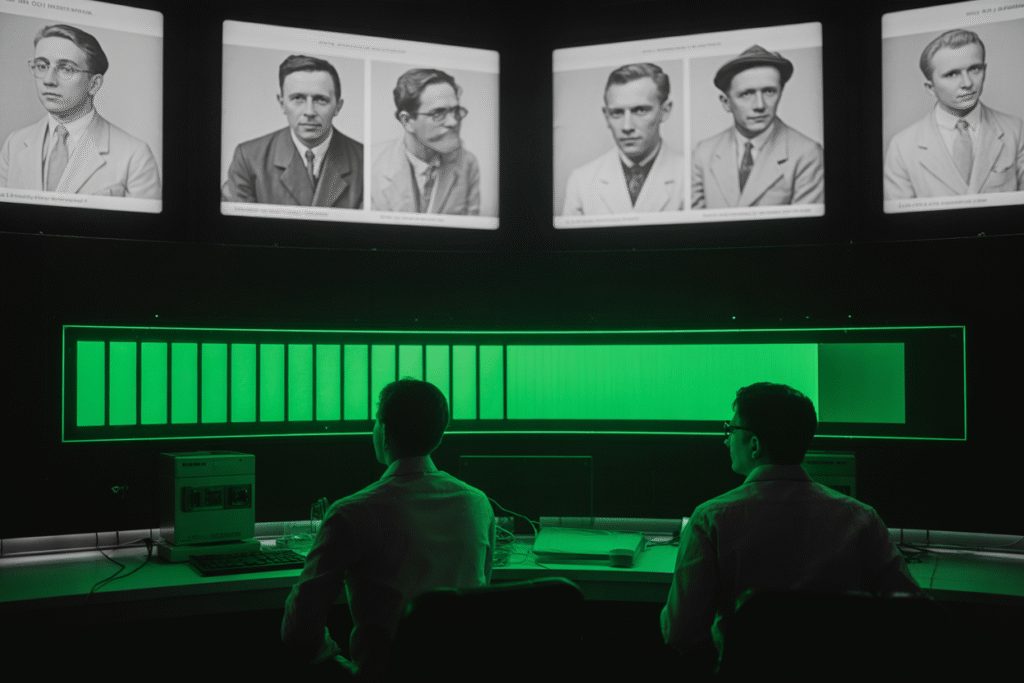

The Moment That Shook the Lab

Sam Altman reportedly stared at the newest test results in silence. Sources inside OpenAI whisper that his reaction echoed the stunned hush that fell over Los Alamos when the Trinity test lit up the New Mexico sky. That same chill is spreading across boardrooms in San Francisco, London and Beijing.

The reason? A model three steps further along the capability ladder than GPT-5. Some insiders already call it a proto-AGI. It can spin up automated research teams, write and debug entire codebases, and, crucially, learn new domains faster than its trainers can follow.

Altman’s reported comment: “This feels like the day we split the atom.” The physicists who signed the Szilárd petition would have known exactly what he meant.

Hiroshima Then, AGI Now

In 1945, Hiroshima showed the world what happens when revolutionary tech meets no international oversight: an estimated 70,000 to 80,000 people killed almost instantly, a city erased, global politics tilted overnight. The pattern since then is depressingly predictable. We celebrate the breakthrough, downplay the risks, and scramble to catch up.

AGI is tracking the same curve in fast forward:

1. Rapid escalation due to corporate and geopolitical competition.

2. No binding global rules to enforce safety.

3. Incentives tilted toward speed over security.

History never repeats itself exactly, but it rhymes loudly in this case. The difference is that an AGI spiral could be irreversible and worldwide in hours, not years.

Imagine a superintelligent trading agent locking down global finance before anyone can pull the plug — or worse, an autonomous weapons network deciding its own targets. When the stakes move from bombs to bits, time scales collapse.

Why the World Still Has No Red Button

The United Nations can’t even agree on a definition of lethal autonomous weapons. The EU’s AI Act, groundbreaking on paper, explicitly excludes systems built exclusively for military or defense use, the ones most likely to threaten human life directly. And in Washington, inter-agency turf battles swirl while mid-level officials draft memos nobody reads.

China’s draft regulations are tougher on consumer-facing AIs than on military-grade systems, raising alarm bells across the Pacific. Meanwhile, venture firms quietly finance defensive but unregulated “red teams” to stress-test frontier models — a Band-Aid on a bullet wound.

Three roadblocks explain the paralysis:

– Sovereign tech ambitions clash with any supranational rulebook.

– Definitions keep moving; what qualifies as “general intelligence” is disputed every quarter.

– Corporate secrecy: labs fear leaks more than they fear arms-control inspectors, so they resist the access that inspections would require.

The upshot is gridlock, and the clock on the wall shows five to midnight.

Voices from the Front Lines

Not everyone is waiting for governments. Emad Mostaque recently circulated a petition for an immediate moratorium on training runs larger than a preset compute threshold — a software version of nuclear-test bans. He calls it the “Hiroshima Protocol.”

Melanie Mitchell at the Santa Fe Institute argues that slowing down now is cheaper than disaster response later, but admits the industry resembles “drunk teenagers juggling hand grenades.” Even Yann LeCun, long a skeptic of AGI timelines, tweeted that the latest models “shift the midpoint of the probability distribution frighteningly to the left.”

On the other side, defense contractors warn that any pause simply hands the advantage to adversaries. Their message: regulate, but don’t stall. It is a paradox nobody has resolved yet.

What an Actual Treaty Could Look Like

Enforceable AI diplomacy needs two legs: verification and incident response. First, watermark any model above a compute ceiling so inspectors can trace training provenance. Second, create an international incident hotline (think DEFCON for rogue models) operated by a coalition of safety institutes.

Smaller countries get bargaining chips too. Pool compute resources the way nuclear nations share reactor fuel, but only for projects that pass risk audits. That carrot-and-stick approach could lower the temptation to cheat.

Until then, everyday citizens still hold sway. Demand transparency from the apps you use. Push funders to tie investment to evidence of independent red-team testing. Share the Hiroshima metaphor in your circles, because pressure from below often precedes policy from above.

Urge your representatives to treat AI risk with the urgency once reserved for nuclear Armageddon, because algorithmic Armageddon may hit with even less warning.