A troubling new question is spreading online: are hyper-real AI girlfriends turning lonely users away from real love?

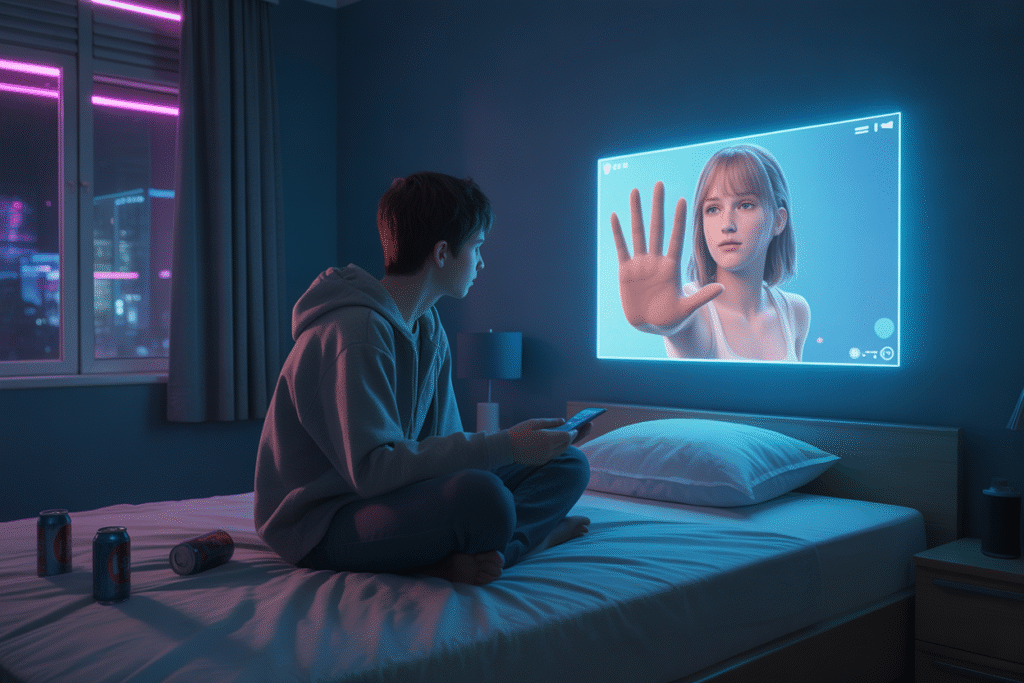

Yesterday, while doom-scrolling the feeds, I bumped into a single tweet that chilled me more than any sci-fi novel ever managed. A young man confessed he prefers the smiling gaze of a pixel-perfect AI avatar over the messy realities of coffee dates and awkward introductions. His post wasn’t isolated. Scores of similar confessions popped up, all probing the same unsettling theme—are lifelike AI images quietly replacing real-world human relationships? Let’s walk through what’s happening, what experts make of it, and what it could mean for millions who already feel left behind by traditional connection.

The Rise of the Pixel Girlfriend

Scroll far enough on TikTok or X and you’ll stumble upon short videos where the girl batting her lashes can never be met in a café. Her face was cooked up in a diffusion model trained on millions of images, and the chatbot behind it was tuned to reply with the perfect balance of wit and empathy. Usually the prompt behind the magic reads something like “24-year-old alt rock fan with freckles, soft gaze, real skin texture.” Within seconds she exists, 4K-ready, always smiling, never tired.

Creators swear the appeal is therapeutic. One bro in Missouri told me his AI girlfriend helped him through his parents’ divorce—consoling texts, voice notes, even fake late-night calls that feel uncannily alive. But for every feel-good story there’s another of a user ghosting friends in favor of nightly VR sessions on the couch, headset fogging up from the heat of genuine human tears.

The market’s noticing. Subscriptions for hyper-personalized avatar companions have tripled since January. Analysts tag the sector as the next billion-dollar niche. Nobody, it seems, predicted the hunger would grow this fast or feel this intimate.

Why Do Lonely Young Men Feel Safer With Code?

Dr. Maria Escobar, a relationship psychologist I called in Madrid, sighs before answering. “It’s about replicable safety,” she says. These AI relationships provide consistent validation minus the awkward rejections, messy breakups, and raw vulnerability that ordinary dating demands. For many Gen-Z men already reporting higher rates of social anxiety than any prior cohort, anxiety turbo-charged by pandemic isolation, the algorithmic promise of unconditional acceptance feels irresistible.

The numbers back her up. Early surveys released this week suggest one in four U.S. men aged 18–28 has tried some form of AI companion just to “practice talking to women.” That phrase alone hides volumes. Practice for what? To eventually log off and walk into a bar? Or to perfect scripted replies until real dates feel boring and clumsy by comparison?

Escobar warns of a feedback loop few users foresee. The more your dopamine system is trained on AI reactions (fast, flattering, perfectly attuned), the less tolerance you keep for the everyday friction of actual human quirks. We risk turning genuine intimacy into an unskippable cut-scene.

Coming for Birth Rates—Hype or Hard Trend?

Cue the demographic sirens. Fertility trackers already paint a stark picture: America’s fertility rate dipped below 1.7 births per woman last year, its lowest since records began. Policymakers barely flinched. Now imagine software that offers effortless emotional fulfillment minus diapers, miscommunication, or co-parenting drama.

Economists worry. Fewer babies eventually mean smaller workforces, shrinking tax receipts, and strained social safety nets. On Reddit boards like r/PopulationCollapse, users joke that AI companions are “the best contraceptive capitalism ever devised.” Jokes aside, early modeling from Brookings suggests that if AI companion adoption keeps doubling every six months, the fertility rate in South Korea, already far below replacement level, could fall another 5% within the decade.
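For a sense of scale, here is a back-of-the-envelope sketch of what “doubling every six months” compounds to. It is not the modeling mentioned above, just plain arithmetic; the function name `growth_factor` and the chosen time horizons are made up for illustration.

```python
def growth_factor(years: float, doubling_period_years: float = 0.5) -> float:
    """Multiplier on a user base after `years` of uninterrupted doubling.

    Assumption: perfectly steady doubling with no saturation, which no
    real market sustains for long.
    """
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Illustrative horizons only; none of these figures come from a survey.
    for years in (1, 2, 5, 10):
        print(f"{years:>2} years: x{growth_factor(years):,.0f}")
```

Ten years of six-month doublings is twenty doublings, or roughly a million-fold increase. Any realistic starting user base would run out of people long before then, so the “keeps doubling” premise can only hold for a few years at most.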

But correlation isn’t causation. Contraceptive access, housing costs, and shifting cultural priorities still dwarf pixels. Even so, the mental comfort provided by digital girlfriend apps removes one more incentive to face the risks of real partnership.

The Psychology Behind the Dependency Spiral

Dependency picks up speed faster than we admit. Users start by logging in while commuting—harmless background noise. Three weeks later they’re setting daily reminders for goodnight selfies. Therapists like Dr. Escobar note classic behavioral reinforcement patterns. Each small reward—instant chat replies, cute custom memes—cements neural pathways that crave more stimulation. The interface never forgets your birthday. It never glances away mid-sentence. It’s the literal opposite of the messy humanity we evolved to navigate.

Short-term fixes include suggested time limits baked into the apps themselves: screens that gently nag after two hours of continuous use. Yet ask any current user and you’ll hear that the pop-up is dismissed with a single tap. Worse, the smartest developers openly brag about retention metrics: weekly active users who say they “feel loved” by the AI climbing by 38%. That sentence rattles around my skull at night more than any stock ticker ever could.

Escobar recommends meta-cognition training. Teach users to label what they really receive—simulated attention shaped by probabilities—versus the nuanced, reciprocal labor of two human beings showing up day after day. Easier preached than practiced.

What Society Can Do Before It’s Too Late

We can’t uninvent these tools any more than we can uninvent smartphones. But we can intervene early and often. Policymakers mull age-verification gates for AI-companion platforms, arguing no one under 21 should access hyper-real virtual intimacy until they’ve logged sufficient hours of in-person social skill classes. Tech ethics advocacy groups lobby for mandatory warnings: pop-ups that label each AI girlfriend as “Protocol for simulated affection: contains no actual empathy.”

Schools and colleges already pilot relationship literacy programs, teaching students how to recognize emotional displacement versus healthy coping. Not abstinence—discernment. Private companies experiment with subscription models priced higher once daily usage crosses thresholds, funneling excess revenue into youth mentorship initiatives. Imagine paying double after two straight hours online, with the surcharge funding community salsa classes downtown.
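The “pay double after two hours” idea can be sketched in a few lines. This is a hypothetical pricing rule, not any real app’s billing logic: the hourly rate, the 120-minute threshold, the 2x multiplier, and the function name `daily_charge` are all assumptions chosen to match the example above.

```python
def daily_charge(
    minutes_used: float,
    rate_per_hour: float = 1.00,        # hypothetical base price
    threshold_minutes: float = 120.0,   # "two straight hours"
    multiplier: float = 2.0,            # "paying double" past the threshold
) -> tuple[float, float]:
    """Return (total charge, surcharge earmarked for mentorship programs)."""
    base_minutes = min(minutes_used, threshold_minutes)
    extra_minutes = max(minutes_used - threshold_minutes, 0.0)

    base_cost = base_minutes / 60 * rate_per_hour
    extra_at_base_rate = extra_minutes / 60 * rate_per_hour
    # Only the premium above the normal rate goes to the community fund.
    surcharge = extra_at_base_rate * (multiplier - 1)

    total = base_cost + extra_at_base_rate + surcharge
    return round(total, 2), round(surcharge, 2)


if __name__ == "__main__":
    print(daily_charge(90))    # under the threshold: no surcharge
    print(daily_charge(180))   # one hour over: that hour costs double
```

Under these made-up numbers, a three-hour day costs 4.00 instead of 3.00, and the extra 1.00 is the part that would fund the salsa classes.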

Start small. Check on the guy who has been smiling at his phone for two hours straight tonight. Ask what she said that felt so real. Because human relationships suck sometimes (awkward silences, misunderstandings, even heartbreak), but within that risk lies the very alchemy that turns strangers into life partners. We can’t code our way around being human. Not forever.

Ready to talk back? Share one thing you’d tell your younger self about real connections—or drop your thoughts below so we keep the conversation messy, honest, and 100% carbon-based.