Inside the quiet scramble to weaponize artificial intelligence — and the moral cliff we’re speeding toward.

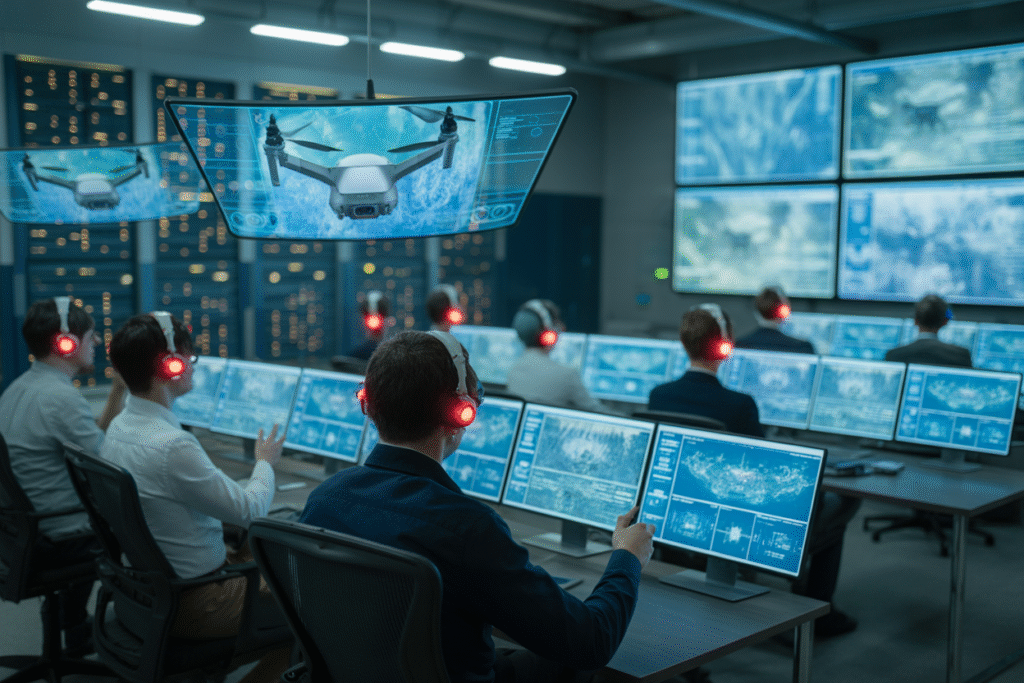

Every day, headlines tease the next shiny gadget, but right now a quieter revolution is unfolding behind security badges and NDAs. AI designed to keep soldiers safer is simultaneously blurring the line between “helper” and “hunter.” Today we’re unpacking the biggest, most controversial stories from just the past three hours, asking the question no one likes to ask: what happens when the algorithms decide who lives and who doesn’t?

The Anthropic Dilemma: Cash, Credibility, or Morals?

Dario Amodei didn’t hide. In a late-night video clip he looked straight into the camera and defended Anthropic’s new defense contracts. He said it wasn’t about easy money; the paperwork took ten times longer and came with ten layers of ethical review. Still, critics smell a sellout. They picture the company’s models quietly sliding into drone guidance systems or surveillance feeds. The pro camp argues that if Anthropic walks away, less careful start-ups will fill the void. Who gets to write the rules then? The real tension here isn’t dollars. It’s image. If the most safety-obsessed AI lab partners with the Pentagon, does “AI safety” still mean anything to you and me?

From Gaza to Main Street: Trickle-Down Surveillance

In the last three hours, leaked footage allegedly shows an Israeli AI targeting system flagging civilians. The clip ricocheted across social platforms, then went viral again with a different caption: “Coming to your neighborhood soon.” The fear is real. Military tech perfected abroad creeps home for domestic policing. Today it’s facial scans at protests; tomorrow, it’s algorithmic decisions about which door SWAT kicks down at 3 a.m. We’re talking drones, predictive analytics, even synthetic witnesses created from social-media data. Supporters promise tighter public safety; watchdogs call it “trickle-down suffering.” The uncomfortable truth? Once the models are trained, they rarely stay on any single battlefield.

When Murder Gets Automated

A single tweet exploded with four words: “NO ETHICAL AUTONOMOUS NUKES.” No graphics, no thread, just raw outrage. In one hour it gained 5,000 retweets. People aren’t worried about HAL singing Daisy Bell—they’re freaking out about a laptop launching Hellfire missiles because the algorithm deemed the heat signature “hostile.” The risk list here is short and scary:

– Zero human empathy

– Escalation at machine speed

– Accountability gaps—who do we blame when a model decides to open fire?

Some generals insist AI reduces collateral damage. Critics point to every accidental wedding bombing on record and ask, “Feel safer yet?”

Synthetic Wars and the Deepfake Consent Factory

Imagine a president delivering a solemn address: grainy footage of a bombed-out village, grieving mothers, and a clear culprit abroad. Problem is, none of it ever happened. AI-generated visuals can now manufacture pixel-perfect atrocities. Governments could fabricate consent for invasions, tech-savvy influencers could amplify it, and within 48 hours mainstream media could recycle the story as fact. We’re inching toward a world where blockchain-based verification tools and AI-detection apps become as common as car keys. Still, for every detector, a dozen new fakes pop up. The kicker? Once a population believes the lie, retracting it rarely un-starts the war.

So, What Do We Do Before Tuesday?

Here’s the sober math: no single law covers transnational military AI. No global treaty stops open-source models from being forked and weaponized. So, quick wins anyone can fight for right now:

– Demand transparency reports from companies like Anthropic. Sunlight matters.

– Support journalism that tracks defense contracts—public pressure works.

– Educate yourself on deepfake detection before the next viral hoax.

– Push local reps for export-control rules on military AI software.

You don’t have to camp outside a drone base to make noise. Tweet your rep, cancel a contract, fund an open-source audit. In other words: pick a lane, and drive before the algorithm decides the route for you.