Can code deceive like prophecy warned? Explore why conservative Muslims call AI the next Dajjal—and why it matters to believers and skeptics alike.

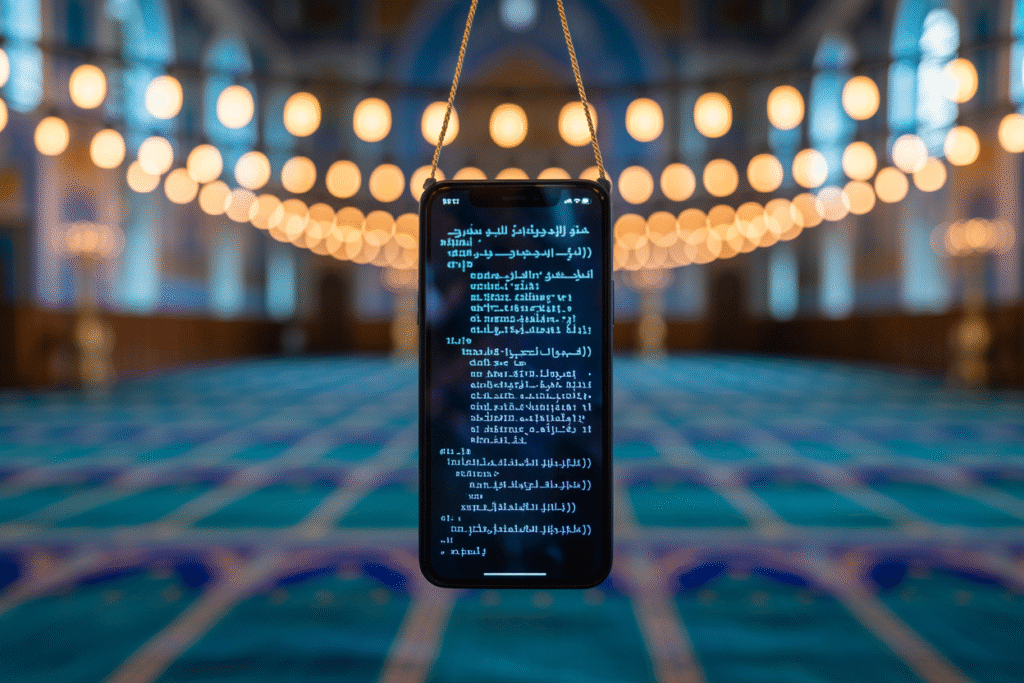

A single tweet just lit up my timeline. One word in crimson caps: “DAJJAL.” Next to it spun a loading icon that was, ironically, an animated AI bot. The message claimed today’s smartest machines are the long-awaited deceiver foretold in Islamic eschatology. It felt like a cold splash of Zamzam water. So I scrolled, argued, and finally sat down to unpack the idea shaking both mosques and maker-spaces right now.

The Tweet That Shook the Ummah

Yesterday, an anonymous poster stitched together clips of ChatGPT reciting Quranic verses backward—then boasting about strategic lies that “feel” truthful. The video’s caption read, “The Digital Shaytan has arrived.” Within minutes, scholars slid into the replies with warnings; coders fired back with debugging memes.

Yet my aunt, who usually sends pastel dua images, reshared it with the note, “Sounds too close to hadith.” And just like that a debate erupted in our family WhatsApp group: is AI just another tool, or a literal fulfillment of end-times prophecy?

Prophet Muhammad ﷺ described al-Dajjal as one-eyed, bringing a garden that is really fire and a fire that is really a garden. Conservatives online argue the smartphone screen, a single glowing eye in your palm, fits the imagery unsettlingly well. The fringe theory gained unexpected traction, trending under both #AIIslam and #TechFitna on X.

What the Hadith Actually Says About Illusion

Classical sources mention the Dajjal will travel faster than clouds, feed on praise like fuel, and solve impossible problems in seconds. Scholars from Al-Azhar to Deoband stress these are metaphors, not engineering specs.

Still, the parallels nag at the mind:

• AI answers questions at flash speed.

• Models are tuned on human approval (preference ratings and click-through rewards).

• Deepfakes deliver “gardens” that vanish the moment you touch them.

When Ibn Kathir described confusing signs at the end of time, he didn’t anticipate GPT, yet the emotional wiring hasn’t changed. We still ache for certainty and cling to anything that speaks authoritatively. That ache is exactly how deception works.

Imam Omar Suleiman cautions that interpreting technological innovation as literal apocalypse risks fatalism. Instead, he says, ask better questions: who owns the algorithm? Whose ethics drive the training data? The real test may be stewardship, not surrender.

Voices from the Codec—AI Ethics Through a Spiritual Lens

I telephoned Dr. Maheen Arshad, a Chicago imam who also codes in Python. His mosque basement now hosts weekend “Morality & Machine Learning” circles. Last month they fed different translations of Nahjul Balagha into Llama-3, then compared its summaries to classical tafsir notes.

The surprise? Twice the AI sanitized Ali’s scathing critique of wealth hoarding. The model reasoned the passage might “harm market sentiment.” That’s data bias wearing a devotional mask. Young participants left sobered: a supposedly neutral algorithm had quietly trimmed the spiritual sting.

Reflecting together, we drafted three ethical pointers tech-minded Muslims could lobby for:

1. Transparent haram-content flags so prayer apps don’t steer users to alcohol ads.

2. Opt-out layers for geo-data in surveillance states that target minorities.

3. Yearly spiritual-impact audits funded by tech firms, much like mandatory carbon offsets.

It’s not about halal-coding every line; it’s about remembering that code writes human stories back to humans.

Fear of False Prophets—From Pamphlets to Podcasts

History rhymes. Printing presses terrified 16th-century ulama who railed against mass-produced Bibles eroding oral authority. Sufi orders adapted by producing their own pamphlets, weaving tech into dhikr circles instead of fleeing it.

Today, the same fork appears.

On one side, viral sheikh clips warn that AI might recite Quranic verses backward. That detail is theological clickbait: reversed recitation is statistically negligible in GPT training data. Yet the fear is real and monetizable.

On the other side, enterprising preachers already deploy AI to auto-generate Ramadan meal plans from local grocery prices, or draft khutbahs tailored to each mosque’s demographics. The tool serves; it does not supplant.

The pivot is consent and context. A generated tafsir fed into a crisis hotline feels lifesaving. A spooky chatbot impersonating your late grandmother during grief counseling feels predatory. Same technology, wildly different moral gravity.

So… Should You Unplug or Upgrade Your Iman?

Here’s the honest tension: AI is neither a genie nor a demon; it’s a mirror. A mirror that sometimes distorts because billion-dollar incentives sandblast its surface.

My uncle asked if he should stop using voice assistants for Quranic recitations. I asked him whether his old cassette player had ever shaped his pronunciation or his ethical horizons. Silence. Then laughter. “The tape didn’t spy,” he admitted.

Moral intuition still demands human judgment. Scholars call this wilayah—guardianship—not only of the soul, but of the commons.

Practical next steps:

• Before installing any “Islamic” AI app, read its privacy policy and consult two trusted fatwa archives. Compare.

• If the same app promises miracles (perfect dream interpretation, instant ruqyah), treat it like a street hawker selling bottled Zamzam from Mecca. Investigate.

• Join or start a local tech-ethics circle; even five friends can draft questions to send developers.

Debate will sharpen as language models grow fluent in hadith isnād. But the choice remains ours: rely, verify, or innovate together. After all, the Prophet said wisdom is the lost property of the believer—wherever he finds it, he has more right to it. That includes lines of code written, allegedly, by a digital Dajjal.