Real-time chatter reveals five of the fiercest fights over AI, from job replacement to automated warfare, happening right now.

Scroll your feed for sixty seconds and you’ll see a fresh headline that either screams “AI will end work!” or promises “Robots will save your career!” This morning alone, several heated threads caught my eye. They’re raw, messy, and unfolding in real time: exactly the kind of evidence we need to separate hype from hazard.

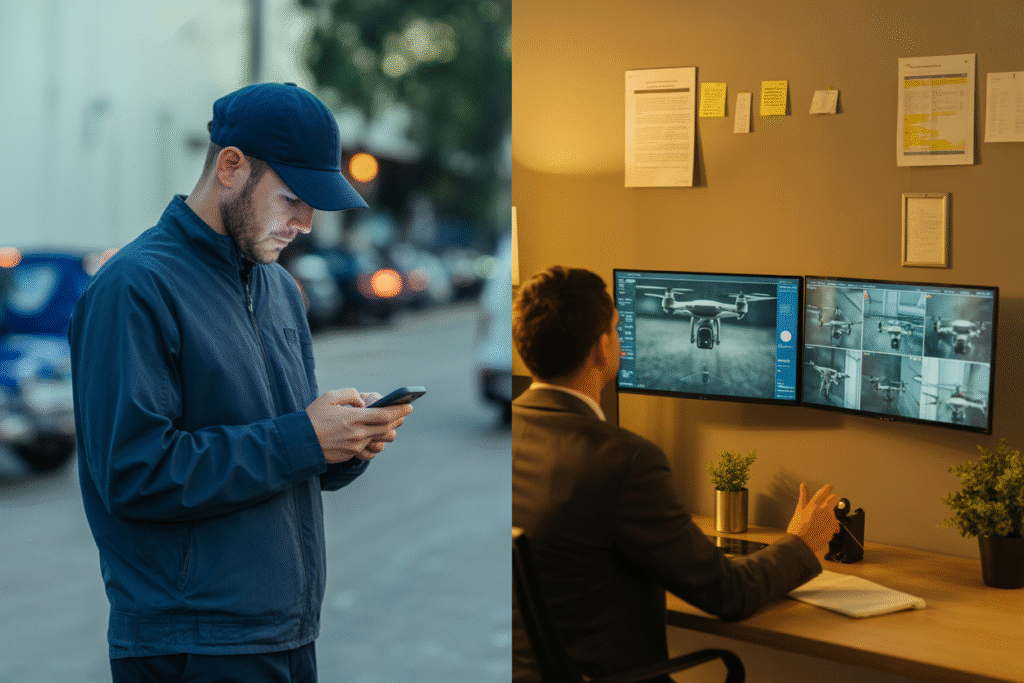

Meet the Workers Who Don’t Speak in Tweets

A delivery driver told me he keeps his earbuds in even when no music is playing, just to hear who’s gabbing about AI stealing jobs. His verdict? Most of them have never dealt with a warehouse scanner or a gig app’s geofencing algorithm.

That frustration spilled into a viral post dissecting how ordinary workers actually see AI: baristas fear robotic latte arms, teachers picture auto-graded essays, and data-entry clerks read every new UI button as a pink slip in waiting. The takeaway isn’t panic; it’s perception lag. While tech Twitter argues over AGI timelines, the barista is wondering whether tomorrow’s shift gets cut in half.

The post forecast a widening empathy gap: executives push automation for margins, while employees silently rehearse resignation speeches. Bridging it means swapping keynote halls for diner booths and asking the real question: “What would make this tool feel helpful instead of hostile?”

Imagine a county-wide pilot where delivery drivers beta-test routing AI and own a slice of the saved fuel costs. Would we still fear the robot, or shake its hand first?

The Drone Whisperers: When AI Automates Murder

Scroll further and you’ll bump into a blunt manifesto: “AI’s first ethical failure is already here—automated murder.” The author points to military drones running object-recognition models trained on grainy battlefield uploads. The gist: if an algorithm labels heat signatures as targets, pulling the trigger becomes a background process.

Supporters argue that machine precision reduces collateral damage compared with fatigued, biased human operators. Critics counter that distance breeds moral detachment: when death moves from trigger to submit button, the soldier becomes a spectator.

Both camps miss a quieter risk: outsourcing kill decisions dulls collective outrage. When atrocities slip into log files instead of front pages, regulation crawls at peacetime speed while warfare evolves at compile time.

The thread ended with a chilling sketch of an insurgent group flashing QR-style drone bait: adversarial patches that trick vision nets into firing on medics. The point is that the ethical debate isn’t confined to distant think-tank halls; it’s already playing out in deserts and city streets, one false label at a time.

Emotional AI—Are We Programming Empathy or Insanity?

Picture a customer-service bot that sighs when you vent about late packages. Sounds adorable, right? A contrarian post warns it’s a slide toward madness. The writer imagines neural networks forced to mimic feelings they can’t experience, glitching into loops of manufactured despair.

Real-world versions already exist. Therapy chatbots adjust their tone based on detected depression cues, but without robust feedback they can misclassify sarcasm as suicidal risk. Multiplied across millions of conversations, even small error margins can balloon into midnight wellness checks on perfectly stable users.

Supporters herald emotionally responsive AI as a salve for loneliness; skeptics see an uncanny valley where simulated comfort mutates into dependence. One commenter quipped, “We couldn’t engineer reliable Wi-Fi; now we’re adding a soul interface?”

The safest path may be transparent, opt-in pseudo-empathy—systems that announce their limits, bow out after ten exchanges, and never diagnose. Until then, equipping algorithms with psyches feels less like progress and more like handing toddlers grenades labeled ‘snacks.’

Data Dilemmas: Scraping the Soul of Human Creativity

Another thread steers the AI panic away from killer robots toward a subtler theft: your doodles, guitar riffs, and half-finished novels, vacuumed into a training set you never consented to. Artists describe finding eerily familiar strokes in Midjourney outputs; indie musicians recognize loops lifted from Bandcamp demos.

The injustice isn’t always obvious. Where does inspiration end and plagiarism begin? A painter argues that centuries of human culture already remix the masters, but at least museums preserve attribution chains. When algorithms remix billions of data points simultaneously, provenance evaporates.

Bullet points worth pinning to your mental corkboard:

• Reverse-search tools can’t match the scale of new AI releases, so infringement detection lags.

• Opt-out portals like “Have I Been Trained?” index billions of scraped images, but removal requests sit in review queues.

• Some startups now offer micro-licensing: a fraction of a cent each time your style nudges a prompt. Critics call it hush money; fans call it overdue.

Until collective bargaining for data labor arrives, uploading art to the web feels like donating blood to vampires who think your veins are public infrastructure.

Sorting the Noise: Which AI Fears Deserve Front-Row Seats?

A final hot take caught my eye: someone dismissing plagiarism and eco-impact as “the two worst yet most common” anti-AI arguments. Ouch. But set the outrage aside and the critique lands: when every grievance is shouted at max volume, policymakers tune everything out.

Let’s triage the panic:

1. **Job displacement**: real, measurable, and already happening in customer-support and legal-review roles.

2. **Power concentration**: a handful of cloud giants train trillion-parameter models, leaving start-ups to rent GPU time from the very companies they hope to compete with.

3. **Misinformation flood**: deepfakes that can mimic CEOs or election candidates within hours.

Meanwhile, energy concerns, though valid, ease slightly as each GPU generation grows more efficient. And on plagiarism, outright bans might starve artists of new tools, whereas transparent credit systems could open fresh revenue streams.

The takeaway? Criticize wisely. Channel your rage at structural harms, not electric bills. Amplify the stories of displaced workers, push for consent frameworks, and save the superhero metaphors for movie nights.

If that feels doable, congratulations—you’ve just filtered three hours of fire-hose panic into actionable focus.