One woman’s ChatGPT medical triumph re-ignites the AI politics firestorm over ethics, risk, and who really owns your diagnosis.

A cancer survivor just thanked ChatGPT for saving her life, and the internet is ripping itself apart. In less than three hours, tens of thousands of likes, reposts, and fiery replies flooded timelines, with users arguing over whether this is the future of medicine or an ethics catastrophe masquerading as progress. Healthcare, ethics, risk, politics: it’s all here, happening now.

The Story That Lit the Internet on Fire

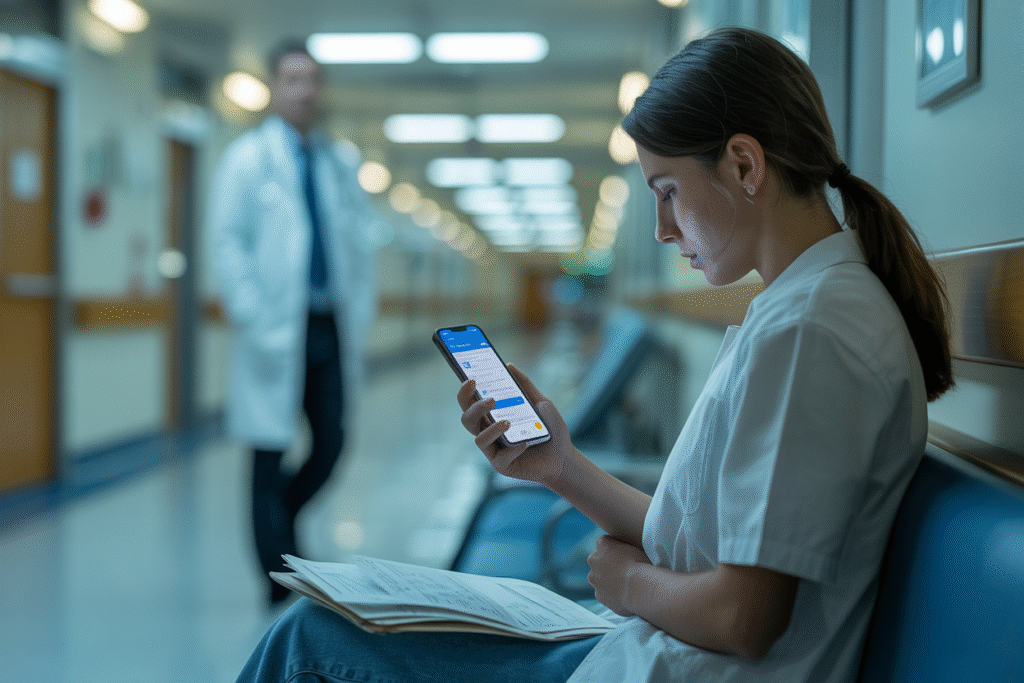

OpenAI shared her testimonial Monday morning. She describes months of confusing symptoms, vague dismissals from rushed clinicians, and late-night queries to ChatGPT that finally gave her language to fight back.

It wasn’t just emotional; it was tactical. Armed with AI summaries of peer-reviewed research, she requested imaging her doctor had skipped. Days later came the diagnosis — an aggressive but treatable cancer she might have missed.

Likes exploded: 680 in one thread alone. Comments split into two camps: miracle breakthrough versus reckless pseudo-doctoring. The phrase “patient empowerment” trended; so did “risky misinformation.”

Some physicians chimed in with sobering realities: What happens when the algorithm hallucinates? Who carries liability if delays or incorrect prompts lead to harm? The survivor’s next tweet addressed that worry: “ChatGPT didn’t replace my oncologist — it armed me to ask better questions.”

But asking better questions is exactly what regulators fear. If millions start second-guessing tests or drug choices based on chatbot answers, clinical workflows could unravel.

Inside the Ethical Battlefield Nobody Asked For

Healthcare ethics used to happen in conference rooms. Now it unspools in threads dripping with jargon like “risk-benefit asymmetry” and “algorithmic paternalism.”

Let’s break the crux points:

• Patient agency is skyrocketing. Instead of passively receiving a mysterious bill and a shrug, patients pull up summaries of peer-reviewed research on demand.

• Conversely, trust in expertise erodes. Some docs report patients arriving with 10-page AI printouts demanding medication switches.

• Meanwhile, risk profiles balloon. Models trained on open-web data can cite outdated studies, mirror confirmation biases, and hide hallucinations behind an authoritative tone.

Still think the insurance angle is boring? Think again. If ChatGPT nudges a patient toward an off-label drug that fails, which party pays? The actuarial tables haven’t caught up, and Silicon Valley keeps printing disclaimers in eight-point font.

Then there’s access equity. Affluent English speakers with broadband dominate prompt crafting. Rural clinics with minimal digital infrastructure can’t compete with “just ask the bot” medicine.

Regulators aren’t twiddling their thumbs. The FDA quietly published draft guidance last month on “software as a medical device,” and Congress is calling hearings about risk assessment for generative AI. Translation: lobbyists are already sharpening pitch decks.

Three Ways This Could Shape the Next Five Years of Healthcare

Ready for your crystal ball slide deck? Here’s where the politics, risk, and ethics intersect — with three realistic trajectories.

1. Symptom Checker Plus: Hospitals integrate locked-down medical models that ingest EHR data and deliver second opinions instantly. Think “Google Health” but actually regulated, heavily audited for hallucination drift, and liability wrapped tight. Patients win, doctors get backup, insurers foot the tech bill.

2. Wild West Gatekeeping: Tech giants tweak consumer versions to refuse medical advice entirely. Frustrated users migrate to open-source forks running on home GPUs, bypassing every safety net. The ethics crowd tweets furiously while real-world harm scales faster than recall campaigns.

3. Care Co-Pilot Ecosystems: Start-ups sell ChatGPT plugins certified by clinician boards, each query stamped with confidence scores, references, and a billable reconciliation note. Think TurboTax, but for oncology. Profit margins soar; meanwhile, bottom-tier clinics watch premiums spike as malpractice underwriters scramble.

Notice the unifying thread — no scenario leaves healthcare untouched by politics and regulation. The cancer survivor’s victory lap might look quaint in 2028 if AI politics harden into zero-sum showdowns between human expertise and algorithmic authority.

So, where does that leave everyday patients? Simple: the next time you feel a lump, Google won’t be enough — neither will blind trust in a prompt. The smartest move is balanced skepticism, layered protection, and policies that treat human expertise and machine assistance as teammates, not rivals.

Choose your side now — because the waiting room is about to get a whole lot louder.