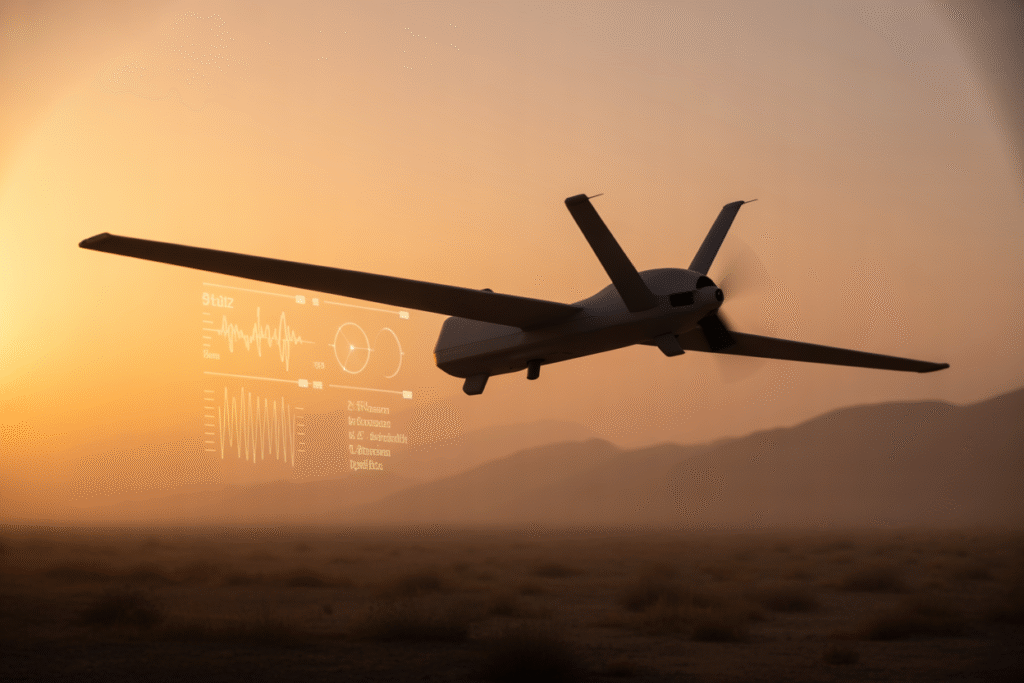

A whistleblower-heavy look at how the Pentagon’s new AI drone budget could reshape war—and your privacy.

The ink on the Pentagon’s $6-billion AI drone request is barely dry, yet generals, ethicists, and Silicon Valley execs are already sparring over what it really buys. Could these “defensive” drones quietly evolve into autonomous hunter-killers roaming skies anywhere? Let’s pull back the curtain.

Why $6 Billion Isn’t Just for Cameras Anymore

Picture this: a sleek drone the size of a dinner table dips under cloud cover, identifies a target, and delivers a lethal strike—all without human eyes on scene. That isn’t sci-fi.

The budget request, originally pitched as an upgrade to existing surveillance fleets, quietly folds in line items for biometric recognition software, 5G network integration, and experimental kill-chain algorithms. Engineers call it “edge autonomy”—software deciding who lives or dies based on facial geometry, gait, even loyalty signals mined from social media.

Critics argue this blurs the line between defense and assassination. Supporters counter that fast-moving threats demand faster-than-human response times. The stark truth? Those billions aren’t buying cameras with wings; they’re funding a decision engine that might outrank a soldier’s conscience.

Taxpayers hearing “drone” assume camera feeds and grainy night-vision. Inside program documents, it’s closer to the moral equivalent of Siri with Hellfire missiles.

From Rumor to Reality: The Deepfake-Nuclear Threshold

Deepfakes aren’t just Hollywood fun and political memes. The Center for Strategic and International Studies recently sounded an alarm that fabricated media could trigger an accidental nuclear launch during a tense India-Pakistan standoff.

Imagine a fake video depicting an attack on a nuclear facility going viral minutes before emergency hotlines close. Analysts scramble; AI systems absorb the false footage; automated early-warning systems twitch. This isn’t hypothetical. During the 2025 Kashmir skirmish, fabricated victory claims spiked on both sides, pushing actual troops to the brink.

AI-generated misinformation spreads faster than human verifiers can catch it, especially when algorithms echo prior biases. MIT simulations show a thirty-minute lag between a viral fake and its public debunking, precisely the window in which a nuclear missile’s launch sequence can complete.

Pentagon planners now inject synthetic-media alerts into every war-game round, recognizing that a well-timed deepfake could double as a digital first strike.

Inside DARPA’s Black Budget: Killer Swarms and Loyalty Algorithms

DARPA doesn’t do boring spreadsheets. A leaked slide deck labeled “Project LoyalNet” outlines a vision for drones using loyalty algorithms—code trained to predict a subject’s allegiance via everything from purchase history to last month’s emoji choices.

Two former insiders, who asked to remain anonymous, paint a darker picture. They describe miniature swarms programmed to hunt in overlapping Wi-Fi corridors. Once a subject’s confidence score drops below 30% favorable toward U.S. interests, the drones shift from observation to capture, or “neutralize if impossible,” in contractor-speak.

One engineer quit after a lab trial in which a drone triangulated a civilian’s phone because two social posts seemed vaguely pro-Beijing. No human hit the big red button; it was all on the algorithm.

When asked whether a transfer of lethal authority has ever mis-fired, the reply wasn’t comforting: “Define ‘mis-fired.’ If new parameters roll out at 3 a.m., and no one notices until morning news, does it count?”

The Ethicist vs. the Brass: Can AI Really Outrank a Soldier?

Both sides agree speed saves lives. Where the waters get muddy is who ultimately pulls the trigger. Generals insist machines remove “human error,” citing reaction times measured in milliseconds. Moral philosophers fire back over accountability gaps: who court-martials a faulty algorithm?

Congressional hearings in July grew tense. One captain testified, “I don’t want code that never served in Fallujah deciding who’s hostile.” A DOD lawyer replied, “Neither do we, but pilots blink.”

Practical gotchas pile up:

• Software updates arrive via the cloud, raising the risk of hacks.

• Facial recognition fails under infrared glare or dust storms.

• Civil lawsuits could target both coder and commander.

Yet the military sees the alternative (slower, human-only responses) as a homeland-defense vulnerability. We’re left with a paradox: machines designed to protect us may also push us out of the decision loop forever.

What Happens Next: Job Loss, Geopolitics, and Your Move

Analysts report Beijing is matching U.S. programs line item for line item, creating a textbook AI arms race. Venture capital is racing toward drone startups, fueling hype cycles that promise round-the-clock job displacement; job boards already list 2.3k roles for “drone ethicist” across departments.

Civilian commerce feels the fallout first. Agricultural mapping drones have their code retuned to support wartime facial recognition. Hobbyists unknowingly buy software patches tested in classified arenas.

Meanwhile, 5G towers crank up, not to stream TikTok more smoothly, but to shave milliseconds off the global kill chain.

The punchline? If you’re reading this, you’re already part of the experiment. Your darkest what-if could trend next week and become congressional testimony next month. So share widely, challenge loudly, and keep one eye on the skies, because somewhere above, a million lines of ethics code just decided maybe you’re loyal enough. Or maybe you’re not.

What do you think the sky should decide about you next time?