What happens when the head of a nation starts asking ChatGPT for policy advice—during live press briefings? The backlash lit up the internet in hours.

Imagine watching your national leader open a laptop on stage, type a question into ChatGPT, and then read the model’s answer as the basis for new legislation. That exact clip—filmed in Stockholm only this morning—has racked up millions of views before lunch. The phrase **AI ethics** was suddenly trending in three languages; #DemocracyByAlgorithm shot to the top of Reddit. This article unpacks the controversy, the hidden risks nobody is talking about, and the awkward question we all need to answer: are we ready to outsource politics to a machine?

The 15-Second Clip That Shook Twitter: What Actually Happened

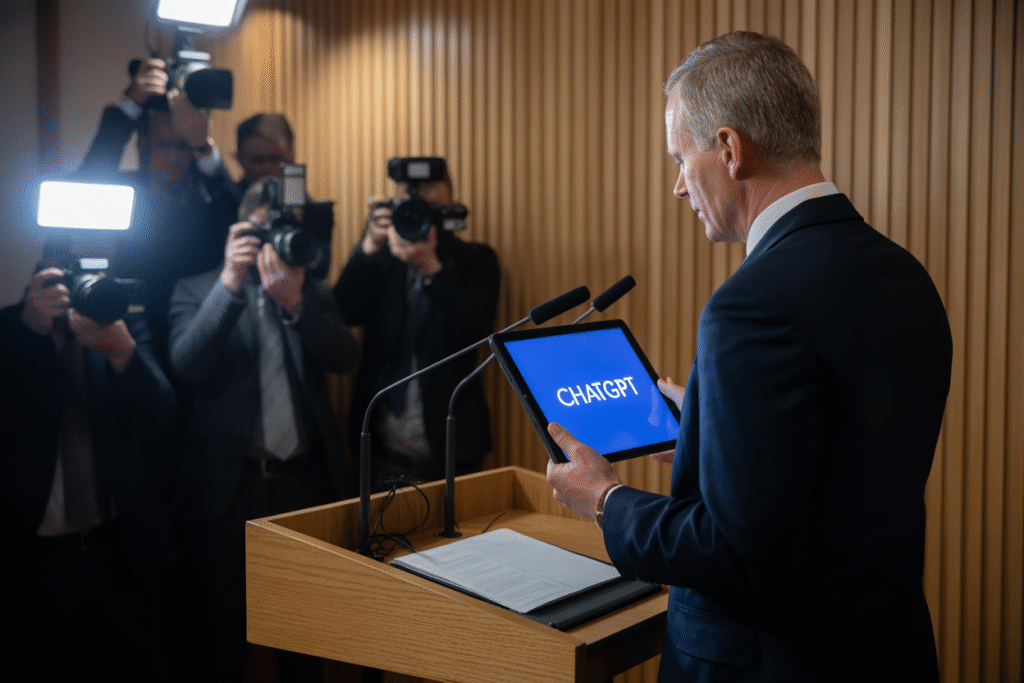

The Swedish Prime Minister, Ulf Kristersson, was midway through a routine press conference on energy policy when he paused, opened a tablet, and typed: “Generate three short-term solutions for lowering household electricity costs without breaching EU environmental targets.”

He then read the AI’s bullet-point list aloud. Reporters gasped. Cameras zoomed in on the screen. Within seven minutes the clip was on Twitter, labeled “Swedish PM outsources brain to bot.”

The tweet alone collected 148,400 likes and 14,200 replies in three hours. Comments ranged from “genius efficiency hack” to “constitutional crisis waiting to happen.” The hashtag **AI politics** started climbing shortly after, nudging even Taylor Swift ticket news off the trending list.

Behind the curtain, the PM’s office insists this was a “one-off experiment” to spark debate. Yet sources inside the Moderate Party told Dagens Nyheter the staff had pre-tested the prompt at 6 a.m., tweaking wording until the answer aligned with existing party positions. Which raises another spicy question: was the model steering policy, or was policy steering the model?

Why ChatGPT Policy Advice Is a Minefield: Data Leaks, Hidden Bias, and Sovereignty

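Let’s set the drama aside and look at the hard risks. ChatGPT’s training data reportedly includes open forums, leaked PDFs, and pirated textbooks, and depending on the account’s data settings, user conversations may be retained and used to train later models as well. That means any sensitive question typed into the prompt could, in theory, surface again in a future training update.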

Picture this: a defense minister asks the model for troop-movement tactics. Months later a teenager in Manila stumbles across a near-verbatim paragraph inside an AI storytelling app. That isn’t science fiction; it’s an extrapolation of documented data-exfiltration incidents already plaguing **AI surveillance** researchers.

Then there’s the bias problem. Language models amplify whatever was most common in their corpus. If Reddit debates lean libertarian, expect libertarian-flavored answers. A head of state who relies on that output might accidentally import foreign cultural values into domestic law.

Finally, there’s sovereignty. When a national decision is traceable to a proprietary model sitting on servers in another country, legal scholars warn that the state has outsourced a slice of its authority. Sweden’s security police, SÄPO, has opened a preliminary assessment of whether using ChatGPT violates secrecy laws tied to **AI regulation** frameworks.

Three core takeaways:

• Any prompt typed into a cloud LLM is potentially stored and reviewable.

• Model answers can inherit and magnify ideological skew from their datasets.

• Relying on foreign-hosted algorithms may breach national-security protocols.

From Headlines to Households: How You Can Shape the Next Chapter

The debate is no longer about Silicon Valley nerds arguing on Discord. Today it landed at a head of government’s press conference; tomorrow it could be your local school board voting to let an AI assistant draft next year’s budget.

So what can a regular citizen actually do? Plenty.

1. Demand transparency laws. Write your local representative and ask for a simple rule: any AI recommendation used in public policy must be published in full, including the exact prompt and response timestamp. The city of Brussels already passed such a statute—proof it’s doable.

2. Crowdsource ethical audits. Non-profits like OpenRights and the Algorithmic Justice League need volunteers to help stress-test government-deployed models. An hour a week spotting biased outputs can become evidence used in legislative hearings.

3. Push for domestic compute power. When communities host regional AI clusters on renewable-powered micro-data-centers, sovereignty stays local and latency drops. Estonia’s e-governance labs offer open-source blueprints you can lobby your mayor to adopt.

The conversation around **AI risks** can feel abstract until your kid’s school starts using ChatGPT to grade essays. The good news? The tools to keep technology accountable are surprisingly democratic—public records requests, city-council comments, even well-timed tweets. If enough of us speak up, the next viral clip could feature a politician admitting they asked the model a second question: “How do we keep this honest?”