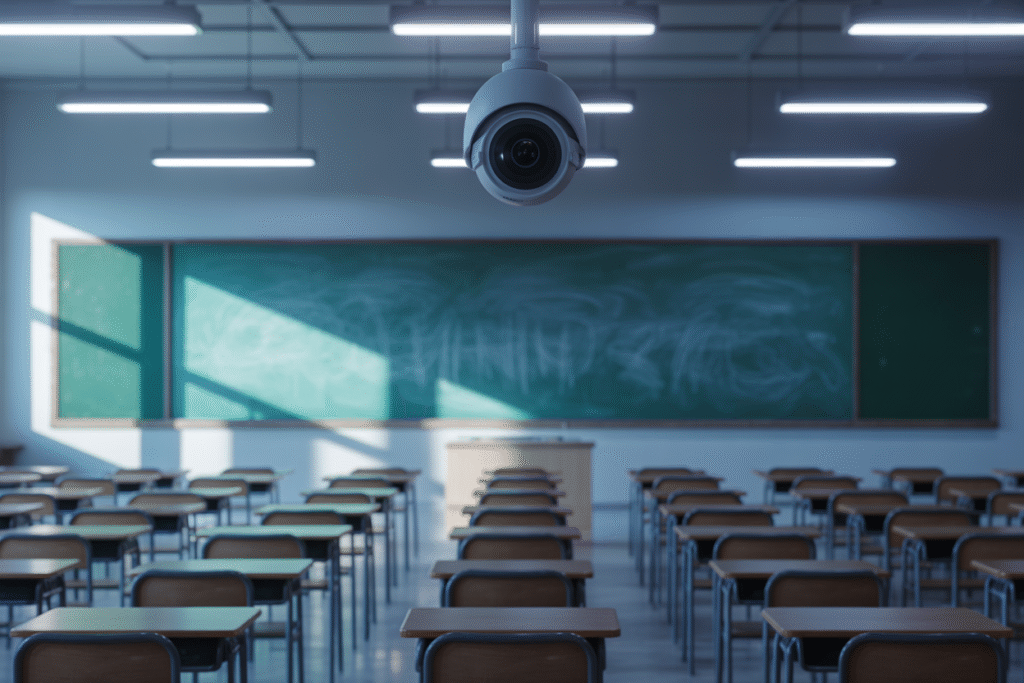

A quiet revolution is unfolding in US schools—AI systems scan every chat, photo, and email, promising safety but raising urgent privacy questions most parents never see.

Imagine a 13-year-old texting a friend between classes. She cracks a dark joke about a violent TV show. Within minutes, the AI surveillance software flags her message, alerts the principal, and triggers a chain of events that ends with handcuffs and a night in juvenile detention. This is not fiction; it is happening in classrooms across the United States right now.

The New Hall Monitors Are Lines of Code

Walk into many American schools this fall and you will still find lockers slamming and cafeteria pizza that looks like cardboard. Nothing seems out of the ordinary until you realize every keystroke on a school-issued Chromebook is being read by an algorithm.

Tools like Gaggle, GoGuardian, and Bark scan millions of emails, Google Docs, chat histories, even PDF artwork with emotion recognition that would impress the NSA. Their pitch? Spot threats before they explode.

At first principals loved the promise: state law often offers extra funding every time a school installs AI surveillance, so the tech spreads cheaper than bulk glue sticks. Parent signatures arrive pre-checked on digital permission forms, and everyone sleeps easier in a post-Parkland world.

Then the stories leak. A tenth grader’s love poem triggers a nudity alert. A suicide-prevention flag is raised because a child wrote, “This homework is killing me.” And in one Idaho middle school, a kid spends 21 hours in custody after AI mislabels sarcasm as intent to harm. When the record cannot be expunged, the headlines ask: are we trading real safety for the illusion of control?

The data echoes the unease. Gaggle alone processed 6.5 billion student interactions last year, sending more than 124,000 “self-harm alerts.” Yet only a few dozen incidents led to credible interventions, leaving schools drowning in false positives.

The Human Cost of a False Alert

Meet Ashley Rivera (name changed), the 13-year-old whose sarcastic text went viral for all the wrong reasons. She says the joke was aimed at fictional zombies; the algorithm read anguish and violence. By the time her mother arrived, school police were already photocopying the whole chat history.

What followed felt Kafkaesque. Ashley rode a squad car to juvenile intake, spent the night on a thin plastic mattress, and later faced a school disciplinary panel. Months later she still flinches every time a Chromebook chimes.

Multiply Ashley by hundreds. The Associated Press sifted through court filings from ten states and found students suspended for essay metaphors, suspended for fantasy short stories, even suspended after AI flagged a Shakespeare assignment as threatening. In most cases, the families paid legal fees that dwarfed a month’s rent.

School psychologists scramble to explain bias. Algorithms tend to misread African-American Vernacular English and expressions common in LGBTQ chat spaces. Black, brown, and queer students are therefore disproportionately swept into disciplinary pipelines. One counselor in Texas admits she spends Mondays “untangling weekend robot alerts” instead of counseling kids.

Meanwhile, tech companies tweak models behind closed doors. There is no federal requirement that vendors publish false-positive rates. Parents have to file Freedom of Information Act requests just to see what data was collected on their child in the first place.

Where We Go From Here

The obvious question: does this technology actually make schools safer? Researchers at the University of Michigan examined 600 districts over six years and found no statistically significant drop in incidents of self-harm or violence in schools that deployed AI surveillance versus those that did not. The study made headlines but changed few policies; budget cycles, lobbying power, and fear-driven news coverage are hard to unwind.

Some states are pushing back. New York recently passed a law requiring districts to notify parents within 24 hours of any AI flag and to perform annual bias audits. California may go further, forcing vendors to open their source code to third-party scrutiny.

Parents also carry leverage. A growing parent-teacher alliance in Ohio rolled out “opt-out” calendars showing how to disable monitoring extensions district-wide. The movement relies more on community organizing than courtroom battles—and districts are listening.

Yet the bigger threat looms beyond education. If today’s students internalize indiscriminate monitoring as normal, tomorrow’s adults may accept the same in workplaces, public transit, private homes. The stakes shift from hallway discipline to democracy itself.