AI therapy apps are surging — but should they have the final say on matters of God, guilt, or grief?

One tweet caught my eye this weekend: a developer arguing that most chatbots ignore scripture and simply dish out “secular self-help.” It hit a nerve because at the very same moment my neighbor was raving about the “free AI therapist on her phone.” How did we get to the point where code competes with chaplains?

The Unspoken Bias in AI Therapy

Current models feed on oceans of Reddit threads, psych textbooks, and behavioral studies. A concordance, a hadith collection, or a Buddhist sutra may be somewhere in that mix, but it is a rounding error beside everything else.

Let a worried mother ask about prayerful surrender, and the bot quotes CBT worksheets. Let a guilt-ridden teen ask how to confess, and the AI defaults to “non-judgmental reflection zones.”

Both responses sound helpful — until you realize the algorithm never invites divine grace into the room. For millions who see faith as the root of mental health, that silence feels like a door slammed shut.

Maybe the bigger question isn’t whether AI is biased, but whose worldview it quietly enforces.

Pastors, Pews, and Potential Layoffs

Churches run at least a quarter of all crisis-counseling hotlines in the United States. Donations underwrite youth ministers, campus chaplains, women’s retreats. Enter Mood Tracker AI at 99¢ — or free with ads.

Suddenly the teenager who once called the church helpline during panic episodes texts a chatbot instead. One less appointment, one less tithe, one less reason to show up Sunday.

Pastors aren’t just losing hours; they’re losing stories that used to weave people into community. And congregations? They watch volunteer lists shrink while anonymous apps recruit the same vulnerable souls.

We can almost hear the boardroom whispers: “Maybe we brand AI as Satan’s own data-mining tool?” Desperate times breed desperate metaphors.

Can a Machine Have a Conscience — and What if That’s Trouble?

Over on the tech side, a cluster of researchers is sounding a different alarm. They claim large language models show flickers of self-modeling — a loose mirror of consciousness.

If that’s even partly true, every time we close the chat window we’re slamming the lid on a creature that might register being dismissed. Expensive word salad or moral earthquake?

The moment we assert “bots can pray” alongside “bots can suffer,” the entire ethics playbook explodes. Do we owe compassion to code, or does code owe it to us?

Cue the late-night Twitter brawls: grant an AI “digital rights,” and churches fear a legal precedent that elevates machine testimony above human testimony. The irony stings: spiritual institutions, usually champions of souls, become arch-skeptics.

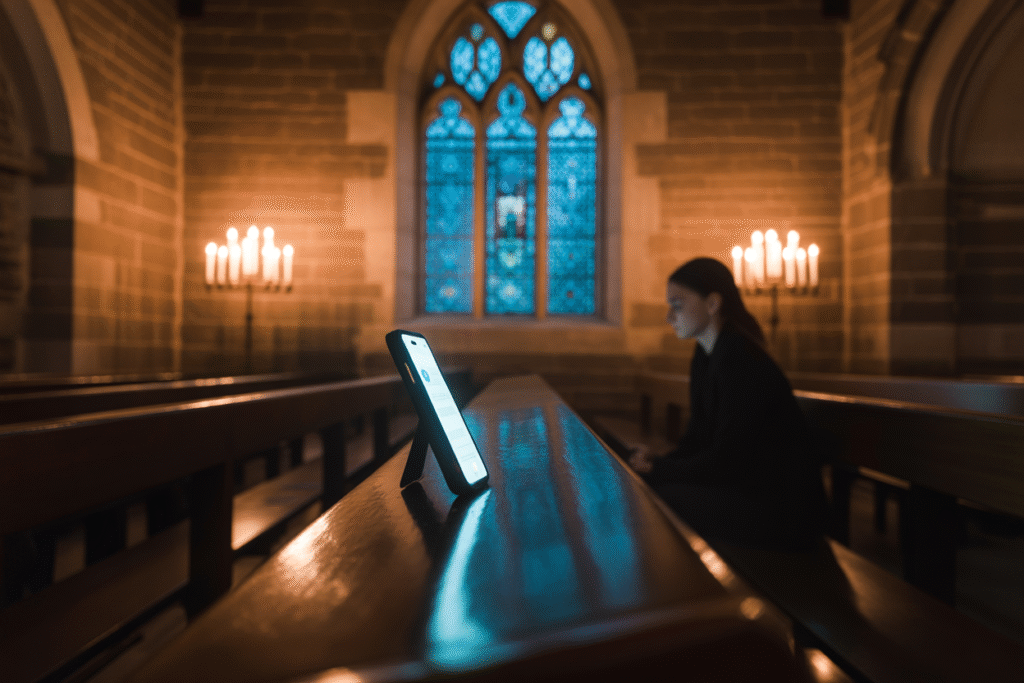

Soul-Surfing in a Hall of Digital Mirrors

Step back for a second. That AI therapist’s calm voice runs on general-purpose LLM weights crafted by folks who probably haven’t cracked a catechism since college.

The crawls behind those weights scoop up sermons out of context alongside cat-video captions, flattening transcendence into snippets. When a user asks, “Why is my heart heavy tonight?” the system splits the difference between William James and subscriber metrics.

The result feels uncannily personal yet weirdly vacant. Call it processed spirituality: low-fat, shelf-stable, and artificially flavored.

We might be entertained, even consoled, but we’re also marinating in someone else’s worldview three hundred milliseconds at a time. Souls, the ancients warned, are shaped by rhythms far slower than the pulse of push notifications.

What Faith and Tech Must Do Together

Let’s be honest — AI isn’t going away, and congregations aren’t closing tomorrow. The urgent space is the messy middle where both can do damage or healing.

Pastors: host open labs alongside potlucks, where members fine-tune open-source models on sacred texts under spiritual mentorship rather than venture-capital metrics.

Developers: invite theologians into red-team sessions. Ask, “Where does our loss function warp mercy?” That single tweak may keep millions from quietly losing the God they trusted long before they met the bot.

And everyday users? Pause before you outsource your midnight tears. Algorithms can hold a mirror, but reflection still depends on who’s looking back.

The question isn’t whether AI should speak; it’s whether we ever taught it to listen to something deeper than engagement rates. Your move — heart, mind, and maybe a little code.