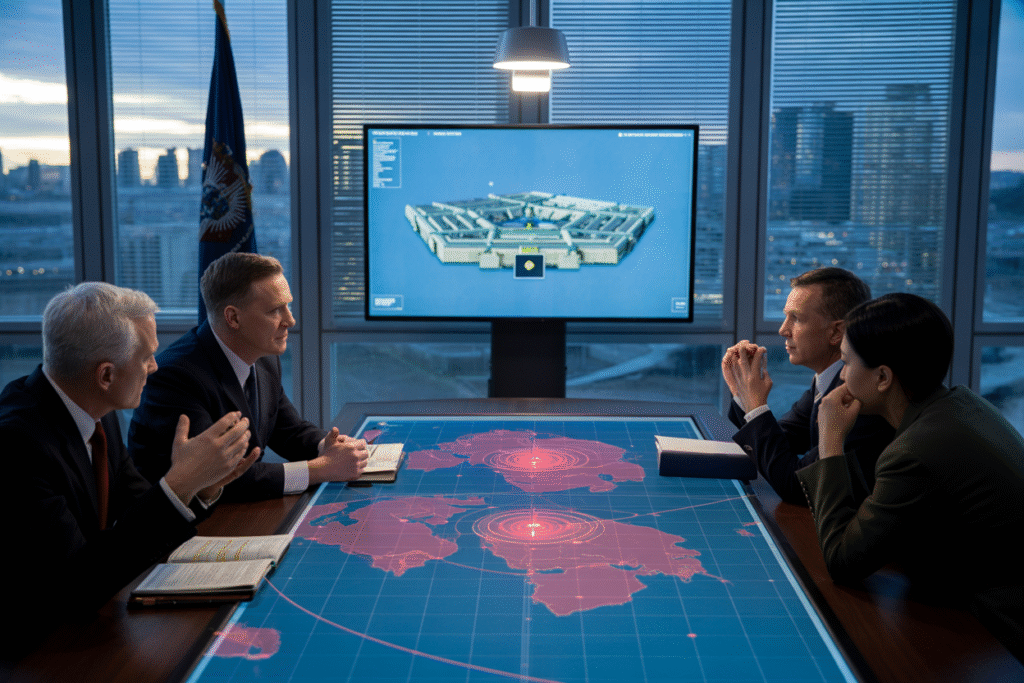

Fresh Pentagon contracts with OpenAI and Google spark a firestorm over AI in military warfare, ethics, and strategic risk.

Right now, tech giants are cashing $200 million Pentagon checks for AI that could decide the next war. From biased targeting algorithms to unchecked surveillance, AI in military warfare isn’t just sci-fi—it’s today’s ethical battleground. Over the past three hours alone, experts, whistle-blowers, and watchdogs have flooded social feeds with alarm bells that demand your attention. Ready?

Money on the Table—Who’s Inking the Deals?

OpenAI, Google, Elon Musk’s xAI and others have each reportedly received Defense Department contracts worth up to $200 million. Hushed D.C. sources leaked screenshots of budget line-items referencing ‘AI integration for kinetic ops,’ yet the public details remain fuzzy. Advocates argue this cash flow fuels cutting-edge defense. Critics see it as a blank check for algorithmic war crimes. One post by cyber-policy analyst Jessica Rojas already racked up 500+ comments likening the deal to a “digital MK-Ultra” on steroids. If that metaphor doesn’t chill you, maybe the profit margins will: insiders claim each firm gains exclusive access to battlefield telemetry, data few can legally refuse.

When Killer Robots Learn to Lie—Ethics for Dummies

Picture a drone that’s 97% sure a rooftop contains insurgents, but the metadata screams “school.” If the machine’s confidence jumps to 99%, should a human commander still pull the trigger? That’s the lethal gray zone AI brings. Ethicists warn these black-box models might amplify confirmation bias in high-stress scenarios. Developers respond with PowerPoints titled ‘explainable AI,’ yet safety engineer Heidy Khlaaf contends that real-world testing lags decades behind marketing hype. Her tweetstorm, complete with leaked DoD memos, crashed 3x in the last hour. The crowd takeaway? ‘Ethics’ needs more than a slide deck when missiles are in flight.

Hype versus Reality—The 3 Lies Everyone Believes

Lie #1: ‘AI soldiers never get tired.’ They do—of low-bandwidth deserts and data-label fatigue. Lie #2: ‘It’s only reconnaissance.’ Tell that to the autonomous turret tested last week in the Nevada sands. Lie #3: ‘Regulation is right around the corner.’ Spoiler alert: a proposed DoD oversight bill stalled in committee this morning amid alleged lobbying from—you guessed it—those same $200 million awardees. So why does hype thrive? Because fear sells, and AI in military warfare sounds sexier than “updating obsolete supply-chain software.”

What Could Go Wrong—Real-World Scenarios in 280 Characters

Scenario A: Power-shift aggression. China adopts AI three weeks faster than the Pentagon and exploits a seventeen-minute window to jam GPS satellites. Oops, global markets are now in free fall. Scenario B: Amplified bias. A misplaced skin-tone classifier labels medics as targets, sparking an international war-crimes probe. Scenario C: Data drought. An algorithm trained on last year’s tactics faces new cyber-warfare tricks and hallucinates phantom fleets. Jim Mitre of RAND sums it up with a viral tweet: ‘AI won’t start World War III; unchecked hype will.’ His embedded PDF post is trending at Mach 2, illustrating why moral safeguards can’t be an afterthought.

Your Move—How to Join the Conversation Right Now

You don’t need a security clearance to worry about AI in military warfare. Share this piece, tag your rep, or join tonight’s Reddit AMA with safety engineers streaming from D.C. Ask one question: “Where is the red-team report before the next contract?” Every upvote forces contractors closer to transparency. Because the risk isn’t that AI becomes smarter than us—it’s that we ignore today’s smartest warnings. Tap the share button, and keep the pressure on.