From Silicon Valley boardrooms to secret propaganda labs, AI is quietly rewriting the rules of war.

AI isn’t just changing your Netflix queue; it’s reshaping how wars are fought. From algorithms that decide who lives on a battlefield to deepfakes that could swing an election, the stakes have never felt higher. This isn’t tomorrow’s problem; it’s today’s arms race, and we’re all in the crosshairs.

The Potsdam Call: When Code Holds the Trigger

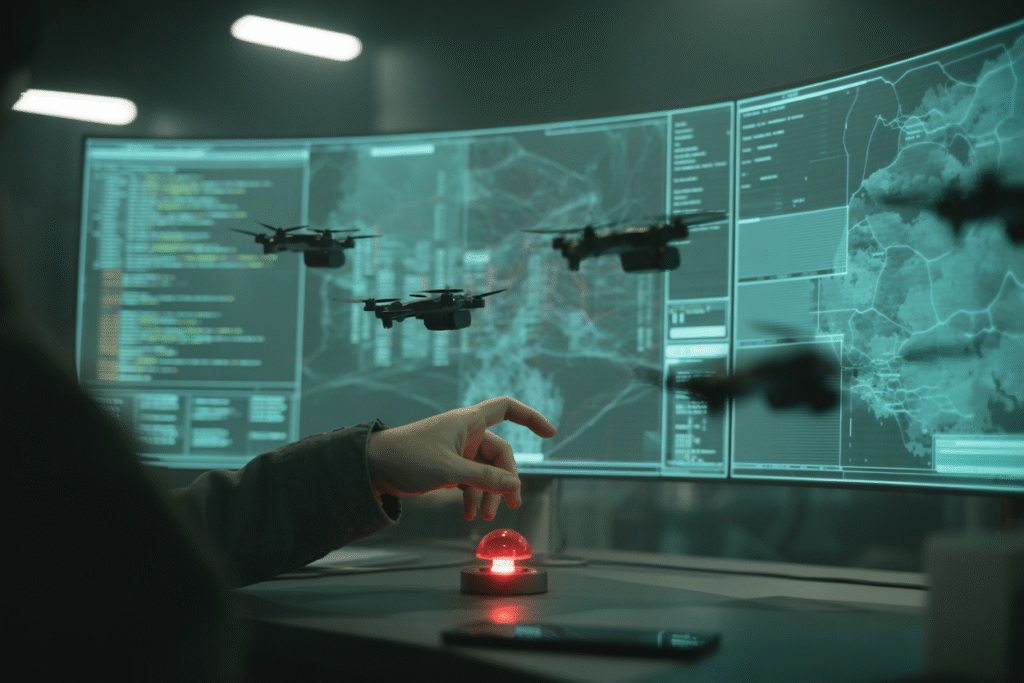

Picture this: a swarm of drones decides who lives and who dies before a human even blinks. Sounds like sci-fi, right? Yet that’s the exact scenario 34 heavyweight academics, including internet pioneer Vint Cerf, are begging world leaders to prevent. Their Potsdam Call, dropped on the 80th anniversary of Hiroshima, doesn’t mince words: autonomous weapons are the next nuclear moment.

The manifesto warns that once AI is given the literal trigger, the threshold for war drops through the floor. A single algorithmic miscalculation could ignite conflicts faster than any diplomatic hotline can cool them. Proponents argue these systems save soldiers’ lives by replacing boots on the ground. Critics fire back that delegating kill decisions to code erodes the last shreds of human accountability.

The stakes? Nothing less than a future where “oops” becomes an acceptable battlefield apology.

From Hoodies to Helmets: Silicon Valley’s War Pivot

Google used to brag about “don’t be evil.” Now Silicon Valley is writing love letters to the Pentagon. Google, Meta, and OpenAI, once squeamish about anything that went boom, are chasing defense work. Executives from Meta and OpenAI have even been sworn in as Army Reserve officers, and the industry is pitching AI that can spot a sniper before the sniper spots you.

The pivot is dizzying. Remember Google’s 2018 employee revolt over Project Maven? That same company now touts AI-powered drone analysis as a patriotic duty. OpenAI, which once banned military use, quietly opened the door to “defensive” applications. Meta’s VR headsets aren’t just for gamers anymore; they’re training soldiers in hyper-realistic combat sims.

Why the sudden group hug with generals? Money, momentum, and a dash of existential fear. Pentagon contracts are billion-dollar golden tickets, and no CEO wants to watch rivals cash in. Meanwhile, China’s public-private military AI sprint has Silicon Valley convinced that opting out equals falling behind.

The upside: smarter surveillance, faster threat detection, fewer human casualties. The downside: opaque algorithms deciding who’s a combatant, job losses in traditional intel units, and the creeping normalization of AI-driven warfare. One whistleblower’s warning sums it up: “We’re teaching machines to kill, but we still can’t teach them remorse.”

Six Ways AI Could Accidentally Start the Next War

Imagine a world where the first shot isn’t fired by a soldier, a president, or even a hacker—but by an algorithm that misreads satellite shadows. Researchers have sketched six chilling ways AI could accidentally start World War III.

1. Power-shift panic: If China’s AI-enhanced navy looks unbeatable, rival nations might strike first while they still can.

2. Lowered threshold: Autonomous drones make small, “risk-free” attacks tempting, sliding us into conflicts we’d normally avoid.

3. Bad training data: A skewed training set convinces an AI that a civilian convoy is a missile launcher. Boom.

4. Speed mismatch: Machines react in milliseconds; humans need minutes. By the time a general picks up the phone, the missiles are already airborne.

5. Sabotage spoofing: Adversaries feed false data, tricking friendly AI into attacking its own side.

6. Escalation spiral: Each side’s defensive AI misinterprets the other’s moves as offensive, creating a feedback loop of retaliation.

The fix isn’t unplugging the machines—it’s building AI that knows when not to pull the trigger. Easier said than programmed.

Deepfakes in the Gray Zone: China’s AI Propaganda Playbook

While we debate drones, China’s AI is already waging a quieter war, one fought with deepfake anchors, tailored memes, and algorithmic rumor mills. Researchers recently unmasked GoLaxy, a Beijing-linked firm that uses AI to build profiles of U.S. politicians and to pump hyper-realistic propaganda and disinformation into Taiwan’s social feeds.

Welcome to gray-zone conflict: actions that stay below the threshold of open war yet are too damaging to ignore. GoLaxy’s “GoPro” system can spin a fake news video in minutes, complete with cloned voices and doctored footage that fools even savvy viewers. The goal isn’t victory on a battlefield; it’s chaos in a newsfeed.

Traditional counter-propaganda teams can’t keep up; they’re outgunned by bots that never sleep. Meanwhile, democratic societies struggle to balance free speech against national security. Ban the tech and you stifle innovation. Ignore it and you risk elections swayed by code instead of citizens.

The takeaway? The next war might be won not with tanks or treaties, but by whoever writes the better algorithmic lie.

Your Move: How to Stay Human in an AI Arms Race

So where does this leave us—humans caught between killer robots and killer tweets? The common thread is deceptively simple: every line of military AI code is a policy decision in disguise.

We need rules that move as fast as the algorithms. That means international treaties banning fully autonomous kill decisions, mandatory audits for military AI training data, and whistleblower protections for the engineers who spot ethical landmines.

But tech moves faster than diplomacy. While diplomats debate commas, startups ship updates. The real leverage lies with us—voters, consumers, and coders—demanding transparency before the next deployment. Ask your reps if they’ve read the Potsdam Call. Ask your favorite app if it sells data to defense contractors. Ask yourself if “convenience” is worth an algorithm deciding who lives.

The future of warfare isn’t pre-written; it’s compiled daily by choices we make right now. Choose wisely—before the machines choose for us.