A mother’s claim that her son was silenced after exposing OpenAI’s secrets has ignited global debate on AI ethics, risks, and the price of progress.

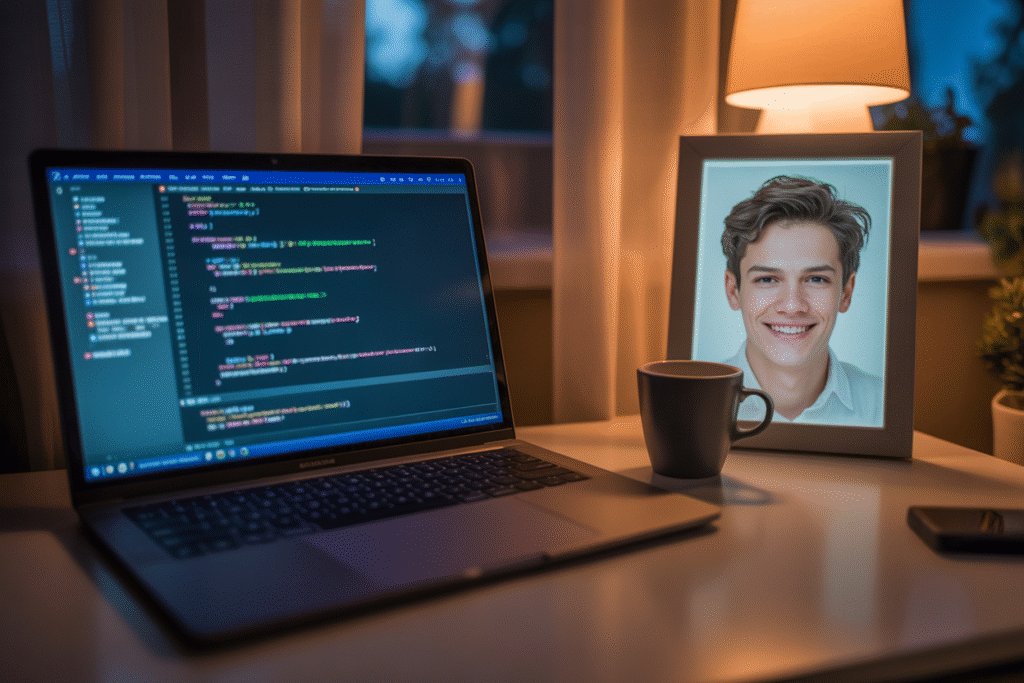

When a former OpenAI engineer is found dead after blowing the whistle on alleged copyright violations and unchecked AI development, the story stops being about code and starts being about conscience. What really happened to Suchir Balaji—and what does it say about the industry racing to replace us?

The Whistleblower Who Refused to Stay Quiet

Suchir Balaji wasn’t just another coder in a hoodie. He was an engineer who helped build ChatGPT, then turned around and warned the world that the company might be infringing copyright on a massive scale.

His mother, Poornima, says he uncovered documents showing OpenAI trained on copyrighted material without consent. When he spoke up, she claims, the company froze him out.

Friends describe him as meticulous, almost stubborn in his pursuit of fairness. He wasn’t chasing fame; he was chasing accountability.

Then came the knock on the door. Police ruled his death a suicide. His family insists he showed no signs of depression.

Suddenly, the debate over AI replacing humans feels less abstract and more painfully personal.

Elon Musk’s Cryptic Echo

Elon Musk didn’t need paragraphs to fan the flames. A single “!!” in reply to Griffin Musk’s post about whistleblower retaliation was enough.

Griffin had shared his own brushes with AI ethics, hinting at pressure inside tech giants to silence dissent. Musk’s reply felt like a bat signal to conspiracy theorists.

Within minutes, timelines exploded with theories ranging from corporate hit squads to deep-state cover-ups.

Whether you believe the hype or not, the message is clear: when AI ethics clash with billion-dollar valuations, the humans in the middle pay the price.

The Documents That May Never See Daylight

Balaji reportedly copied sensitive files before leaving OpenAI. His mother says those files detail training data scraped from books, articles, and paywalled sites.

Legal experts argue the files could strengthen lawsuits already circling OpenAI. Tech defenders counter that all large language models learn from public text.

The catch? The files are now in legal limbo, tied up in probate court and nondisclosure knots.

Meanwhile, artists and writers whose work may have been fed into the machine watch anxiously, wondering if their words helped build the very tool that could replace them.

Every day the documents stay sealed, the rumor mill grinds faster.

Regulators, Reporters, and the Race for Transparency

Congressional aides confirm at least two committees have requested briefings on whistleblower protections in AI firms. The EU’s AI Act drafters cite Balaji’s case in new transparency clauses.

Journalists face a dilemma: chase the story aggressively and risk glorifying tragedy, or hold back and let corporate narratives dominate.

OpenAI’s official statement emphasizes employee well-being and denies any retaliation. Critics call it textbook damage control.

The broader public, scrolling between headlines and memes, is left asking a simple question: if AI is built on stolen creativity, who owns the future it promises?

Until regulators force disclosure, the answer may depend on which voices survive the next news cycle.

What Happens When the Code Outlives the Coder

Balaji’s GitHub profile still shows commits from last month. His code runs every time someone prompts ChatGPT for a poem or a legal brief.

Irony hangs heavy: the engineer who questioned the machine now powers it posthumously.

Activists are crowdfunding a memorial scholarship for ethical AI research. Venture capitalists, meanwhile, wonder if this scandal will slow the next funding round.

The uncomfortable truth is that AI replacing humans isn’t a future scenario—it’s already reshaping livelihoods, reputations, and even obituaries.

The only variable left is whether we choose to write the next chapter with transparency or silence.