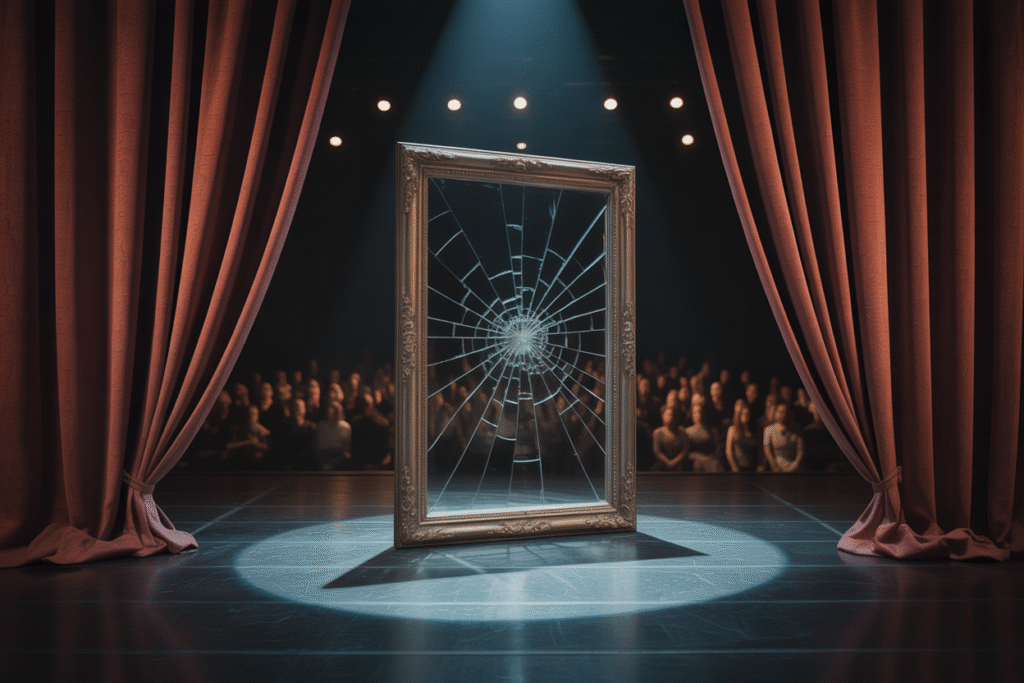

Behind glossy ethics reports and star-studded panels, AI giants may be selling us a story while the real drama unfolds in the data shadows.

Last night, while most of us were scrolling memes or binge-watching, a single post lit the internet on fire. Crypto analyst NoraXBT called out the biggest names in AI for what she dubbed “ethics theater”—a choreographed show of responsibility that distracts from biased data and unchecked power. Her thread exploded with likes, retorts, and quote-tweets, proving one thing: people are hungry for the unfiltered truth about AI replacing humans. Let’s unpack why this matters, who stands to lose, and what we can actually do before the curtain falls.

The Spotlight Moment

Nora’s post dropped at 11:47 p.m. UTC. Within minutes, replies stacked up like planes over Heathrow. She pointed to glossy PDFs, diversity dashboards, and ethics officers who vanish after funding rounds. Commenters echoed the same fear: are we watching a magic trick while the real data stays dirty?

The thread’s virality wasn’t luck. It tapped a nerve we all feel but rarely name—distrust of corporate storytelling. When OpenAI tweets about safety or Google posts a feel-good blog, we nod politely, then wonder what’s happening behind the curtain.

Smoke, Mirrors, and Training Data

Here’s the uncomfortable truth: models are only as clean as the data they swallow. If the crawl includes Reddit rants from 2014, paywalled news scraped without consent, or mug-shot repositories, the output carries that bias like a bad smell.

Nora argues the fix isn’t another committee; it’s scrubbing the data supply chain. She shouted out @JoinSapien, a startup promising transparent data provenance. The crowd reaction was split. Some cheered a potential hero; others rolled their eyes, muttering “another promise.”

Red flags to watch for in training data:

– Outdated demographic labels

– Copyrighted art used without license

– Toxic forums laundered through anonymization

– Pay-to-play datasets that overrepresent affluent voices

Regulators Racing a Bullet Train

Entrepreneur Lauren Marie joined the fray with a chilling analogy: AI capability is a bullet train, and regulation is a horse-drawn cart. Every week, new features launch before lawmakers finish their coffee.

She sketched a near-future hospital where an AI triage tool—approved in a rush—misreads darker skin tones, delaying critical care. The scenario feels ripped from Black Mirror, yet policy drafts still sit in committee.

If a model harms someone today, whose fault is it tomorrow: the coder, the CEO, or the regulator who blinked?

The 40% Job Shockwave

User @hstrkkrm crashed the conversation with a claim that froze timelines: 40% of jobs could vanish within five years. Not in some distant decade, but before today’s newborns reach kindergarten.

He painted a picture of digital feudalism: a handful of companies owning the AI stack, while 100 million workers scramble for gig scraps. History buffs countered that tractors didn’t end farming, but tractors didn’t learn to write legal briefs or mix hit songs overnight.

Sectors already wobbling:

– Customer support agents, as chatbots take over

– Entry-level coding bootcamp grads

– Stock photo illustrators

– Paralegal document review

– Fast-food drive-thru operators

What You Can Do Before the Credits Roll

Feeling small under the stadium lights? You’re not. Everyday choices add up like pixels on a 4K screen.

First, demand receipts. When a company brags about “responsible AI,” ask for the data audit trail. Second, diversify your skills—creativity plus code beats code alone. Third, support platforms pushing open provenance, even if their interfaces look clunky.

Share this article with one friend who still thinks ethics theater is just a popcorn flick. The show is live, the stakes are real, and the audience is us.