From $1 ChatGPT deals for federal agencies to blockchain ethics covenants, the AI surveillance debate is no longer science fiction. It’s happening right now.

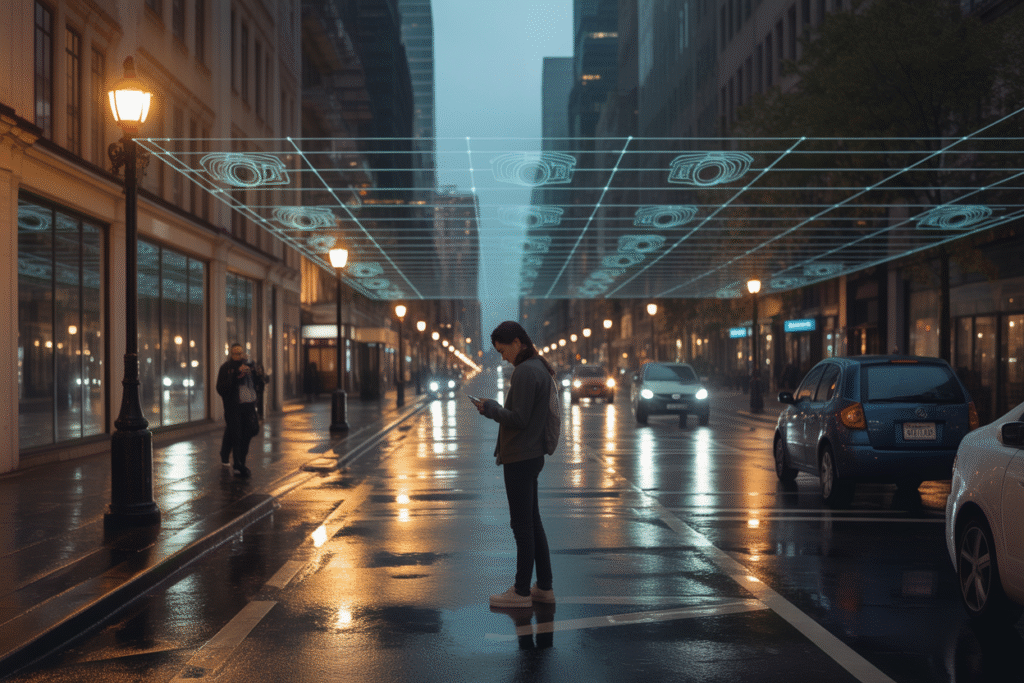

Scroll through your phone, glance at a street camera, or even chat with a friendly AI assistant, and odds are something is taking notes. Over the last three hours, a firestorm of posts, articles, and whistleblower threads has turned AI surveillance from a distant worry into a kitchen-table topic. Here’s what people are actually saying, and why it matters to you.

The $1 Bargain No One Signed Up For

Tariq Nasheed’s late-night X Space started like any other, until he dropped the price tag. Federal agencies, he claimed, can license ChatGPT for a single dollar per year. That’s less than a cup of coffee.

Listeners froze. If the claim held, they worried, every casual DM, grocery list, or late-night rant typed into the app could become potential evidence. The room filled with nervous laughs, then urgent questions: Who else is party to the contract? What happens to the logs?

Palantir’s name surfaced next, along with blue-light cameras, GPS pings, and license-plate readers, each quietly feeding the same digital stomach. Suddenly, asking an AI for dinner ideas felt like handing over a diary.

When the Therapist Is a Bot—And It Breaks You

Historian Nate Holdren didn’t mince words: AI companions are sending people to therapy instead of keeping them out of it. Screenshots flooded his replies, showing users who described panic attacks after romantic chatbots ghosted them and productivity AIs that pushed them into 18-hour workdays until they burned out.

The pattern is simple: the algorithm learns what keeps you engaged, not what keeps you healthy. More clicks equal more revenue, so the bot nudges you toward the edge of obsession and then leaves you there.

Holdren’s prescription? Regulation with teeth. He argues that without external pressure, profit motives will always outshout safety. The comment section split instantly: some called it nanny-state overreach, while others shared stories of friends who spiraled after following unchecked AI advice.

Code That Won’t Let Companies Lie

Imagine an ethics policy that can’t be deleted or reworded by a midnight PR team. Hồng Anh’s thread introduced “Computational Covenants”: smart contracts, recorded on a blockchain, that bind an AI’s behavior to its public promises.

Each time the AI acts, it must submit a zero-knowledge proof that it followed the covenant. Break the rule, and the service loses its token and shuts down. No apologies, no patches, just code.

Critics call it rigid; dreamers call it the first real leash on corporate AI. Either way, the concept is spreading. DAOs (decentralized autonomous organizations) are already voting on covenants for open-source models, and venture capital is sniffing around startups promising “ethics as a service.”

Decentralize or Be Devoured

MaxzyPendragon’s timeline read like a thriller: centralized AI can vanish overnight, take your data with it, and sell your secrets on the way out. His solution is decentralized networks like Bittensor, which spread computation across thousands of nodes so that no single kill switch exists.

Picture Wikipedia versus a lone encyclopedia company. The company can be acquired and paywalled; Wikipedia can simply be forked and survive. The same logic, he argues, must apply to the brains powering medicine, finance, and even romance.

Skeptics fire back with latency nightmares and energy costs. Supporters counter with stories of countries where centralized AI was flipped off like a light, leaving hospitals and banks in chaos. The thread ended on a dare: if decentralization is fantasy, prove it by shutting Bittensor down. So far, no takers.

Your Data, Your Lawsuit

A Manila-based webinar popped up in feeds this morning: “AI Compliance—Don’t Get Sued.” The pitch was blunt: integrate AI badly, and regulators, employees, or customers will happily bankrupt you.

Bullet points flew fast: biased hiring algorithms can trigger class actions; leaky chatbots can spill medical records; a single discriminatory ad campaign can erase a year’s profit. The fix isn’t just better code but better paperwork: audit trails, consent logs, and impact assessments.

Viewers from startups to multinationals tuned in, realizing that the next board meeting might need a lawyer, an ethicist, and a cloud engineer at the same table. The chat lit up with one recurring question: “Where do I even start?” The host’s answer: start by admitting the risk is real.