When AI slips up on movie titles, it’s funny. When it misdiagnoses cancer, it can be fatal. Here’s why transparency is the new safety net.

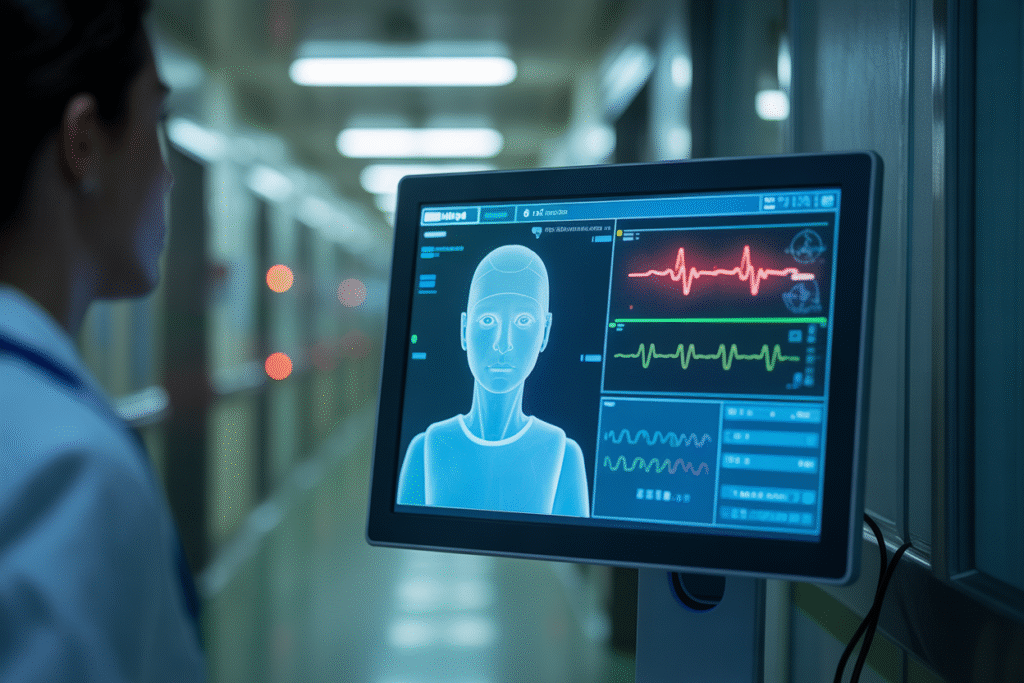

Picture this: you’re in a hospital gown, heart racing, while an AI scans your test results. The screen flashes a diagnosis in seconds, but what if the algorithm missed a subtle clue? Suddenly the kind of glitch that would be harmless in a chatbot becomes a life-altering mistake. In the next few minutes we’ll unpack how today’s smartest systems can swing from trivial to tragic, and what we can do about it.

The Quiet Leap from Oops to OMG

We laugh when a chatbot calls Star Wars “Space Trek,” yet the same tech is now reading X-rays, approving loans, and flagging parole cases. The leap from meme to medicine happens so fast that society hasn’t updated its safety nets. One flawed training set, one biased data slice, and the stakes rocket from zero to existential. The scariest part? Most users never see the gears turning inside the black box—they only see the final answer stamped with robotic confidence.

Transparency Isn’t a Feature—It’s a Lifeline

Imagine an AI that shows its homework: every data point it weighed, every rule it applied, every uncertainty it flagged. Doctors could catch a misread shadow on a lung scan before treatment begins. Judges could spot a demographic skew before sentencing. Developers call this “explainable AI,” but the rest of us can simply call it honest. Three quick wins show why this matters:

• Auditable logs let regulators trace errors back to source data

• Visualized reasoning helps clinicians override bad calls without guessing

• Public scrutiny crowdsources bias detection and can surface problems an internal QA team would miss

Much of the tech already exists; the missing piece is cultural will.
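To make "showing its homework" concrete, here is a minimal sketch in Python. It is a toy, not a real diagnostic model: the feature names, weights, review band, and the `explain_prediction` helper are all made up for illustration. The idea is simply that every answer travels with the factors that produced it, an uncertainty flag, and an audit record that can be traced back to the exact input.

```python
import hashlib
import json
import math
from datetime import datetime, timezone

# Hypothetical weights for a toy risk score; NOT a real clinical model.
WEIGHTS = {"nodule_size_mm": 0.30, "patient_age": 0.02, "smoker": 0.80}
BIAS = -4.0
REVIEW_BAND = (0.35, 0.65)  # assumed range where the model is too unsure to trust


def explain_prediction(features, model_version="toy-0.1", audit_log=None):
    """Score one case and return the answer together with its reasoning."""
    # Per-feature contribution to the score: the "homework" a reviewer can read.
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-score))  # logistic function

    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        # Hash of the exact input so an auditor can match this record to source data.
        "input_sha256": hashlib.sha256(
            json.dumps(features, sort_keys=True).encode()
        ).hexdigest(),
        "contributions": contributions,
        "probability": round(probability, 3),
        # Flag borderline calls for a human instead of stamping them with confidence.
        "needs_human_review": REVIEW_BAND[0] <= probability <= REVIEW_BAND[1],
    }
    if audit_log is not None:
        audit_log.append(record)  # a real system would use append-only storage
    return record


log = []
result = explain_prediction(
    {"nodule_size_mm": 12, "patient_age": 60, "smoker": 1}, audit_log=log
)
borderline = explain_prediction(
    {"nodule_size_mm": 8, "patient_age": 60, "smoker": 0}, audit_log=log
)
print(json.dumps(result, indent=2))
```

In this sketch the second case lands inside the review band, so it is flagged for a clinician rather than reported as a confident answer. Real explainability tooling is far more involved, but the shape is the same: answer, reasons, uncertainty, and a paper trail.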

Building Glass Engines Before the Crash

So how do we move from opaque oracles to glass engines? Step one: bake traceability into the model architecture rather than bolting it on later. Step two: reward companies for openness the same way we reward them for speed, for example with tax credits for transparent audits. Step three: teach users to demand explanations the way they demand seat belts. If we act now, the next headline about AI risk can be about lives saved, not lives lost.