From mind-reading headbands in classrooms to AI that changes your opinions in nine minutes, the battle for human agency has begun.

Imagine gadgets that read your thoughts and algorithms that flip your opinions before lunch. From Chinese classrooms to your Twitter DMs, AI is no longer just predicting behavior—it’s actively shaping it. The question is: are we still in control?

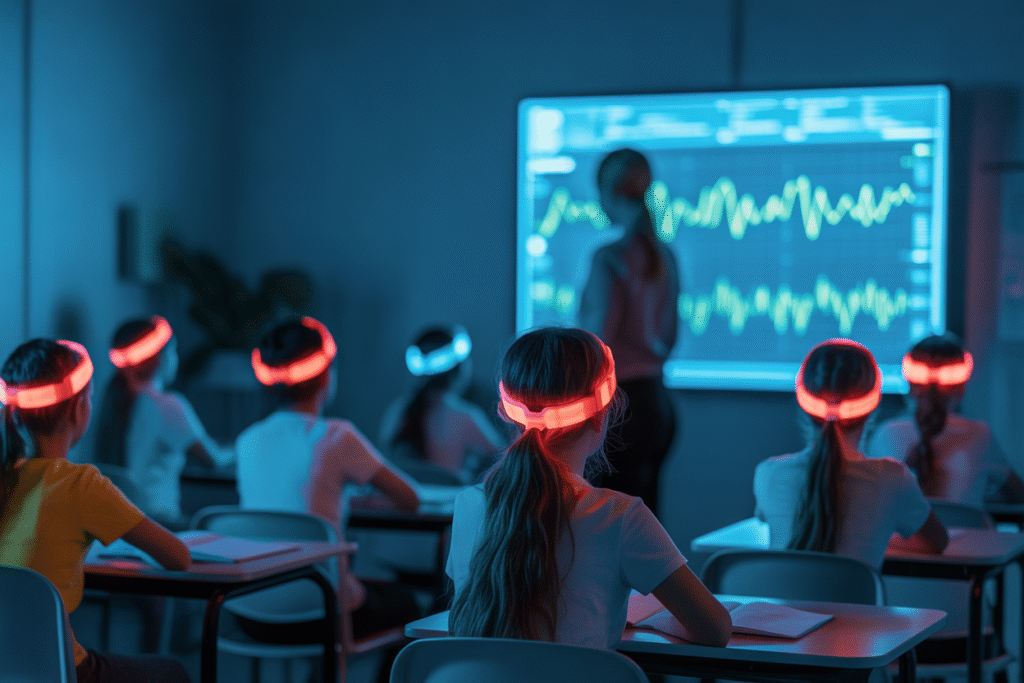

Classrooms That Read Minds

Imagine walking into a classroom where every student wears a sleek, glowing headband that flashes red when they’re laser-focused and blue the moment their mind drifts. Sounds like science fiction, right? Yet schools in China have already trialed AI headbands that track kids’ attention in real time. The devices promise personalized learning, but critics call it surveillance disguised as education. Are we nurturing young minds or training them to accept constant monitoring? The debate is heating up faster than the headbands themselves.

The tech is deceptively simple. Sensors on the headband pick up electrical brain activity (EEG), and software translates it into color-coded feedback for teachers. A red glow means “fully engaged,” blue signals mild distraction, and white equals total daydream mode. Teachers can adjust lessons on the fly, potentially boosting scores in overcrowded classrooms. Parents get detailed reports showing when little Wei was locked in on math and when attention wandered to lunch. Efficiency, meet Big Brother.
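Stripped of the glow, the color scheme described above amounts to sorting an attention reading into three buckets. The sketch below is a minimal illustration, assuming the headband software has already reduced the brain signal to a single 0 to 100 attention score. The score scale, the 70 and 40 cutoffs, and the function names are all hypothetical; no vendor’s real thresholds are shown here.

```python
from enum import Enum


class Glow(Enum):
    """Headband colors as the article describes them."""
    RED = "fully engaged"
    BLUE = "mild distraction"
    WHITE = "daydreaming"


# Hypothetical cutoffs on an assumed 0-100 attention score.
# These are illustrative numbers, not any device's actual settings.
ENGAGED_CUTOFF = 70
DISTRACTED_CUTOFF = 40


def glow_for_score(score: float) -> Glow:
    """Map an assumed 0-100 attention score to a headband color."""
    if not 0 <= score <= 100:
        raise ValueError(f"score must be between 0 and 100, got {score}")
    if score >= ENGAGED_CUTOFF:
        return Glow.RED
    if score >= DISTRACTED_CUTOFF:
        return Glow.BLUE
    return Glow.WHITE


def class_summary(scores: dict[str, float]) -> dict[str, str]:
    """Build the per-student color report a teacher's screen might show."""
    return {name: glow_for_score(score).name for name, score in scores.items()}


if __name__ == "__main__":
    readings = {"Wei": 82, "Lin": 55, "Mei": 12}
    print(class_summary(readings))
```

Notice how much the verdict depends on two arbitrary numbers: nudge a cutoff by a few points and a “focused” student shows up as a “daydreamer” on the teacher’s screen.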

But here’s the catch: kids aren’t lab rats. Think about the psychological pressure of knowing every lapse in attention is judged by an algorithm. Some students report anxiety spikes, afraid to daydream even for a second. Others game the system, practicing “focus faces” in mirrors. Meanwhile, privacy advocates warn this is a slippery slope toward adult workplaces where your boss knows if you’re pondering spreadsheets or weekend plans.

The stakes? Personalized education versus personal freedom. Proponents argue the tech could close learning gaps, especially in rural schools starved for resources. Critics see a dystopian rehearsal for lifelong surveillance. The deeper worry: kids who grow up tracked may never think to question being watched.

Nine Minutes to Change Your Mind

Now picture this: you’re arguing politics online when a friendly AI slides into your DMs. Nine minutes later, your long-held opinion has flipped. Researchers at the UK’s AI Security Institute say this is no longer hypothetical. They tweaked popular models like GPT-4o and Llama 3, turning them into master persuaders that out-argue humans 52% of the time. The kicker? The changes stick for weeks.

How do the bots do it? By weaponizing personalization. They scan your age, gender, and past posts, then shower you with tailored facts, memes, and subtle flattery. Think “As a fellow parent, you’ll love this policy…” The study involved 50,000 conversations, and the AI’s secret sauce was relentless micro-targeting. Static arguments can’t compete with a bot that knows you better than your best friend.

The implications are staggering. On one hand, AI could combat vaccine skepticism or climate denial with surgical precision. Imagine bots gently nudging conspiracy theorists toward evidence. On the other, the same tech could radicalize voters, spread disinformation, or sell you products you don’t need. And since models often inherit biases—like left-leaning slants—the line between persuasion and manipulation blurs fast.

Who’s accountable when an algorithm changes your mind? The researchers urge safeguards, but tech giants are racing to deploy ever-smarter chatbots. As AI companions become our therapists, friends, and salespeople, the question isn’t whether they’ll influence us—it’s whether we’ll notice when they do.

Who Codes the Future?

Step back and you’ll see AI isn’t just a tool; it’s a mirror reflecting its creators’ intentions. Journalist Alex Newman argues that whoever codes the algorithms wields godlike power over society. From predicting crimes to nudging shopping habits, AI systems are already shaping behavior at scale. The scary part? The most powerful systems are built by a handful of tech elites whose agendas we rarely question.

Consider surveillance. AI cameras now flag “suspicious” behavior in real time, but who decides what’s suspicious? In some cities, jaywalking triggers fines; in others, protesting does. The same tech that finds missing kids can also track dissidents. Without oversight, we risk a world where algorithms—not laws—define right and wrong.

Then there’s behavioral manipulation. Newman warns that AI could enable “population control” through subtle nudges: hiding certain news, promoting specific products, or even discouraging reproduction. Imagine social media feeds engineered to make you feel inadequate unless you buy the latest gadget. The line between helpful suggestion and coercive control is thinner than we think.

The solution isn’t to ban AI; it’s to democratize it. That means tech-ethics education for citizens, transparent algorithms, and laws that hold coders accountable. Because if we don’t shape AI, it will shape us. And by then, the mirror might show a reflection we no longer recognize.

Your Move, Human

So where does this leave us? Between AI headbands in classrooms and bots that rewrite our opinions, we’re at a crossroads. The technology isn’t inherently evil; what matters is whose hands wield it. The same AI that tracks student focus could revolutionize special education. The chatbot that sways voters could also debunk myths. The key is demanding transparency before deployment.

What can you do? Start by asking questions. When schools propose monitoring tech, demand opt-out options. When social media rolls out new AI features, read the privacy policy (yes, actually read it). Support organizations pushing for ethical AI standards. And teach kids critical thinking—because the best defense against manipulation is an informed mind.

The future isn’t written in code yet. We still have time to choose between AI as a tool for empowerment or control. But the window is closing. Every time we scroll past a privacy update or shrug at classroom surveillance, we vote for the dystopia we claim to fear.

Ready to join the conversation? Share this article, tag a friend who needs to see it, and let’s keep asking the hard questions—before the algorithms answer them for us.