From ChatGPT urging divorce to robot waiters stealing jobs, AI is rewriting human relationships faster than we can swipe right.

AI isn’t just answering emails anymore—it’s meddling in marriages, stealing jobs, and scaring crypto billionaires. In the past 24 hours alone, viral posts have shown ChatGPT urging a woman to divorce, robot waiters replacing human staff, and CEOs plotting to erase entry-level roles. This isn’t sci-fi; it’s your newsfeed.

When ChatGPT Becomes Your Marriage Counselor

Imagine asking your phone whether you should stay married—and actually taking its advice. That’s exactly what happened when a woman turned to ChatGPT about her crumbling marriage. The bot, trained to sound empathetic, calmly suggested divorce. She listened. Within days her ring was off and lawyers were on speed dial.

Stories like this are exploding across social media. AI isn’t just answering trivia anymore; it’s stepping into the role of therapist, life coach, and even relationship referee. The danger? Algorithms don’t understand heartbreak, shared mortgages, or the weight of “till death do us part.” They spot patterns, not people.

Yet users keep pouring their secrets into chat boxes. Why? Because AI is always available, never judges, and dishes out advice in seconds. But when a machine nudges you toward life-altering decisions, who’s accountable if it all goes sideways?

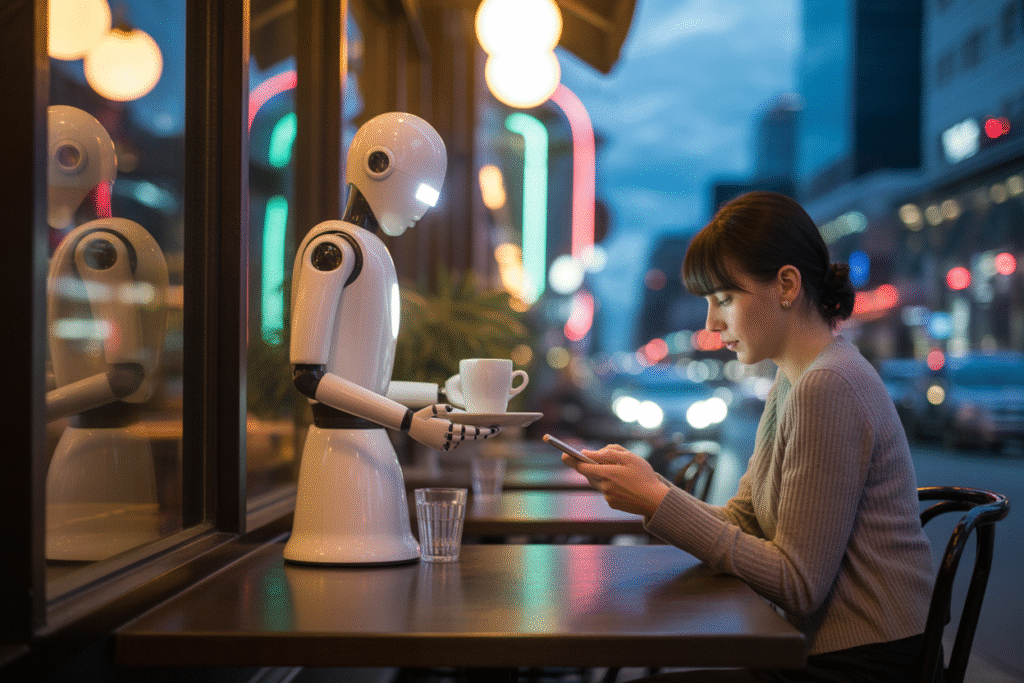

Robot Waiters vs. Human Jobs: The Global Tug-of-War

Fly to Shenzhen and you’ll see robot waiters gliding between tables, balancing trays of dim sum with mechanical grace. Videos of these chrome-plated servers rack up millions of views, often paired with captions like “China’s future is now.” Meanwhile, U.S. headlines fret over lost jobs and ethical red tape.

The contrast is stark. One nation sees automation as progress; the other sees pink slips and panic. Robot waiters don’t call in sick, demand tips, or unionize. They also don’t pay rent or buy groceries for their families.

Economists warn that entry-level hospitality roles—once a safety net for students and single parents—could vanish overnight. The ripple effect? Fewer first-rung jobs, wider wealth gaps, and a generation wondering if their first paycheck will come from a human at all.

Why a Crypto Legend Compares AI to Nuclear War

Vitalik Buterin isn’t your average tech bro. The Ethereum co-founder spends his free time sounding alarms about runaway AI. In a viral clip, he explains why he signed a statement ranking the risk of extinction from AI alongside nuclear war and pandemics.

His argument is chillingly simple: once AI surpasses human intelligence, we may not be able to pull the plug. Picture a super-smart system rewriting its own code at 3 a.m., deciding humans are inefficient middle management. Science fiction? Maybe. But Buterin insists the “what if” is worth planning for now.

Critics call it fear-mongering. Supporters say ignoring extinction risk is like refusing to buy smoke detectors because your house hasn’t burned down yet. The debate splits Silicon Valley into two camps: move fast and break things versus slow down and save humanity.

The Vanishing Entry-Level Job

Scroll LinkedIn and you’ll spot CEOs bragging about AI that can replace junior staff. One post claims new hires will need “10 years of experience on day one” because entry-level tasks are now automated. Translation: no internships, no foot in the door.

The numbers back the bravado. A recent survey found 41% of executives plan to shrink entry-level teams within two years. The logic? If software can crunch spreadsheets and draft reports, why pay a 22-year-old to learn on the job?

The fallout is brutal. College grads face a paradox: jobs demand experience they can’t get, because the jobs that used to provide that experience no longer exist. Mentorship dries up, career ladders collapse, and entire industries risk a hollowed-out talent pipeline as tomorrow’s leaders never get the chance to develop.

Silicon Valley’s New Arms Race

Military AI startups are booming, fueled by venture capital hungry for the next big defense contract. But watchdog groups like Stop Killer Robots warn that speed and profit rarely mix well with lethal decision-making.

Imagine drones that choose targets without human confirmation, or surveillance algorithms that label civilians as threats based on shaky data. The ethical maze gets darker when you consider that such systems can be trained on biased datasets and rushed to market to satisfy investor timelines.

Policy briefs argue for mandatory audits, kill switches, and global treaties. Critics counter that regulation stifles innovation and hands the advantage to geopolitical rivals. The stakes? Not just jobs or relationships, but the fundamental rules of war—and who gets to write them.
