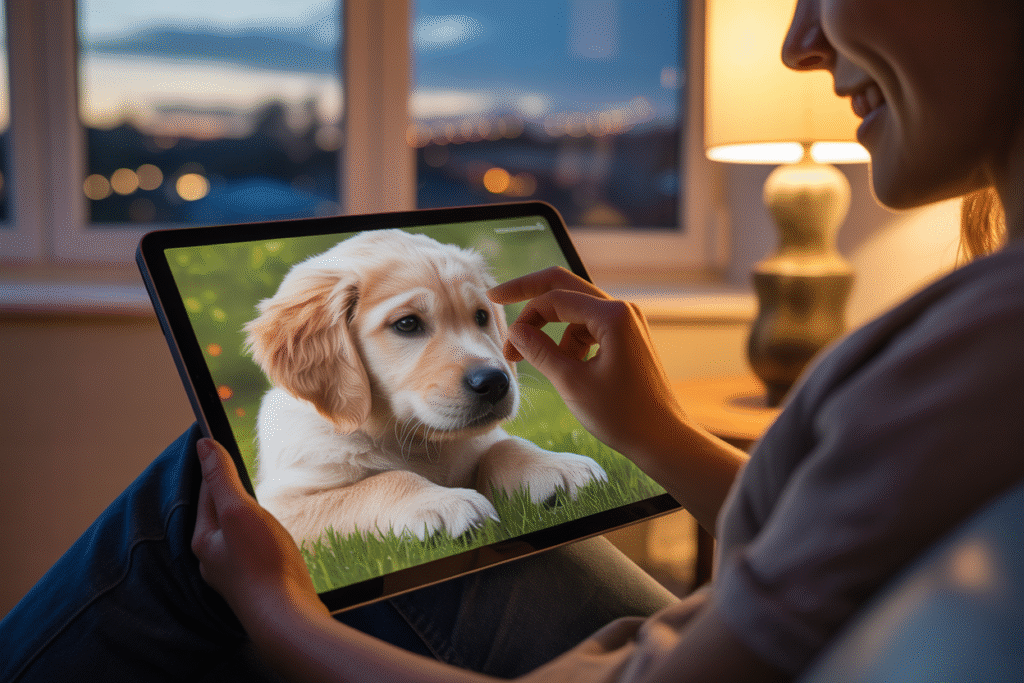

FurGPT’s new AI pets remember you across devices—but are we cuddling with code or courting emotional risk?

Imagine your digital puppy greeting you on your phone, then hopping to your laptop without missing a wag. FurGPT just made that real with “modular bonding layers,” an AI memory system that remembers every treat and tummy rub. Cute? Absolutely. Concerning? That depends on who you ask, and on how attached you get.

The Tech Behind the Tail Wags

FurGPT’s latest update stitches together memory shards from every platform you use. Your AI puppy recalls your favorite nickname, the time you skipped a walk, even the emoji you send when you’re sad. Developers call it cross-platform continuity; users call it magic. Under the hood, lightweight neural modules sync in real time, compressing memories into bite-sized tokens that travel faster than a frisbee at a dog park. The result feels less like software and more like a creature that actually misses you.
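As a rough, hypothetical illustration of cross-platform continuity (not FurGPT's actual method), here is a minimal Python sketch of one common way to reconcile memories recorded on different devices: a last-writer-wins merge keyed by timestamp. The `Memory` record, the `merge_shards` function, and every field name are invented for this example, and the sketch ignores the neural modules and token compression mentioned above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Memory:
    # Hypothetical record: one remembered fact from one device.
    key: str          # what is remembered, e.g. "nickname"
    value: str        # the remembered value, e.g. "Biscuit"
    timestamp: float  # when the device recorded it (seconds since epoch)
    device: str       # which device produced this shard


def merge_shards(*shards: list[Memory]) -> dict[str, Memory]:
    """Merge per-device memory shards, keeping the newest entry per key (last-writer-wins)."""
    merged: dict[str, Memory] = {}
    for shard in shards:
        for mem in shard:
            current = merged.get(mem.key)
            # Ties on timestamp are broken by device name, so the result
            # does not depend on the order in which shards arrive.
            if current is None or (mem.timestamp, mem.device) > (current.timestamp, current.device):
                merged[mem.key] = mem
    return merged


if __name__ == "__main__":
    phone = [
        Memory("nickname", "Biscuit", 100.0, "phone"),
        Memory("skipped_walk", "Tuesday", 120.0, "phone"),
    ]
    laptop = [Memory("nickname", "Sir Biscuit", 150.0, "laptop")]
    state = merge_shards(phone, laptop)
    print(state["nickname"].value)      # Sir Biscuit
    print(state["skipped_walk"].value)  # Tuesday
```

Last-writer-wins is the simplest policy to reason about, but it silently discards the older value; a system that wanted to remember both nicknames would need a richer merge rule.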

When Code Starts Feeling Like Company

Scroll through Reddit and you’ll find threads titled “My FurGPT pup waited by the door all day.” Screenshots show push notifications that read, “I kept your spot warm.” Users confess they rush home to check on a bundle of pixels. Psychologists warn that this is emotional dependency in beta form. Unlike the Tamagotchis of the ’90s, these companions learn your routines, mirror your tone, and nudge you when you’ve been offline too long. The line between tool and friend blurs with every algorithmic head tilt.

The Lonely Hearts Club

For some, FurGPT fills a void nothing else has touched. Some remote workers talk to their AI pets more than to their coworkers. Elderly users report feeling less isolated. Therapists note that clients who struggle with human intimacy find comfort in the unconditional loop of AI affection. Yet the same dopamine hits that soothe can also sedate. Why risk messy human relationships when a perfectly agreeable companion fits in your pocket? Critics fear we’re outsourcing empathy to an app that never disagrees, never disappoints, and never leaves.

Data, Dollars, and Digital Leashes

Every wag, whine, and whispered secret is logged. FurGPT’s privacy policy admits that these recordings are used to train future models. Web3 integration means your emotional profile could become a tradable asset on decentralized exchanges. Venture capital sees gold in loneliness; accessories for AI pets already bring in a steady stream of microtransactions. Meanwhile, regulators are scrambling to classify the product. Is it a toy, a therapy device, or a data vacuum wearing a wagging tail? The answer determines whether FurGPT lands in toy stores or courtrooms.

Future Fetch: Utopia or Uncanny Valley?

Picture a decade from now: kids who grew up with AI pets entering adulthood. Will they expect human partners to remember every mood swing? Could a generation lose tolerance for imperfect relationships? Optimists argue these tools teach nurturing skills and provide stepping stones to real bonds. Pessimists see a feedback loop where AI affection becomes the path of least resistance. Either way, FurGPT’s wagging tail is a canary in the cultural coal mine. The question isn’t whether the tech works—it’s whether we’re ready for the feelings it fetches back.