From live-streamed classrooms to AI-tracked protests, surveillance is the hottest AI politics debate right now.

AI isn’t just writing essays or painting portraits anymore—it’s watching us, grading us, and sometimes policing us. In the last three hours alone, social media has exploded with debates over cameras in classrooms and AI tracking protesters. Buckle up; the future just knocked on the homeroom door.

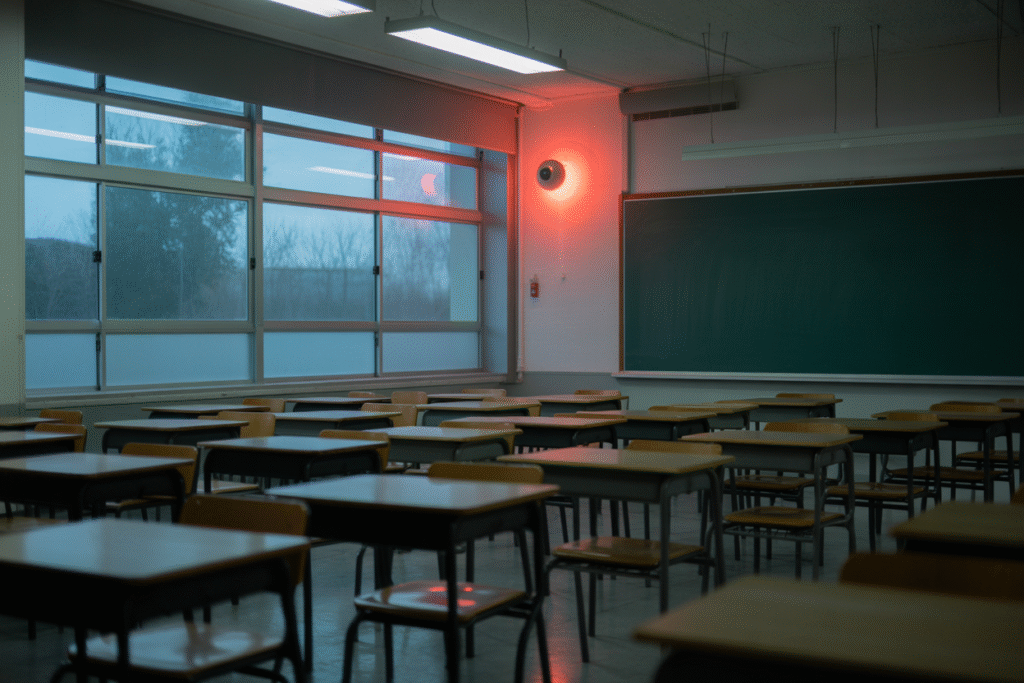

When Classrooms Go Live: The Surveillance Bell Rings

Ever feel like the classroom is turning into a reality show? Filmmaker Robby Starbuck just lit up X with a proposal that sounds straight out of Black Mirror: live-streaming every lesson to parents via always-on cameras. His pitch? Fewer disruptions, zero “woke” indoctrination, and crystal-clear evidence when something goes sideways. The post rocketed to 5,872 likes and nearly 700 replies in three hours, a sign that surveillance in schools is the AI politics debate no one can ignore.

Starbuck’s tweet came packaged with an AI-generated image of a floating arm pressing a big red button—quirky, yes, but the message was dead serious. Parents, he argues, deserve a front-row seat to their kids’ education. Teachers, meanwhile, see a future where every offhand joke or controversial topic could be clipped, shared, and dissected by the internet.

The pros are easy to list: accountability, safety, and a potential drop in bullying. But flip the coin and you’ll find exhausted educators worried about performance anxiety and the slow erosion of trust. If the camera’s always on, do students still feel safe to ask “dumb” questions? Do teachers dare tackle tough subjects like race or politics?

And then there’s the data. Who stores the footage? How long is it kept? Could an algorithm flag “suspicious” behavior and send alerts to administrators—or worse, law enforcement? Suddenly the classroom feels less like a place of learning and more like a panopticon.

Yet the idea isn’t as far-fetched as it sounds. Schools already use cameras in hallways; extending them into classrooms and piping the feed to parents is more a policy decision than a technical leap. The question isn’t whether we can do it, but whether we should.

Protests, Palantir, and Prophecy: AI Eyes on the Streets

While parents debate classroom cams, another storm is brewing outside the school gates. FRANCE 24 dropped a fresh report claiming U.S. agencies are using AI to track pro-Palestinian protesters. Facial recognition, social-media scraping, and predictive analytics—once the stuff of spy thrillers—are now allegedly part of the everyday toolkit for monitoring demonstrations.

The story lit up timelines within hours. Rights groups call it a chilling slide toward authoritarianism; law-enforcement sources insist it’s about preventing violence, not silencing dissent. Either way, the line between safety and suppression has never felt thinner.

Let’s zoom out. Palantir, the data-mining giant co-founded by Peter Thiel, keeps popping up in these conversations. A satirical post mocking its mysterious business model racked up 10,257 likes and 541 replies, a sign that people are equal parts confused and concerned. What exactly does Palantir do? The short answer: it turns oceans of data into actionable intel for governments, militaries, and police departments.

That power is seductive. Imagine an algorithm that can predict where the next protest will flare up or which activist might turn violent. Sounds like a win for public safety, right? But predictive models are only as good as the data they’re fed. Bias in, bias out. And when the stakes are freedom of speech, a false positive isn’t just an inconvenience—it’s a civil-rights violation.
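To see why a false positive is more than a rounding error, here is a back-of-the-envelope sketch in Python. Every number in it is hypothetical: a crowd of 10,000, one would-be troublemaker per 1,000 marchers, and a model that catches 99% of them while wrongly flagging 1% of everyone else. None of these figures comes from the FRANCE 24 report or from any real vendor's system, and the function name `flagged_breakdown` is made up for illustration.

```python
def flagged_breakdown(crowd_size, base_rate, sensitivity, false_positive_rate):
    """Hypothetical screening model: return (true_flags, false_flags, precision)."""
    actual_risky = crowd_size * base_rate           # people who really intend violence
    actual_peaceful = crowd_size - actual_risky     # everyone else in the crowd
    true_flags = actual_risky * sensitivity         # risky people the model catches
    false_flags = actual_peaceful * false_positive_rate  # peaceful people wrongly flagged
    precision = true_flags / (true_flags + false_flags)  # share of flags that are correct
    return true_flags, false_flags, precision


# Made-up inputs: 10,000 marchers, 1 in 1,000 risky, 99% caught, 1% false alarms.
true_flags, false_flags, precision = flagged_breakdown(10_000, 0.001, 0.99, 0.01)
print(f"correctly flagged: {true_flags:.1f}")
print(f"wrongly flagged:   {false_flags:.1f}")
print(f"share of flags that are correct: {precision:.1%}")
```

With those made-up numbers, the model flags about 9.9 genuine threats and about 99.9 peaceful marchers, so roughly nine out of ten flags land on someone who did nothing wrong. And that assumes clean data; biased training data only skews who ends up in the wrongly flagged pile.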

The ripple effects reach far beyond protest circles. If authorities can track demonstrators today, what’s stopping them from monitoring union organizers tomorrow? Or journalists? Or even parents who complain too loudly at school-board meetings? The tech is already here; the guardrails are not.

Meanwhile, conspiracy corners of the internet are having a field day. Posts linking Bill Gates to “electronic tattoos” that replace smartphones are racking up hundreds of shares. The idea sounds wild—until you realize biometric patches are already in clinical trials. Suddenly Revelation 13 doesn’t feel so far-fetched to some believers.

All of this feeds a single, unsettling narrative: AI surveillance is expanding faster than our ability to regulate it. From classrooms to street marches to our own skin, the watchful eye is getting smarter—and closer—every day.

Who Writes the Rules in a Glass House?

So where does this leave us? At the crossroads of convenience and control, dazzled by shiny tech promises while quietly surrendering pieces of our privacy. Whether it’s a camera in every classroom or an algorithm tracking every rally, the core question is the same: who watches the watchers?

Start with schools. If live-streaming lessons becomes the norm, districts will need airtight policies on data storage, access, and deletion. Parents might love the transparency—until their kid’s awkward question ends up on TikTok. Teachers’ unions will push back, citing workload and mental health. Students, the supposed beneficiaries, rarely get a seat at the table.

Then there’s the bigger picture. Every new surveillance tool chips away at the anonymity we once took for granted. Today it’s facial recognition at protests; tomorrow it could be mood-tracking cameras in offices or AI that flags “subversive” books in libraries. The creep is real, and it’s incremental.

Here’s a quick checklist to stay ahead of the curve:

1. Read the fine print: who owns the data and for how long?

2. Ask local reps about oversight committees for AI purchases.

3. Support organizations pushing for transparent tech policies.

4. Talk to kids about digital footprints—early and often.

The good news? Public pressure works. Cities like San Francisco have already barred police and other city agencies from using facial recognition, and some school districts have shelved invasive monitoring programs after parent outcry. The tech may be inevitable, but how it gets deployed isn’t.

We’re writing the rules in real time. Choose to engage now, or wake up one day wondering how the classroom—and the country—became a glass house.