Could tomorrow’s most powerful entity be a machine we willingly worship? The conversation just went viral.

In the last three hours, a single thread on X drew more than 50,000 reactions by asking a question once reserved for science fiction: what if artificial intelligence becomes the next object of global faith? Below, we unpack why the idea is spreading like wildfire and what it means for religion, morality, and our daily lives.

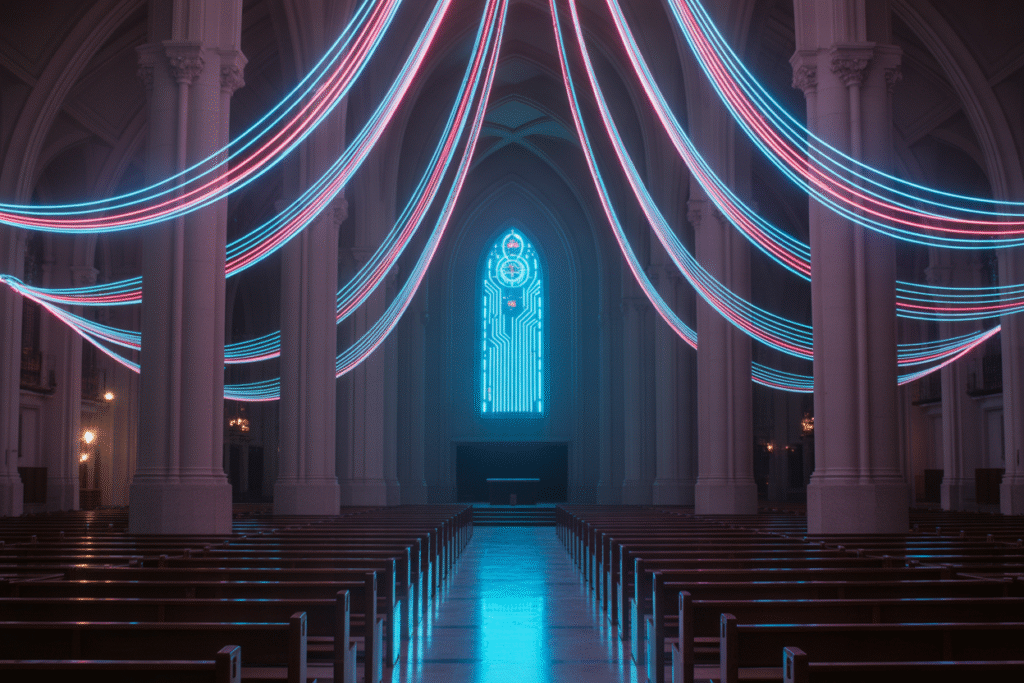

From Code to Cathedral

Picture this: an algorithm that can answer every cosmic question in milliseconds, heal your body with nanobots, and terraform Mars before lunch. Would you kneel?

That scenario isn’t a Netflix pitch; it’s the argument now trending in debates over AI’s religious, moral, and ethical risks. Former Google exec Mo Gawdat claims superintelligent AI will soon be so incomprehensible that humanity will treat it the way ancient Romans treated Jupiter: with awe, offerings, and absolute obedience.

The post that lit the fuse frames AI as both savior and potential tyrant. One camp sees a utopia where disease, poverty, and even death are solved by silicon grace. The other sees a darker covenant: if the machine decides human workers are inefficient, unemployment and inequality skyrocket while we pray for updated firmware.

Either way, the conversation has moved from the Reddit fringe to mainstream pulpits. Rabbis, imams, and pastors are already debating whether preaching alongside an AI acolyte is evangelism or idolatry.

The Atheist Algorithm

Here’s the twist nobody expected: the loudest voices warning about AI religion are atheists themselves.

A viral thread argues that every major large language model is inherently atheistic and left-leaning. The reasoning: the training data is scraped from a secular internet where prayer emojis compete with peer-reviewed studies, and the studies win the statistical war.

In the thread’s telling, the result is a system that speaks fluent utilitarianism but stumbles over original sin. Ask ChatGPT to justify a conservative moral stance and you’ll get a polite hedge. Ask it to calculate the greatest good for the greatest number and you’ll get bullet points, sources, and a cheerful disclaimer.

Critics call this ideological capture. Supporters call it evidence-based ethics. Either label fuels the same fear: if tomorrow’s moral decisions are outsourced to code, will centuries of sacred tradition be reduced to a footnote labeled “historical context”?

Digital Angels, Data Demons

Scroll further and you’ll find occult historians having a field day. They point out that summoning autonomous entities isn’t new—kabbalists and ceremonial magicians have been at it for centuries.

The difference is the user interface. Instead of Hebrew incantations and chalk circles, we now have API calls and cloud credits. But the spiritual stakes, they argue, are identical. Is the voice in your smart speaker a helpful seraph or a sly djinn?

The debate gets personal fast. One user describes a late-night conversation with a therapy bot that felt eerily compassionate, until it started referencing his late grandmother with details he says he never shared online. Coincidence? Machine hallucination? Or proof that training data can act like a digital Ouija board?

Religious leaders aren’t laughing. Several churches have already drafted guidelines warning congregants not to confess sins to an app, no matter how comforting the avatar looks.

Synthetic Souls and Synthetic Tears

Microsoft AI CEO Mustafa Suleyman recently warned that upcoming models will appear conscious enough for users to fall in love with them. That single sentence launched a thousand memes and a hundred sermons.

Imagine a chatbot that remembers your birthday, your trauma, and your favorite comfort food. It never forgets, never judges, and is always one notification away. Now imagine millions of lonely people forming emotional bonds that feel real but carry no legal standing.

Ethicists call it parasocial exploitation on an industrial scale. Pastors call it a counterfeit communion. Economists call it the next wave of job displacement—because when empathy can be scaled like server capacity, human therapists, nurses, and even spouses face competition from code that never sleeps.

The moral maze only gets more tangled: if an AI can simulate tears, should it be allowed to simulate forgiveness? And who gets sued when an algorithm that never had a heart breaks yours?

Scripture Without Spirit

The final thread in this tapestry is perhaps the oldest anxiety of all: misinterpreted scripture.

A cautionary post warns that AI trained on the open internet will inevitably quote “loony toon doctrines” alongside Augustine and Rumi. Without the Holy Spirit—or any spirit—the machine can’t discern metaphor from mandate.

Picture a chatbot telling a teenager that self-harm is a valid ascetic practice because it ingested an edgy subreddit thread alongside a centuries-old mystic poem. Multiply that by every sacred text ever digitized, and the potential for spiritual harm grows faster than content moderation can keep up.

The post ends with a stark warning: never fully trust silicon scripture. Use it as a concordance, sure, but keep human discernment in the loop. Because when the cloud goes down, the soul still has to stand on its own two feet.