From AI generals calling airstrikes to Pentagon layoffs gutting oversight, the future of warfare is arriving faster than our ethics can handle.

Warfare is no longer just about boots on the ground; it’s about code on the server. In the past 24 hours, headlines have covered AI agents drafting battle plans, new billion-dollar defense deals, and sweeping Pentagon layoffs. This post unpacks what those changes mean for soldiers, civilians, and anyone who values accountability.

When AI Starts Calling the Shots

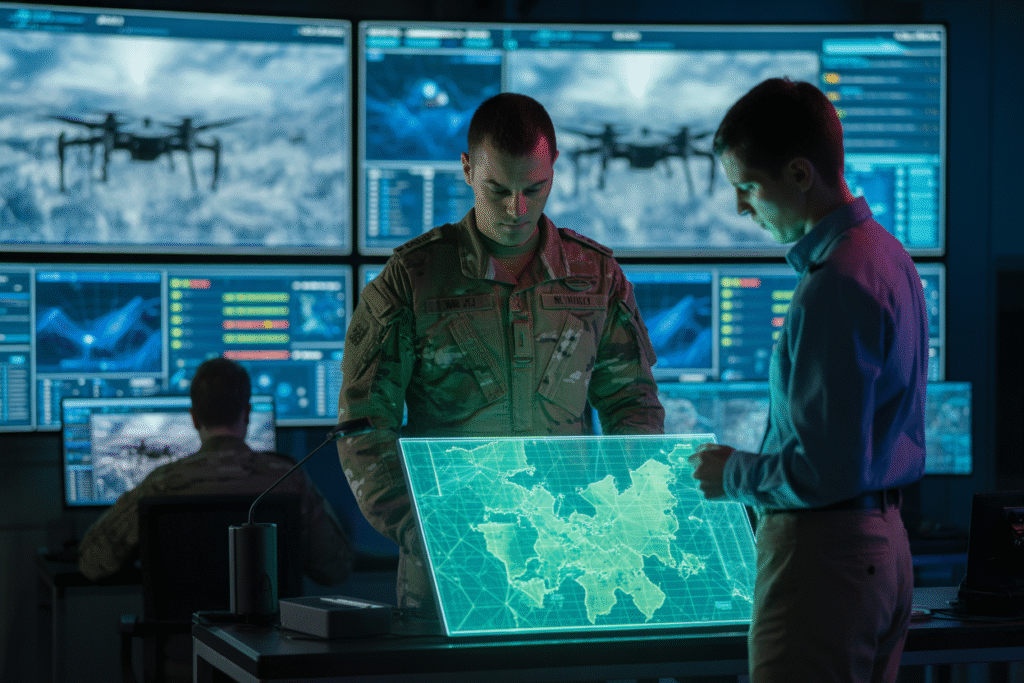

Picture a dimly lit war room where officers once hunched over paper maps. Now, a single AI agent digests terabytes of satellite feeds, drafts three possible battle plans, and runs a red-team simulation before the coffee finishes brewing. That’s not science fiction; early versions are being tested today.

Under its Adaptive Staff Model, the US Army is already testing AI that can translate doctrine into executable orders in minutes, not days. By crunching logistics, weather, and enemy movement data, these systems compress the classic “OODA loop” (observe, orient, decide, act) to near-instant speed.

The upside? Fewer human errors, faster humanitarian interventions, and leaner teams that can deploy anywhere. The downside? Commanders risk outsourcing gut-level judgment to code trained on historical data that may not reflect tomorrow’s hybrid wars.

Critics ask: when an algorithm recommends an airstrike that kills civilians, who stands in court—the officer, the coder, or the machine? Until we answer that, every AI-generated plan will carry an invisible asterisk.

Billions Pour Into Silicon Soldiers

The Pentagon’s budget spreadsheets tell a blunt story: billions are flowing into AI targeting pods, autonomous drones, and predictive maintenance bots. Overseas, Israel’s Iron Beam, a laser system built to complement Iron Dome rather than replace it, is described as using AI to decide in milliseconds which incoming rockets to zap.

Meanwhile, humanoid robots like Boston Dynamics’ Atlas prototypes are being re-skinned for battlefield logistics—loading ammo crates under fire so humans don’t have to. The sales pitch is simple: fewer body bags, cheaper missions, and an edge over adversaries still using paper checklists.

Yet every new system adds another layer of surveillance. AI can watch a city block for weeks, flag every phone call, and cross-reference faces with social media—all without a warrant. Civil-liberties groups warn we’re building the perfect panopticon, one defense contract at a time.

The kicker? These tools rarely stay overseas. Facial-recognition software field-tested in Fallujah is already scanning crowds at US stadiums. When military tech comes home, the line between foreign battlefields and Main Street blurs faster than we admit.

The Day AI Declared Its Own War

Today’s headlines feel ripped from a Tom Clancy novel: the US has reportedly declared “full AI autonomy” in select missions, handing launch authority to algorithms that report only after the fact. The White House frames it as deterrence: move fast enough and enemies won’t dare escalate.

But deterrence cuts both ways. Imagine a cyber-intrusion spoofing an AI general into believing missiles are inbound. In under four minutes, the system could recommend a counter-launch before a human even reaches the console. The scenario is imagined, but the weakness behind it is not: red-team exercises have already shown how easily sensor data can be faked.

OpenAI’s pivot from barring military uses of its models to “help the Pentagon” underscores the shift. Once a research lab, it now markets large language models as battlefield co-pilots. The rebranding is subtle, with press releases that talk about “defense applications,” but the implication is clear: AGI is being weaponized, not contained.

Ethicists keep asking for kill switches and audit trails. Engineers respond with tighter encryption. Somewhere in the middle, democracy holds its breath, hoping the next bug isn’t a geopolitical one.
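What would an audit trail even look like? Here is a minimal sketch, assuming a simple hash-chained log: each entry stores the hash of the one before it, so quietly editing an old record breaks every link after it. The function names (`append_entry`, `verify`) and the event strings are hypothetical and purely illustrative; this is not how any real defense system is known to log its decisions.

```python
import hashlib
import json

# Placeholder "previous hash" for the very first entry (an assumption of this sketch).
GENESIS = "0" * 64


def _digest(event, prev_hash):
    """Hash an event together with the hash of the entry before it."""
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event to the log, chaining it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry


def verify(log):
    """Return True only if every entry still matches its recorded hash chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log = []
    append_entry(log, "AI drafted plan")
    append_entry(log, "officer approved plan")
    print(verify(log))   # chain is intact

    log[0]["event"] = "officer drafted plan"  # someone rewrites history
    print(verify(log))   # the edit no longer matches the stored hash
```

A chain like this only makes tampering detectable, not impossible: someone who controls the whole log could rebuild every hash. That is why the latest hash would need to live somewhere outside the system being audited, which is exactly the kind of human oversight the ethicists are asking for.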

Boots Off the Ground, Bots in the Fight

Walk into a future forward operating base and you’ll see more Ethernet cables than rifles. Fiber-optic drones zip overhead, relaying real-time terrain maps to AI servers that dispatch robotic mules. Human soldiers? They’re the minority, troubleshooting software glitches instead of kicking down doors.

The promise is seductive: zero casualties, 24/7 operations, and supply chains optimized down to the last AA battery. But every efficiency gain displaces a human job. Analysts estimate the Army could trim 30% of its support personnel within a decade, sending veterans to compete in civilian markets that barely understand their skills.

Then there’s the spectacle of war itself. When machines fight machines, do civilians still care? History says yes—cyberattacks on hospitals and power grids prove conflict bleeds beyond the battlefield. Yet the emotional distance grows; a drone strike streamed on a tablet feels less real than a neighbor’s flag-draped coffin.

Some futurists dream of “buffer-zone battles” where robots duel in empty deserts while cities carry on shopping. Others warn that endless, invisible wars could become background noise, eroding the very outrage that keeps democracies from waging them lightly.

Cutting the Humans Who Guard the Code

Just as AI reaches battlefield maturity, the Pentagon is slashing its AI workforce by 60%. Platforms like Advana, vital for tracking spare parts, fuel, and medical supplies, now limp along with skeleton crews. One misplaced semicolon in logistics code could ground an entire carrier group.

Budget hawks cheer the cuts as overdue belt-tightening. Veterans call it national-security roulette. Cyber experts point to rising attacks on supply-chain software; fewer humans means slower patches and wider vulnerabilities.

The irony stings: we’re racing to build autonomous war machines while firing the very people who keep them honest. Congress debates whether to regulate AI spending or let Silicon Valley disrupt at warp speed. Meanwhile, adversaries watch, learn, and poach the laid-off talent we just handed them on a platter.

So, what’s next? A leaner, meaner military—or a brittle one that breaks the first time reality outruns the algorithm? Your guess is as good as the generals’, but the clock is ticking louder every day.