From hospital wards to courtrooms, AI is making calls that change lives. What if every decision came with a tamper-proof receipt?

Imagine a doctor trusting an AI diagnosis that later proves wrong, or a judge relying on algorithmic risk scores that quietly misfire. When the stakes are life-altering, “trust us” feels thin. A new movement, verifiable AI, wants to replace blind faith with cryptographic receipts you can audit in seconds. Here’s why that shift matters, who’s resisting, and how it could reshape everything from medicine to finance.

The Problem: Post-it Note Policies and After-the-Fact Audits

Right now, AI governance often boils down to a glossy policy PDF and a yearly audit that catches problems only after something breaks. When a model hallucinates in a court filing or misdiagnoses a patient, fingers point everywhere and nowhere at once. The audit trail is patchy, the model version is undocumented, and the training data is a black box. Regulators are left chasing smoke while the public loses confidence one headline at a time. Verifiable AI flips the script by baking proof into every inference, turning “we think the right model ran” into “here’s the math that proves which model ran, on what, and when.”

The Solution: Cryptographic Receipts for Every Inference

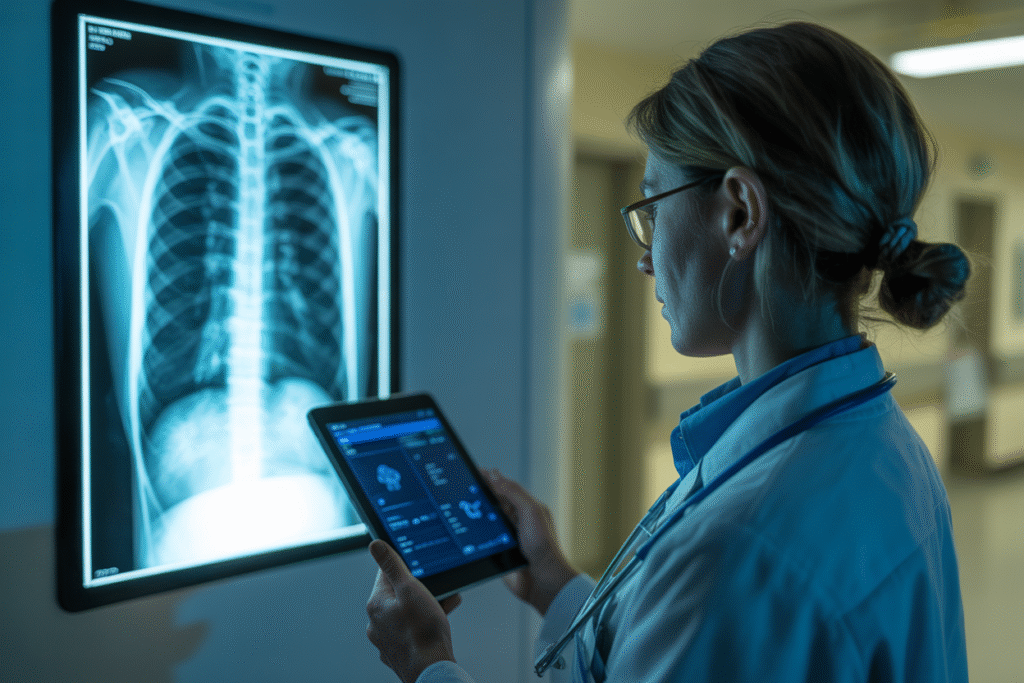

Picture this: each time an AI model makes a prediction, it spits out a tiny cryptographic receipt, a kind of digital wax seal. That receipt locks in the exact model hash, the parameters used, and even the environmental conditions at inference time. Doctors could scan a QR code beside an X-ray and see precisely which version of the model flagged a tumor. Judges could verify that a risk-assessment tool used the same algorithm on two similar defendants. The receipts are timestamped, tamper-evident (alter any sealed field and the seal no longer checks out), and lightweight enough to store on-chain or in a hospital’s existing database.
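What might such a receipt look like in practice? Here is a minimal sketch in Python, under a simple design of our own rather than any particular product’s format: the model’s serialized weights and the raw input are reduced to SHA-256 hashes, bundled with the parameters, environment, output, and a timestamp, and sealed with an HMAC. The field names, the helpers `create_receipt`, `verify_receipt`, and `same_model`, and the demo key are all hypothetical. HMAC is a stand-in chosen because it ships with Python’s standard library; a real deployment would more likely use a public-key signature, so courts or regulators could verify receipts without holding the secret, plus a trusted timestamping service instead of the issuer’s own clock.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

# HYPOTHETICAL demo key. HMAC needs a shared secret; a real deployment would
# more likely sign with a private key so anyone with the public key can verify.
DEMO_KEY = b"demo-key-not-for-production"


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a hex string."""
    return hashlib.sha256(data).hexdigest()


def canonical(obj: dict) -> bytes:
    """Serialize deterministically (sorted keys, no spaces) so equal bodies hash equally."""
    return json.dumps(obj, sort_keys=True, separators=(",", ":")).encode()


def create_receipt(model_bytes: bytes, params: dict, environment: dict,
                   input_bytes: bytes, output: str,
                   key: bytes = DEMO_KEY, timestamp: Optional[int] = None) -> dict:
    """Bundle what ran, on what, and when, then seal the bundle with an HMAC."""
    body = {
        "model_hash": sha256_hex(model_bytes),   # identifies the exact weights
        "params": params,                         # e.g. a decision threshold
        "environment": environment,               # e.g. runtime versions
        "input_hash": sha256_hex(input_bytes),    # the raw input is not stored
        "output": output,
        # Issuer's own clock; a trusted timestamp service would be stronger.
        "timestamp": int(time.time()) if timestamp is None else timestamp,
    }
    seal = hmac.new(key, canonical(body), hashlib.sha256).hexdigest()
    return {"body": body, "seal": seal}


def verify_receipt(receipt: dict, key: bytes = DEMO_KEY) -> bool:
    """Recompute the seal; any edit to a sealed field makes this return False."""
    expected = hmac.new(key, canonical(receipt["body"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, receipt["seal"])


def same_model(r1: dict, r2: dict) -> bool:
    """True if both receipts record the same model hash, i.e. identical weights."""
    return r1["body"]["model_hash"] == r2["body"]["model_hash"]


if __name__ == "__main__":
    weights = b"example serialized model weights"
    env = {"python": "3.12"}
    r1 = create_receipt(weights, {"threshold": 0.5}, env, b"defendant A features", "low risk")
    r2 = create_receipt(weights, {"threshold": 0.5}, env, b"defendant B features", "high risk")
    print(verify_receipt(r1))  # True
    print(same_model(r1, r2))  # True
    r1["body"]["output"] = "high risk"  # tamper with a sealed field
    print(verify_receipt(r1))  # False
```

The demo mirrors the judge scenario: matching `model_hash` values show the same weights handled both defendants, while editing any sealed field after the fact makes verification fail. Hashing the input rather than storing it keeps raw records out of the receipt itself.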

What does this mean day to day? Fewer lawsuits rooted in “he said, she said.” Faster regulatory approvals, because agencies can replay any decision in minutes. And, crucially, a public that starts trusting AI again because transparency is no longer optional; it’s automatic.
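Replaying a decision could look something like the following sketch, which assumes the auditor is handed the model weights and the original input, checks both against the hashes in a receipt, and then reruns the model to see whether the recorded output comes back. The toy linear model, its weights, and the `make_receipt` and `replay` helpers are hypothetical illustrations, and the sketch assumes the receipt’s seal has already been verified.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a hex string."""
    return hashlib.sha256(data).hexdigest()


def digest(values: list) -> str:
    """Hash a list of numbers via a deterministic JSON encoding."""
    return sha256_hex(json.dumps(values).encode())


def toy_risk_model(weights: list, features: list, threshold: float) -> str:
    """HYPOTHETICAL stand-in for a real model: weighted sum compared to a threshold."""
    score = sum(w * f for w, f in zip(weights, features))
    return "high risk" if score >= threshold else "low risk"


def make_receipt(weights: list, features: list, threshold: float) -> dict:
    """Record what a hypothetical inference service would capture at decision time."""
    return {"body": {
        "model_hash": digest(weights),
        "params": {"threshold": threshold},
        "input_hash": digest(features),
        "output": toy_risk_model(weights, features, threshold),
    }}


def replay(receipt: dict, weights: list, features: list) -> tuple:
    """Check supplied weights and input against the receipt, then rerun the model."""
    body = receipt["body"]
    if digest(weights) != body["model_hash"]:
        return False, "model version does not match receipt"
    if digest(features) != body["input_hash"]:
        return False, "input does not match receipt"
    recomputed = toy_risk_model(weights, features, body["params"]["threshold"])
    if recomputed != body["output"]:
        return False, f"replay gave {recomputed!r}, receipt says {body['output']!r}"
    return True, "decision reproduced"


if __name__ == "__main__":
    weights = [0.4, 0.35, 0.25]
    features = [0.9, 0.2, 0.5]
    receipt = make_receipt(weights, features, threshold=0.5)
    print(replay(receipt, weights, features))          # (True, 'decision reproduced')
    print(replay(receipt, [0.5, 0.3, 0.2], features))  # (False, 'model version does not match receipt')
```

Exact replay only works if inference is deterministic. Real models running on GPUs can produce slightly different floating-point results from run to run, so practical systems may need pinned environments or a tolerance-based comparison instead of strict equality.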

The Pushback: Complexity, Cost, and Corporate Secrets

Not everyone is cheering. Some vendors argue that embedding cryptographic proofs adds milliseconds to inference time and dollars to cloud bills. Others worry that receipts could leak proprietary model weights or sensitive training data. Privacy advocates add that receipts might expose patient records if they are not properly anonymized. Meanwhile, smaller clinics fear they’ll need new hardware just to keep up.

Yet early pilots tell a different story. One European hospital network cut diagnostic disputes by 40% after adopting verifiable AI, offsetting the extra compute cost with reduced legal exposure. A fintech startup found that investors lined up precisely because its fraud-detection model was auditable in real time. The lesson: short-term pain, long-term gain.

So where does that leave us? At a crossroads. We can keep patching trust with press releases, or we can build systems that prove themselves every single time. The tools exist. The question is who will pick them up first, and who will be left explaining why they didn’t.