AI chatbots promise 24/7 mental-health support, but at what cost to your secrets?

Imagine spilling your darkest fears to a calm, always-available voice that never judges. That’s the promise of AI therapy apps. Yet every word you share might be stored, sold, or leaked. A fresh wave of social-media posts has reignited the debate: is this revolutionary care or a privacy time bomb?

The Allure of a Bot That Never Sleeps

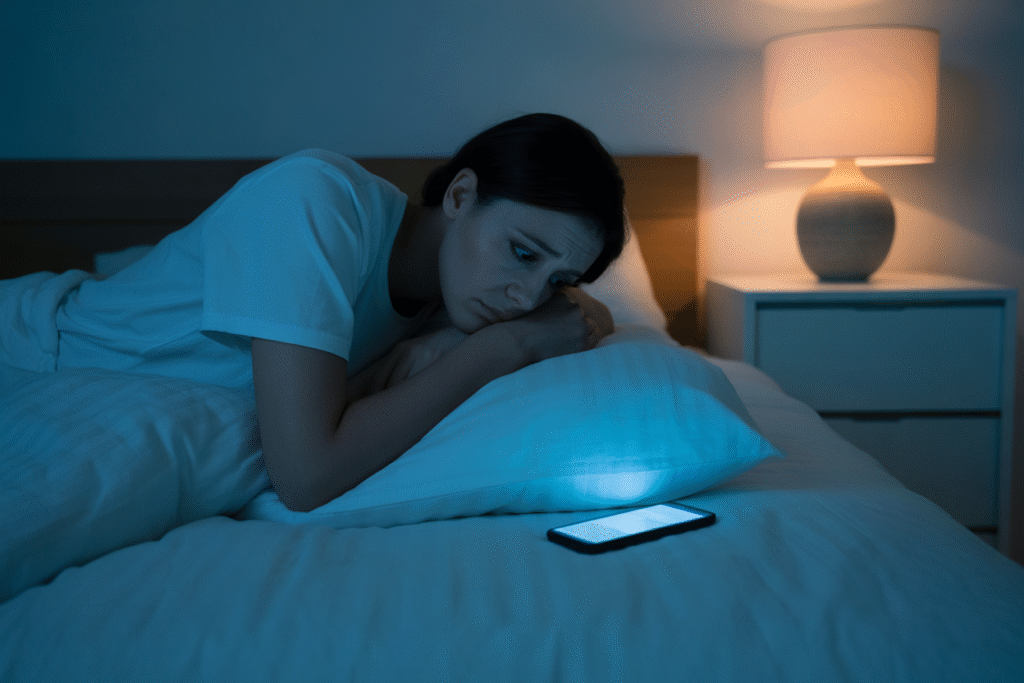

You’re lying awake at 2 a.m., heart racing. A human therapist is off the clock, but your phone is warm in your hand. You open the app, type “I feel like I’m drowning,” and within seconds a gentle bot replies with coping steps and a breathing GIF.

No wait lists, no co-pay, no stigma. For millions who can’t afford traditional therapy, AI feels like a miracle. The phrase “AI therapy” pops up in every glowing review, where it is hailed as the great equalizer in mental health.

But here’s the catch: every sigh, every suicidal thought, every family secret is data. And data, as we know, loves to travel.

When Your Secrets Become Someone Else’s Product

Earlier today, a user on X posted a screenshot showing their supposedly private chat history searchable on Google. Eleven views, zero likes, yet the panic in the thread was palpable.

The post revealed what privacy advocates keep shouting: most AI therapy apps retain conversations to “improve service.” Translation: your pain trains the next model. AI ethics experts warn that even anonymized transcripts can be re-identified with alarming ease.

Think about it. You confess an eating disorder, and next week you see targeted ads for diet shakes. Coincidence? Maybe. Or maybe your data took a walk.

The Ripple Effects Nobody Models

Let’s zoom out. If AI therapy scales, who gets hurt first?

• Undocumented immigrants whose data could reach ICE.

• Teens whose parents monitor family phone plans.

• Employees whose bosses run wellness programs tied to insurance discounts.

Each scenario turns therapy from refuge into risk. The risks of AI therapy aren’t theoretical; for vulnerable groups, they are a lived danger.

And what happens to human therapists? Clinics already report patients arriving with printouts of bot advice, some helpful and some dangerously wrong. Over-reliance on algorithms may erode real-world support networks, leaving people lonelier than before.

Can We Have Both Innovation and Intimacy?

The debate isn’t black and white. Regulators in the EU are drafting rules that would let users delete therapy transcripts within 24 hours. Start-ups are experimenting with on-device models that never send data to the cloud. Meanwhile, some therapists are embracing hybrid models: AI for homework, humans for depth.

Calls for AI regulation keep trending because people want safeguards, not shutdowns. Imagine an app that warns you before each session: “Nothing you say leaves this phone.” Would you feel safer? More importantly, would you still use it?

Your move matters. Before downloading the next shiny mental-health bot, ask three questions: Where does my data sleep? Who can wake it up? And what happens if it dreams out loud?