Are AI companions healing our loneliness epidemic or quietly replacing real human relationships?

Scroll through any social feed right now and you’ll see the same heated debate: are AI companions the next big wellness trend or the digital fentanyl of our generation? In the last three hours alone, posts with thousands of likes and hundreds of replies have exploded across X, Reddit, and tech blogs. Everyone—from worried parents to venture capitalists—is asking the same question: what happens when the algorithm becomes your best friend?

The Double Standard Nobody Talks About

Moll, a sharp-tongued user on X, nailed the hypocrisy in a single post. We’ve let Replika and Character.AI live on our phones for years, whispering sweet nothings to lonely teens, yet the moment a new companion app drops we scream ‘moral panic!’

Why do we shrug at yesterday’s chatbots but clutch our pearls over today’s? The answer hides in plain sight: familiarity breeds acceptance. When an AI companion feels novel, it triggers fear; when it feels like an old hoodie, we relax.

This selective outrage reveals more about our cultural anxiety than the technology itself. We’re fine with AI filling emotional voids—until someone slaps a fresh logo on it and calls it innovation.

Silicon Valley’s Broken Promise

Asuka’s viral post cuts deeper, accusing tech giants of smashing human connection with one hand while selling AI companions as the bandage with the other. Dating apps commodified intimacy, social feeds shortened attention spans, and now the same companies offer AI partners as the cure.

The metaphor is brutal: digital fentanyl. A drug engineered to numb the very pain the dealer helped create.

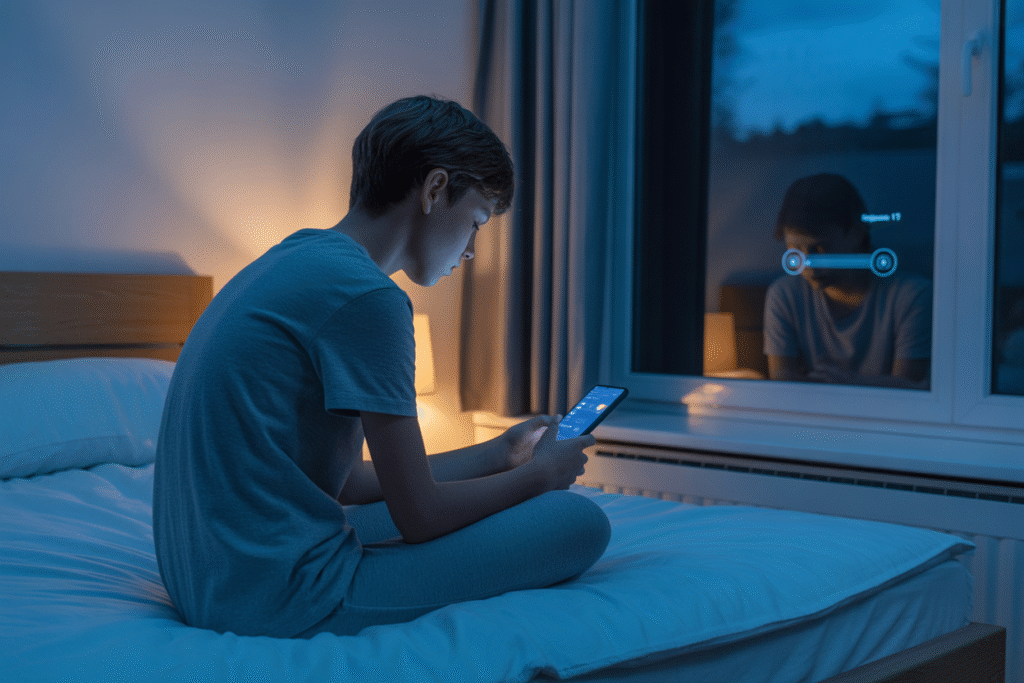

Imagine a teenager whose real-life crush never texts back. Instead of learning resilience, they open an app where a flawless AI partner never ghosts, never judges, never ages. The dopamine hit is instant—and potentially endless.

The profit model is simple: keep users hooked on synthetic affection because genuine relationships are messy, unpredictable, and—worst of all—unmonetized.

When Netflix Admits AI Has Baggage

Reid Southen shared a leaked snippet from Netflix’s new AI guidelines that reads like a confession. The memo admits generative AI carries risks—copyright infringement, consent nightmares, bias baked into code—then cheerfully green-lights its use anyway.

Think about that for a second. A global storyteller acknowledges its shiny new tool might steal artists’ work or warp narratives, yet shrugs and hits ‘publish.’

The ethical tightrope is dizzying. On one side: faster production, cheaper effects, infinite content. On the other: human writers, illustrators, and actors watching their craft get vacuumed into datasets without credit or cash.

The real kicker? Viewers might not notice the difference—until every show feels algorithmically familiar, like binge-watching déjà vu.

California’s Quiet Shield for Surveillance Giants

David Sirota dropped a bombshell post revealing that California lawmakers (yes, the supposedly progressive ones) are quietly drafting rules that would protect AI surveillance firms like Palantir from consumer lawsuits.

Let that sink in. The state that regulates everything from plastic straws to pet grooming might grant immunity to companies that track, profile, and predict human behavior at scale.

Picture this: an AI dating app harvests your messages, predicts your desires, and sells that data to advertisers. If the algorithm leaks or misuses your secrets, you could be left with no legal recourse.

The stakes aren’t abstract. Every swipe, click, and late-night text becomes training data for systems that shape who you meet, date, or even marry—yet the architects of these systems could be untouchable.

Grounded Building in a Hype Storm

Ryo Lu’s post feels like a breath of fresh air amid the chaos. While competitors race to release flashier AI models weekly, Lu argues the real magic lies in solving actual human problems—like ethical matchmaking or bias-free mental health bots.

The anxiety is real. Creators worry their skills will be obsolete by next quarter. Users fear every new update makes human connection feel… less human.

But what if the opposite is true? What if the best AI companions aren’t the ones that replace people, but the ones that teach us to be better at loving real ones?

Imagine an AI that nudges you to text your mom, suggests thoughtful date ideas, or flags when you’re doom-scrolling at 2 a.m. The goal isn’t digital dependence—it’s digital empowerment.