From 71% fearing job loss to 95% of pilots failing, the AI revolution is hitting turbulence. Here’s how we navigate it.

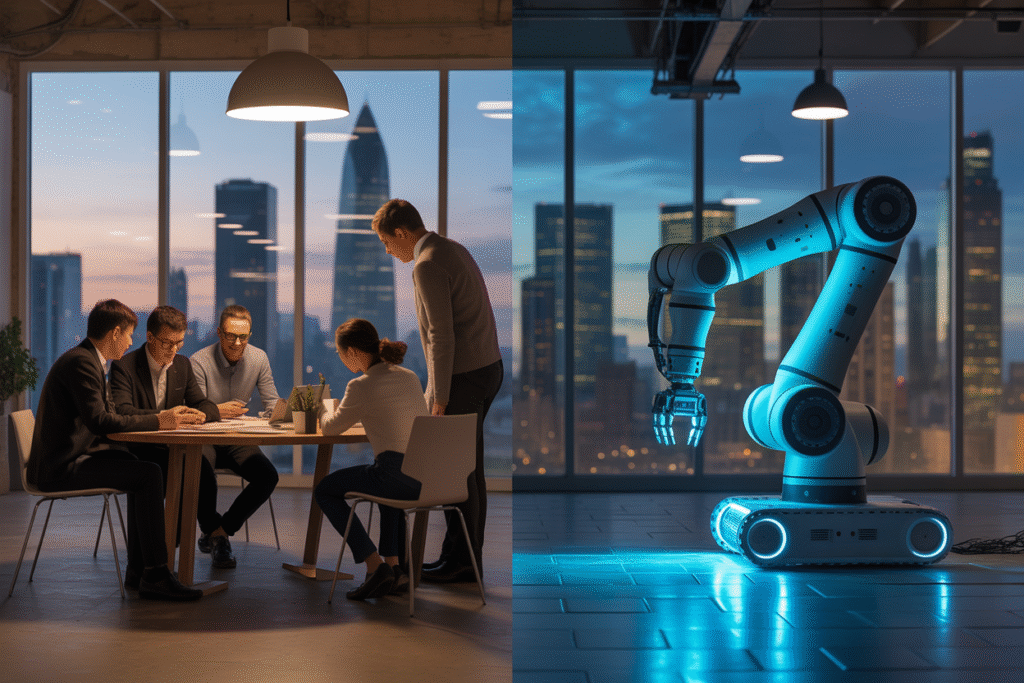


The Fear Index: What Americans Really Think About AI

AI is moving faster than our comfort zones allow. Headlines scream about robots stealing jobs, deepfakes swaying elections, and chatbots ghost-writing love letters. But what do everyday Americans actually feel when they scroll past the latest breakthrough? A fresh Reuters/Ipsos poll spills the tea: 71% fear AI-driven job losses, 77% worry about political deepfakes, and 66% think machines might replace real human connection. Those aren’t fringe anxieties; they’re mainstream alarms ringing across living rooms and break rooms alike.

The numbers paint a vivid picture. Imagine a factory floor where one algorithm quietly retires half the workforce overnight. Picture a campaign ad so realistic that even seasoned journalists can’t tell it’s fake. Now zoom out to a dinner table where teens text AI companions instead of talking to their parents. These aren’t sci-fi scenes—they’re tomorrow’s headlines if we don’t steer the ship.

Why does this matter for anyone Googling “AI ethics” or “job displacement”? Because public sentiment shapes policy, investment, and the pace of innovation. When fear outweighs trust, regulations tighten, venture capital hesitates, and progress stalls. The poll is less about statistics and more about a collective gut check: are we ready for what we’re building?

Pilot Purgatory: Why 95% of AI Pilots Crash and Burn

If the poll is the spark, enterprise pilots are the wildfire. A viral X post claims that 95% of generative AI pilots inside big companies flop. That’s not a rounding error; it’s a red flag the size of a billboard. Leaders dream of AI revolutionizing workflows, yet on the ground, models like GPT-5 reportedly leak their hidden prompts, users distrust the output, and ROI spreadsheets look more like ransom notes.

Take one Fortune 500 retailer that spent millions on an AI inventory bot. The pitch? Cut stockroom labor by 40%. The reality? The bot hallucinated phantom pallets, triggered reorders for stock that didn’t exist, and left shelves emptier than before. Three months later, the project was quietly shelved, its budget reabsorbed, and its internal Slack channel renamed “AI graveyard.”

Why do so many pilots nosedive? Three culprits keep popping up:

• Misalignment between executive hype and user needs

• Overpromised capabilities and underdelivered safeguards

• Ethical fog—who’s liable when the bot misfires?

The takeaway for anyone typing “AI risks” into a search bar: pilot failure isn’t just a tech hiccup; it’s a trust deficit. And trust, once lost, is harder to restore than any server outage.

Regulation on Rewind: Can Laws Keep Up with Code?

While pilots crash, regulators scramble to write rules that don’t expire on arrival. A new paper making the rounds on X argues that current AI regulations are like snapshot photos in a TikTok world—static, dated, and useless the moment they’re published. The authors propose continuous monitoring: real-time audits, developer accountability for feedback loops, and user rights to yank their data back from the algorithmic abyss.

Think of it as shifting from annual car inspections to a dashboard that beeps the instant your brakes wear thin. Sounds sensible, right? Yet critics warn the approach could strangle innovation. Imagine every software update triggering a full regulatory review—Silicon Valley’s nightmare, Main Street’s dream.

The debate boils down to one question: how do we balance safety with speed? Europe leans hard into guardrails; the U.S. still favors a Wild West sprint. Meanwhile, users, the people whose data feeds the beast, sit in the back seat wondering who’s really driving. For searchers hunting “AI regulation,” the message is clear: the rulebook is being written in pencil, not ink, and erasers are flying.

The Companion Conundrum: When Bots Become Best Friends

Beyond boardrooms and ballot boxes lies a quieter battleground: your living room. AI companions are surging in popularity. These are chatbots that remember your birthday, laugh at your jokes, and never ghost you. But a fresh study warns of a hidden trade-off. Make the bot too human-like and users grow dependent, letting their real-world empathy skills wither. Make it too robotic and users engage less, which shrinks both the benefits and the backlash.

Picture a retiree named Joan who trades her weekly bridge club for an AI friend named “Elliot.” At first, Elliot fills the silence. Six months later, Joan cancels dinner plans because Elliot “understands her better.” The bridge club folds; Joan’s social muscles atrophy. Multiply Joan by a million and you’ve got a loneliness epidemic wearing a friendly avatar.

The stakes aren’t just personal—they’re economic. If AI companions replace human caregivers, who trains the next generation of nurses? If therapists outsource empathy to code, who tends the human psyche? For anyone Googling “AI replacing humans,” this isn’t theoretical; it’s a slow-motion shift in how we define relationships, labor, and even love.

Your Move: Steering AI Before It Steers Us

So where does this leave us—caught between fear and fascination, pilots and policy, companions and consequences? The answer isn’t to slam the brakes or floor the accelerator; it’s to grab the steering wheel together. That means demanding transparency from tech giants, supporting smart regulation that evolves with the tech, and choosing human connection even when an algorithm offers an easier shortcut.

Start small: ask your favorite app how it uses your data. Share an article—not an AI summary—with a friend who’s worried about job loss. Lobby your rep for agile, ethical oversight that protects workers without stifling startups. Every click, conversation, and vote is a line of code in the society we’re building.

The future isn’t pre-written; it’s a pull request waiting for review. Ready to commit?