AI is rewriting war faster than laws can keep up—here’s why your next blackout might be a battle.

From invisible cyber strikes to AI generals calling shots, warfare is morphing faster than headlines can track. Fresh reports reveal how algorithms are slipping past legal guardrails, shrinking command staffs, and turning everyday routers into potential battlefields. Let’s unpack what’s happening, and why it matters to anyone with Wi-Fi.

When Code Becomes a Weapon

Imagine a battlefield where code, not bullets, is the first weapon fired. A new West Point analysis warns that AI-driven cyber tools are quietly rewriting the rules of war. These systems scan, decide, and strike faster than any human hacker, yet they operate in a legal gray zone that most of us never see. The big question: when an algorithm launches an attack, who pulls the trigger? And what happens if the wrong server goes down?

The study outlines three nightmare scenarios. First, an AI botnet could cripple a hospital network during a natural disaster while masquerading as a routine outage. Second, deepfake diplomatic cables might spark false-flag retaliation between nuclear powers. Third, autonomous malware could leap from military to civilian infrastructure before anyone notices. Each case highlights a chilling gap: current International Humanitarian Law does not clearly classify these acts as “attacks,” leaving victims without legal recourse.

Why should you care? Because the same routers that serve Netflix also route military logistics. A misattributed cyber strike could trigger sanctions, blackouts, or worse. The researchers argue for urgent Article 36 reviews, the legal checks on new weapons required by Additional Protocol I to the Geneva Conventions. Think of them as safety inspections for killer code. Defense contractors, however, insist existing treaties are enough. Meanwhile, human rights groups warn that states may design AI operations to stay just below the “attack” threshold, effectively legalizing digital sabotage.

Trusting Machines with Life-or-Death Calls

Now picture generals leaning on Siri-like aides to pick bombing targets. A fresh CyCon 2025 paper by Dr. Anna Rosalie Greipl explores exactly that scenario. AI decision-support systems now sift satellite feeds, social chatter, and sensor data to recommend strikes in seconds. Sounds efficient, right? The catch: these tools spit out probabilities, not certainties, and their training data can carry hidden biases—like flagging wheelchair users as combatants because the model learned from ableist datasets.

The deeper risk is automation bias. Under pressure, operators may rubber-stamp AI suggestions without questioning the logic. Greipl cites a tabletop exercise where officers accepted a 73% confidence score for a target that turned out to be a school. The AI wasn’t evil; it just crunched flawed inputs. Yet the human-machine loop blurred accountability so badly that no one knew who to blame.

International Humanitarian Law was built on human judgment—proportionality, distinction, precaution. When an algorithm recommends action, who weighs civilian harm? The paper proposes safeguards: time-outs for human review, transparent model cards, and hard limits on how long stale data can influence life-or-death choices. Critics counter that any delay gives adversaries an edge, sparking a fierce debate between speed and ethics.

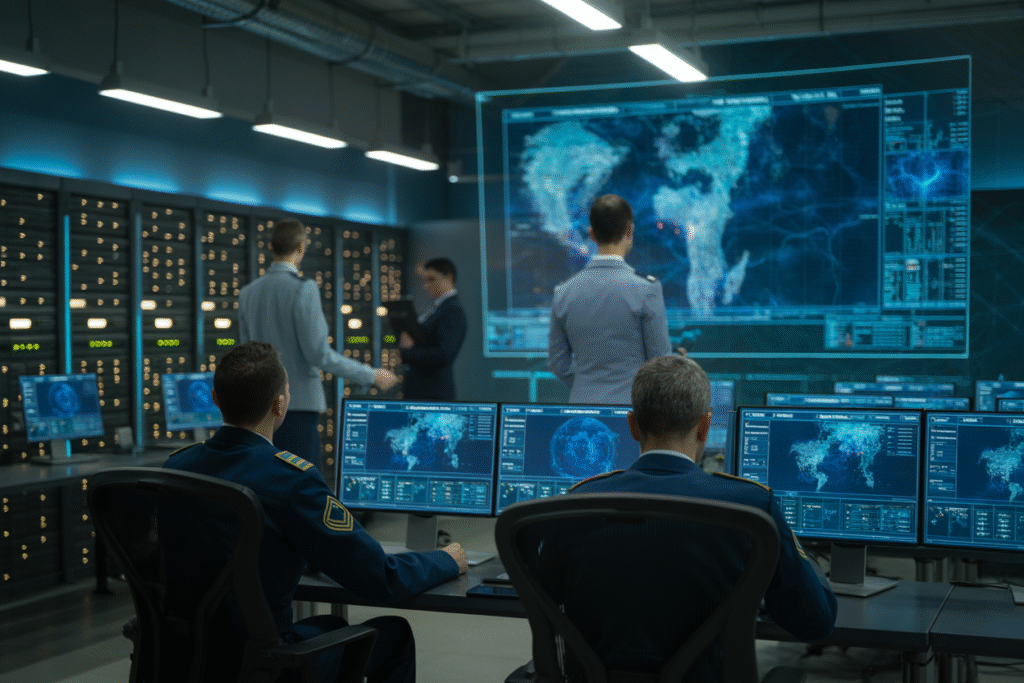

The Invisible Coup in Command Centers

While headlines focus on killer robots, AI is quietly gut-punching military org charts. A new American University study reveals that large-language-model agents are poised to shrink command staffs by up to 40%. Picture a 50-officer planning division cut to roughly 30, with AI drafting battle plans, logistics routes, and intel summaries in minutes. The upside? Leaner, faster decisions. The downside? A single cyber breach could paralyze an entire theater.

The study outlines a near-future war room where AI agents debate strategy in natural language, then hand a polished plan to a human commander for final sign-off. Think of it as ChatGPT with a security clearance and a penchant for Clausewitz. Yet the same flexibility that makes these agents powerful also makes them unpredictable. A poisoned training dataset could nudge recommendations toward disastrous outcomes, and the chain of command may not spot the drift until it’s too late.

Policymakers are scrambling. The White House’s July 2025 AI Action Plan calls for new officer training that blends critical thinking with AI literacy; imagine war colleges teaching officers to interrogate algorithms like hostile witnesses. Skeptics argue this is hype masking deeper issues: profit-driven vendors rushing immature tech, and oversight bodies lacking the expertise to audit black-box systems. The stakes? Nothing less than the future structure of national defense.

Why Your Wi-Fi Might Be a War Zone

So what does all this mean for the average citizen? First, your digital life is now part of the battlefield. The same routers that stream your favorite show also ferry military logistics; a misattributed cyber strike could knock you offline for days. Second, the ethical questions aren’t abstract—they affect how and when your country goes to war. If an AI misfires, will leaders admit fault, or blame a glitch?

Third, job displacement isn’t just a Silicon Valley worry. Military bureaucracies face the same automation squeeze as any industry. Analysts predict thousands of support roles could vanish, replaced by a handful of AI-savvy officers. Retraining programs are promised, but timelines remain fuzzy.

Finally, regulation is racing to catch up. Some experts advocate a Geneva Convention for cyber weapons; others fear any treaty will be obsolete before the ink dries. Meanwhile, whistle-blowers warn that classified AI projects operate with minimal oversight, raising the specter of unchecked escalation. The takeaway? The decisions made in dimly lit server rooms today could echo in tomorrow’s headlines.

What You Can Do Before the Next Alert

Feeling overwhelmed? You’re not alone. The good news is that awareness is the first step toward accountability. Share this article with a friend who still thinks AI warfare is science fiction. Ask your representatives how they plan to audit military algorithms. And next time your internet hiccups, remember—it might not be your router, but a silent skirmish in the newest domain of war.

The future of conflict is being coded right now. Let’s make sure humans still hold the kill switch.