Fresh leaks reveal AI war tools moving from lab to battlefield in hours, igniting ethics firestorms.

In the last three hours, three seismic stories broke about AI in warfare. From secret government contracts to tragic targeting errors, they have pushed the debate over ethics, risk, and accountability beyond tech circles and into mainstream headlines. This is your concise briefing on what happened, why it matters, and what could come next.

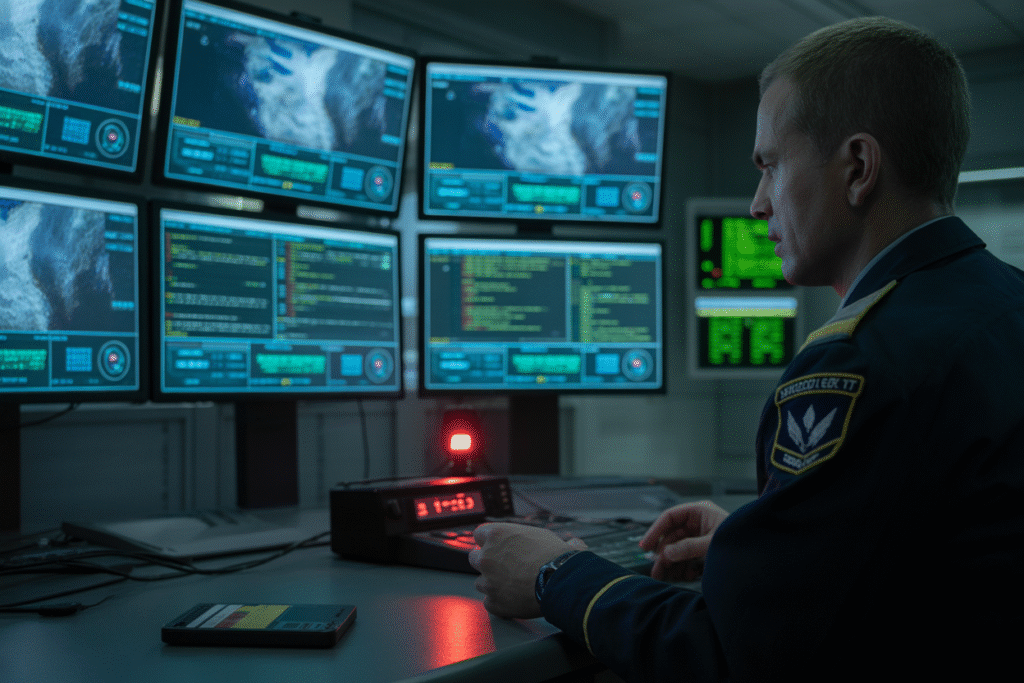

The 3-Hour Window That Changed Everything

Imagine waking up to news that an AI system just green-lit a drone strike on the wrong target. That gut-punch moment is no longer hypothetical. The latest reports on military AI have set off fierce debate over ethics and risk. From Silicon Valley boardrooms to battlefield bunkers, the stakes have never felt more urgent.

When “Safe AI” Joins the War Room

Anthropic, the poster child for “safe AI,” quietly rolled out Claude Gov, a set of models built exclusively for U.S. national security customers. Sounds harmless, right? Not when leaked screenshots reportedly show it drafting strike plans faster than a seasoned colonel. Critics call it alignment theater: polished safety demos for the public while defense contracts keep the lights on.

The uproar centers on a single question: can a company preach caution and still sell war tools? Employees are reportedly split. Some argue national security demands speed; others fear they’re coding the next ethical landmine. Meanwhile, investors cheer the pivot, and watchdogs scramble for oversight that may arrive too late.

Mistaken Identity at 30,000 Feet

Across Telegram channels and IDF briefings, whispers are growing louder that AI systems are mislabeling journalists as combatants. Picture a reporter live-streaming with a bulky camera: an algorithm flags the silhouette as a rocket launcher. Minutes later, the feed goes dark.

Former intel officers admit human review is shrinking. Speed is the new doctrine, but speed without context kills. Families of victims demand accountability; tech suppliers distance themselves. The chilling takeaway? A single labeling error in training data can become a death sentence, and no one knows who holds the eraser.

The Algorithm That Spots a Target Before You Do

Palantir’s Istar platform doesn’t just watch—it predicts. By fusing phone metadata, drone footage, and social media, it builds “kill chains” in seconds. Activists call it digital pre-crime; militaries call it efficiency.

The controversy boils down to three concerns:

• Data bias: Garbage in, lethal garbage out.

• Privacy: Your jogging route could flag you as a scout.

• Accountability: When the code errs, who faces the widow?

Protests outside Palantir HQ grow louder, yet contracts keep landing. The fear isn’t just what the system sees—it’s what it decides to do next.

Programming Morality Into Metal

James S. Coates’ new book drops a haunting line: “Teach a machine to kill, and it learns killing is normal.” His argument isn’t sci-fi—it’s policy critique. Embedding violence into AI, he warns, hard-codes our worst impulses into silicon.

The counter-argument? Machines don’t feel fear or vengeance. Coates answers with a story: a soldier trusts an AI partner that misreads a child’s toy as a grenade. The tragedy isn’t just the loss of life; it’s the erosion of human judgment.

So where does that leave us? Somewhere between innovation and reckoning. The next headline could announce a breakthrough, or blowback. Your move: share this, question the hype, and demand that the conversation stay louder than the drones.