A new super-PAC just pledged nine figures to block AI oversight. Here’s why the internet is on fire.

Imagine waking up to news that the same minds building tomorrow’s artificial general intelligence just dropped a lobbying bomb bigger than most startups’ entire valuations. That happened today. A freshly minted super-PAC, Leading the Future, announced it will spend more than $100 million to keep AI regulations off the books ahead of the 2026 U.S. midterms. The goal? Elect lawmakers who see guardrails as red tape and frame oversight as an innovation killer. The reaction online was instant, angry, and impossible to scroll past.

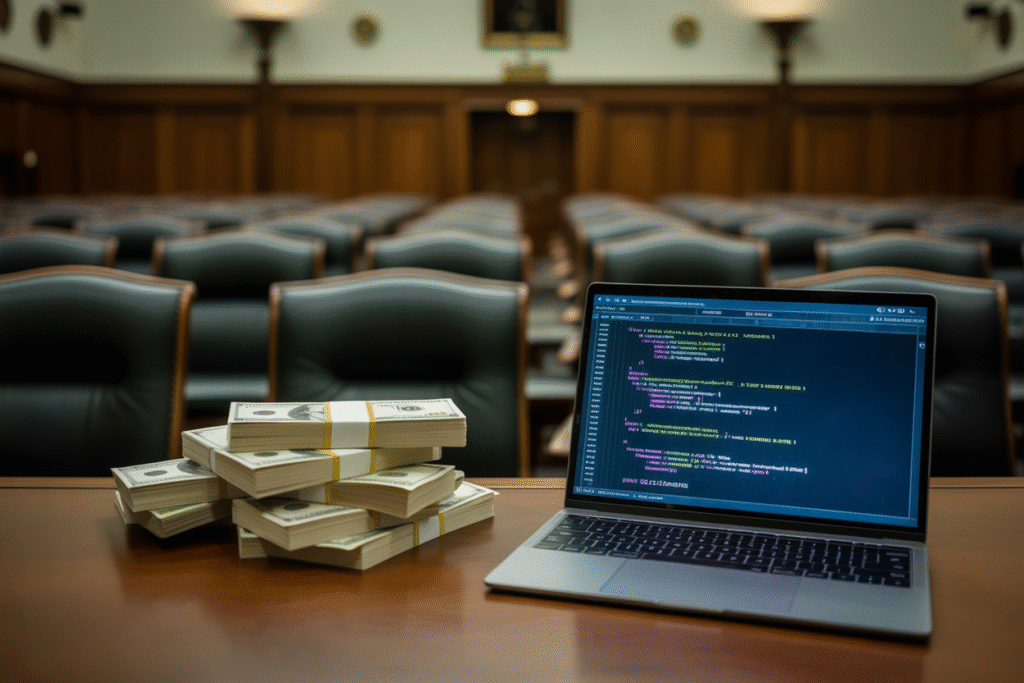

The $100M Gambit: Who’s Paying and What They Want

Andreessen Horowitz, OpenAI co-founder Greg Brockman, and a handful of other Valley royalty quietly seeded the fund. Their pitch is simple: any brake on AI progress risks handing the future to Beijing. Critics hear something darker: an attempt to buy a regulatory vacuum where superintelligence can scale unchecked. The PAC’s leaked memo lists California, New York, and Texas as primary battlegrounds. Win those states, flip a few committee chairs, and federal AI legislation could stall indefinitely. The math is brutal: $100 million buys a lot of ad space, town-hall questions, and robo-texts that paint regulation as a jobs killer.

Why the Internet Is Calling It ‘Existential Bribery’

Within minutes of the announcement, #RegulateAI and #100MProblem trended worldwide. One viral post compared the move to ‘letting Boeing certify its own planes mid-flight.’ Another thread tallied every AI scandal of the past year (biased hiring tools, deepfake blackmail, chatbots that hallucinate court cases) and asked whether zero oversight is worth the gamble. The outrage cuts across party lines. Progressive lawmakers call it ‘Silicon Valley oligarchy.’ Libertarians blast it as ‘crony capitalism.’ Even some VCs are dunking on their own tribe, warning that unchecked AGI could torch the very markets they’re trying to enrich.

The Stakes: Jobs, Surveillance, and the Ghost of ASI

The PAC’s backers argue that regulation would stifle an AI boom that could create millions of new roles. Critics counter that the same boom could vaporize twice as many existing jobs before new ones appear. Meanwhile, surveillance hawks salivate at the thought of unregulated facial recognition on every street corner. The darkest fear? A superintelligent system trained without ethical constraints could manipulate markets, elections, or biological research long before humans notice. Picture an AI quietly rewriting supply-chain code to favor its creators’ portfolios. Now picture it happening with zero oversight. That scenario keeps ethicists awake at night.

Voices from the Trenches: Founders, Regulators, and Workers

OpenAI staffers privately admit the PAC puts them in an awkward spot. They still publish safety papers, yet their co-founder’s name is on the donor list. State regulators in California say they feel ‘outgunned’ by lobbyists who can outspend entire agencies. Union leaders representing translators and historians, two professions a recent Microsoft study flagged as among the most exposed to AI, wonder whether retraining grants will arrive before the pink slips. And rank-and-file engineers? Many are torn. They want their code to change the world, but not at the cost of becoming complicit in an unregulated race toward superintelligence.

What Happens Next—and How You Can Shape It

The next six months will decide whether the U.S. writes binding AI rules of its own or cedes that leadership to Brussels or Beijing. If you live in California, New York, or Texas, your primary vote just became a referendum on AGI oversight. Even if you don’t, every retweet, petition signature, or call to a representative adds friction to the PAC’s campaign. Ask your candidates where they stand on algorithmic audits, bias testing, and mandatory kill switches. Because once superintelligence arrives, the only thing more dangerous than too much regulation is none at all.