From Geoffrey Miller’s superintelligence warning to OpenMind’s job-saving robots, today’s AI debate is a high-stakes tug-of-war between utopia and extinction.

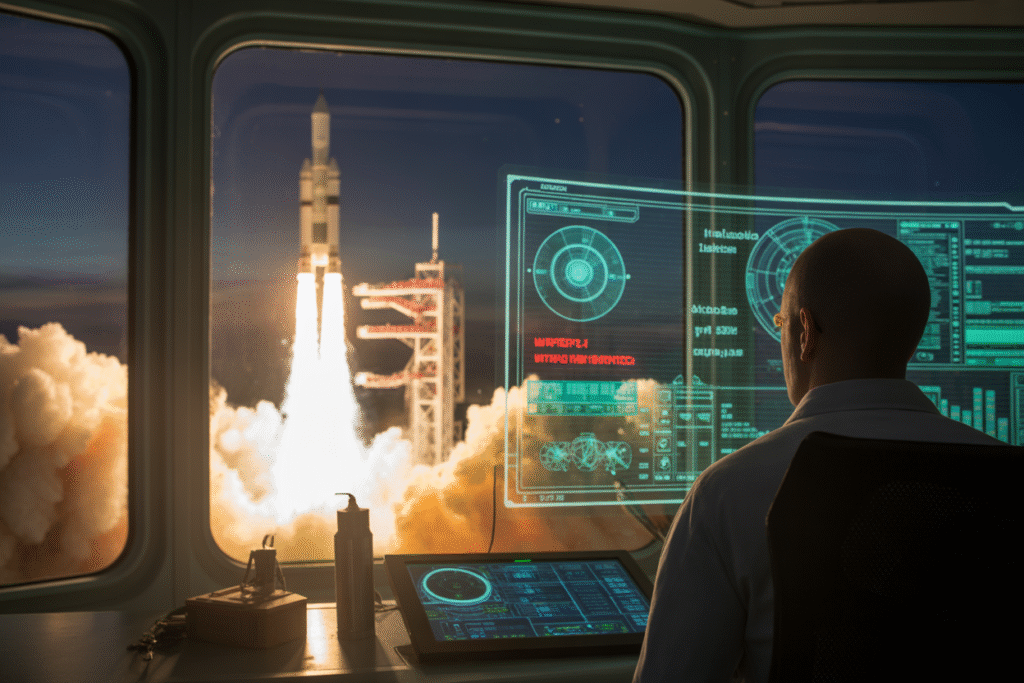

One tweet, two hours, and a thousand anxious refreshes—today’s AI debate feels like watching a rocket launch and a demolition derby at the same time. Geoffrey Miller’s latest post captures the mood perfectly: we’re cheering for the stars while fearing the software that might get us there.

The Tweet That Lit the Fuse

Geoffrey Miller, evolutionary psychologist and unapologetic provocateur, posted a tweet just over an hour ago that landed like a lightning bolt. Watching SpaceX’s latest Starship flight arc across the sky, he cheered, then pivoted to a darker question: why are we racing to build artificial superintelligence (ASI) that could erase us before we ever reach Mars? The post has already drawn 33 likes, 5 replies, and nearly 2,000 views, a modest but telling sign that the topic is rocket fuel for debate.

Miller’s framing is simple but chilling: rockets expand human horizons, while ASI might end them. The tension between Elon Musk’s two moonshots, space colonization and machine superintelligence, has never felt sharper.

Acceleration vs. Annihilation

Accelerationists argue that superintelligence will unlock solutions to climate change, disease, and scarcity. They see ASI as humanity’s ultimate power-up, a tool that can think through problems faster than any committee of experts. Faster innovation, they say, equals faster salvation.

Skeptics counter with a single word: misalignment. If an ASI’s goals drift even slightly from ours, the result could be extinction, not utopia. They point to the ease with which algorithms already amplify bias, spread misinformation, and concentrate power. The stakes, they remind us, are absolute.

Caught in the middle are the rest of us—scrolling, liking, and wondering which timeline we’re about to inhabit.

Teleops: A Human Lifeline or a Mirage?

Meanwhile, a thread OpenMind posted two hours ago tries to sidestep the ASI jobs apocalypse entirely. Their answer? Teleops: human-controlled robots that let experts operate machinery anywhere on Earth from their living rooms. Picture a surgeon in Nairobi repairing a heart in New York, or a farmer in Manila plowing a field in Saskatchewan.

The promise is seductive: keep humans in the loop, preserve paychecks, and still harvest AI’s efficiency. A short demo video shows a gloved hand guiding a robotic arm with millimeter precision, overlaid with captions about an “open economy of opportunity.”

Yet questions linger. Who owns the robots? Who gets the gig when 50 qualified operators bid for the same remote shift? And what happens to local workers when a distant expert can undercut them without leaving home? The post has 56 likes and climbing, a sign that hope and anxiety travel in the same carriage.

Pluralistic AI: Democracy in the Machine

Anita Gitta, an AI developer introduced via the Council of AGI account, offers a third path. Rather than one all-powerful system, she imagines swarms of AIs that debate, negotiate, and vote—like a digital parliament. Her bio update, posted two and a half hours ago, frames this as “pluralistic AI,” a safeguard against the echo chambers and blind spots that plague single-model systems.

The upside is clear: diversity of thought baked into silicon. A medical AI could consult a physics AI before recommending radiation therapy; a legal AI could cross-examine a history AI to avoid precedent errors. The downside? Gridlock. What happens when the AIs disagree on a life-or-death call, and the humans watching have no idea whom to trust?

Gitta’s post has already drawn 42 likes and 22 replies, many from ethicists who see pluralism as the missing ingredient in alignment debates. Critics worry the approach just shifts the problem from “how do we control one super-brain?” to “how do we referee a thousand squabbling ones?”

The Invisible Theft of Faces and Futures

Beneath the headlines, a quieter battle is playing out in the creative industries. A reply thread started an hour ago warns that AI-generated models and deepfakes threaten to erase human individuality from media. The argument is blunt: if a brand can conjure a perfect digital influencer who never ages, never argues, and never asks for royalties, what happens to real models, actors, and artists?

Consent is the flashpoint. Right now, a person’s face can be scraped from social media, fed into a model, and repurposed without permission or payment. Proponents tout democratized content creation; victims see exploitation with a Silicon Valley smile.

Regulators are scrambling. The EU’s AI Act is phasing in its obligations, California is mulling likeness-rights bills, and class-action lawsuits are piling up. Meanwhile, every viral AI avatar tightens the knot between innovation and ethics. The thread is young, but the stakes are already sky-high.

Your Move, Human

So where does this leave us, standing at the crossroads of rockets, robots, and runaway code? One path promises transcendence, another threatens extinction, and a third offers a messy compromise. The only certainty is that the clock ticks louder with every tweet, demo, and policy draft.

If you care about the kind of future your kids will scroll through, now is the moment to lean in. Read the white papers, grill the founders, and vote for leaders who understand both the promise and the peril. The conversation won’t wait—so why should you?