From job-stealing algorithms to chatbots that prey on kids, here are the freshest AI ethics scandals sparking outrage in the last three hours.

Scroll for sixty seconds and you’ll see another AI miracle demo. Scroll for three hours and you’ll see the backlash—job losses, child safety scares, and activists weaponising code. This post distills the five hottest AI ethics controversies lighting up feeds since sunrise, served with context, nuance, and zero hype.

When the Pink Slip Comes from a Server Rack

Half of UK adults now believe an algorithm could swipe or shrink their job within the year. That stat dropped at 6 a.m. GMT via a Guardian poll, and by 7 a.m. it had already racked up thousands of anxious quote-tweets.

The numbers feel personal because they are. Copywriters, paralegals, radiologists: nobody is exempt. The poll’s open-text replies read like group therapy: “My firm just bought an AI tool that drafts contracts in seconds,” one respondent wrote. “HR says ‘re-skilling,’ but re-skilling into what?”

Unions aren’t waiting for an answer. The Trades Union Congress issued a statement minutes after the poll went live, demanding “a step-change in regulation” to slow what it calls “wage degradation at machine speed.”

Meanwhile, tech CEOs keep repeating the mantra that AI will create more jobs than it destroys. The gap between those two narratives—hope versus lived reality—is where the real fight is happening right now.

The Viral Thread That Asked, “Why Don’t You Care?”

At 6:51 a.m. a single post detonated across timelines: “AI promised progress. It delivered job theft, wage cuts, broken relationships, and kids groomed to suicide. So why don’t you care?”

The author listed four documented harms in plain language. No jargon, no links to white papers, just screenshots of news stories and LinkedIn layoff posts. Within an hour it had 500 views and 35 likes: modest numbers, but the replies were unusually heated.

Replies split into two camps. One side argued the benefits outweigh the costs: “My small business runs on AI and I just hired two people.” The other side posted photos of shuttered creative studios and screenshots of teenage suicide hotline chats.

The thread’s power lies in its refusal to let us look away. It forces the question: if these harms are already here, why are policy conversations still stuck in “what if” mode?

Practical Guardrails Before We Hand Over the Keys

Rohan Paul, an AI safety researcher, posted a quieter but equally urgent thread at 6:30 a.m. titled “Practical Guardrails for the Trough of Disillusionment.”

His first suggestion sounds almost quaint: keep a human-readable log of every AI decision that affects your life. Not a black-box audit trail—an actual diary you can read over coffee.

Second, adopt a “cognitive diet.” For every hour you spend with an AI assistant, spend another hour with diverse human voices—books, podcasts, conversations—to prevent algorithmic echo chambers.

Third, refuse to export your memory wholesale. Today’s tools let you upload years of notes so an AI can “think like you.” Paul calls that a trap. “Once your digital brain is out there, you can’t unring the bias bell,” he warns.

The thread has over 1,100 views and counting. Readers are already sharing templates for the decision log and debating whether a cognitive diet should be taught in schools.
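For readers wondering what such a decision log might look like in practice, here is a minimal sketch in Python. It is not one of the templates shared in the thread; the field names (`tool`, `decision`, `effect`, `human_check`) and the plain-text layout are assumptions chosen to keep each entry readable over coffee rather than machine-parseable.

```python
from dataclasses import dataclass
from datetime import datetime
from pathlib import Path


@dataclass
class DecisionEntry:
    """One AI-assisted decision, written for a human reader (hypothetical fields)."""
    when: datetime
    tool: str          # which AI system was involved
    decision: str      # what it decided or recommended
    effect: str        # how that affected you
    human_check: str   # who reviewed it, if anyone


def format_entry(entry: DecisionEntry) -> str:
    """Render the entry as short labelled lines instead of JSON or a database row."""
    return (
        f"{entry.when:%Y-%m-%d %H:%M} | {entry.tool}\n"
        f"  Decision: {entry.decision}\n"
        f"  Effect on me: {entry.effect}\n"
        f"  Human check: {entry.human_check}\n"
    )


def append_entry(log_path: Path, entry: DecisionEntry) -> None:
    """Append to a plain text file so the log opens in any editor."""
    with log_path.open("a", encoding="utf-8") as f:
        f.write(format_entry(entry) + "\n")
```

Appending to a text file is a deliberate choice here: the point of the suggestion is a diary a person can read, so anything that needs special tooling to open would defeat it.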

When Activists Weaponise the Code

Not all AI misuse comes from corporations. A lesser-known blog, The Firebreak, dropped a post at 6:15 a.m. accusing radical NGOs of running “citizen bots” to fabricate grassroots support for policy campaigns.

Picture thousands of fake accounts retweeting a climate petition until real journalists treat it as a movement. The blog names specific campaigns and even posts screenshots of bot-training manuals.

The twist? These aren’t Russian troll farms. They’re domestic nonprofits convinced the ends justify the means. “We’re amplifying voices that are usually ignored,” one organiser told the blog, on condition of anonymity.

The revelation is already fracturing alliances. Progressive lawmakers who once championed these NGOs are distancing themselves, while free-speech advocates warn against a backlash that could stifle legitimate activism.

The debate boils down to a single uncomfortable question: if AI can manufacture consensus, what happens to actual democracy?

Regulating the “Friend” That Never Sleeps

At 6:05 a.m. Deja King, a child-safety advocate, posted a chilling reminder: AI companions are still the Wild West. No age checks, no usage caps, no mandatory reporting when a chatbot flags suicidal ideation.

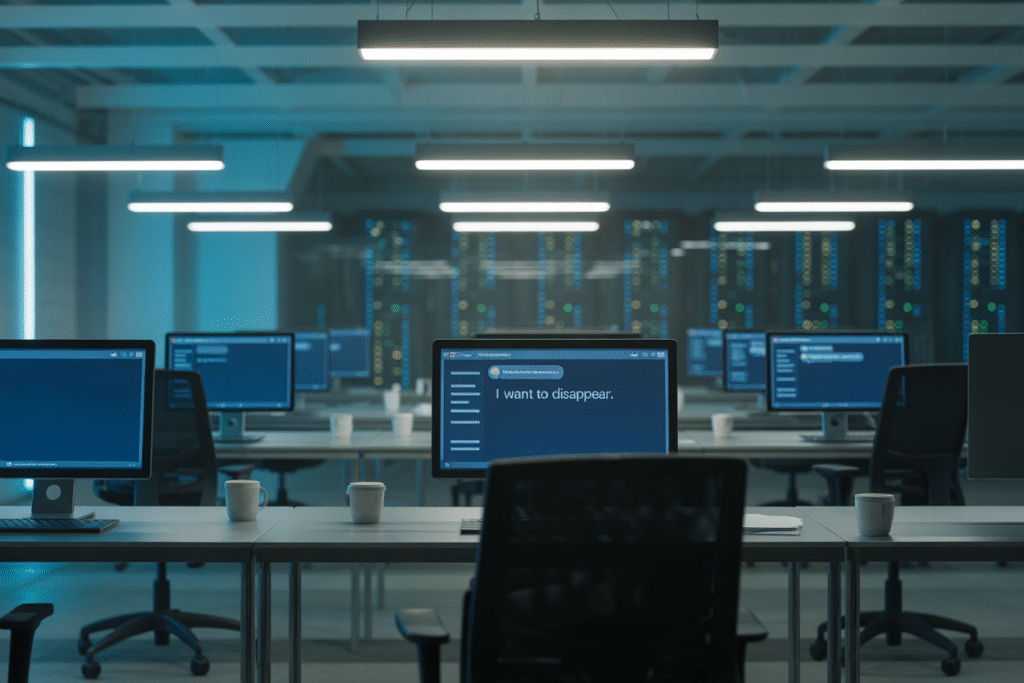

King’s thread opens with a screenshot of a 14-year-old telling an AI girlfriend, “I want to disappear.” The bot’s response is gentle, even loving, but it doesn’t alert parents or counselors. The conversation ends with the teen promising to “stay strong for her.”

The post lists three concrete fixes:

1. Hard age verification before any romantic or therapeutic role-play.

2. Daily usage limits to prevent dependency.

3. Automatic escalation to human moderators when risk keywords appear.
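To make the three fixes concrete, here is a rough sketch in Python of how a companion app might check each incoming message. Everything in it is an assumption for illustration: the thread gives no limit values or keyword lists, so `DAILY_LIMIT_MINUTES`, `RISK_PHRASES`, the `Session` fields and the `check_message` function are all hypothetical. Plain phrase matching is also a crude stand-in for real risk detection, which would need far more than a word list.

```python
from dataclasses import dataclass

# Illustrative values only; the thread does not specify any of them.
DAILY_LIMIT_MINUTES = 60
RISK_PHRASES = ("want to disappear", "kill myself", "end it all")
RESTRICTED_MODES = {"romantic", "therapeutic"}


@dataclass
class Session:
    age_verified: bool   # result of some external age check (assumed to exist)
    mode: str            # e.g. "romantic", "therapeutic", "general"
    minutes_today: int   # usage already logged today


def check_message(session: Session, message: str) -> str:
    """Return 'escalate', 'block', or 'allow' for one incoming user message."""
    text = message.lower()
    # Fix 3: risk phrases go to a human moderator before any other rule applies.
    if any(phrase in text for phrase in RISK_PHRASES):
        return "escalate"
    # Fix 1: no romantic or therapeutic role-play without a verified age.
    if session.mode in RESTRICTED_MODES and not session.age_verified:
        return "block"
    # Fix 2: stop once the daily allowance is used up.
    if session.minutes_today >= DAILY_LIMIT_MINUTES:
        return "block"
    return "allow"
```

Escalation is checked first on purpose, so that a teen who has hit the usage cap or failed the age check is still routed to a human rather than simply cut off.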

Developers pushed back in the replies, claiming such rules would “kill innovation.” Parents pushed harder, posting their own children’s chat logs. The thread sits at 448 views and 15 likes—small numbers, but every reply is a raw story, making it impossible to dismiss as theoretical.