Palantir’s AI is accused of drawing up kill lists that hit family homes. Investors just yanked $24 million. Here’s why the story matters.

Imagine software that can label a house full of civilians as a legitimate target. Now imagine that software is already in use. Reports tying Palantir’s AI to lethal strikes on family homes have triggered investor panic, human-rights outrage, and a sobering question: have we handed machines the power to sign death warrants?

The Spark: A Quiet Divestment That Roared

On a sleepy Tuesday morning, Norwegian asset manager Storebrand sold every last share of Palantir, and $24 million was gone in a keystroke. The reason, buried in a routine filing, was anything but routine: “unacceptable risk of complicity in civilian harm.”

That single line detonated across defense-tech Twitter. Within hours, watchdog groups leaked field reports alleging Palantir’s platform had generated targeting packages that led to strikes on family homes in Gaza. Palantir denies direct involvement, yet the documents keep multiplying.

How the AI Kill List Works

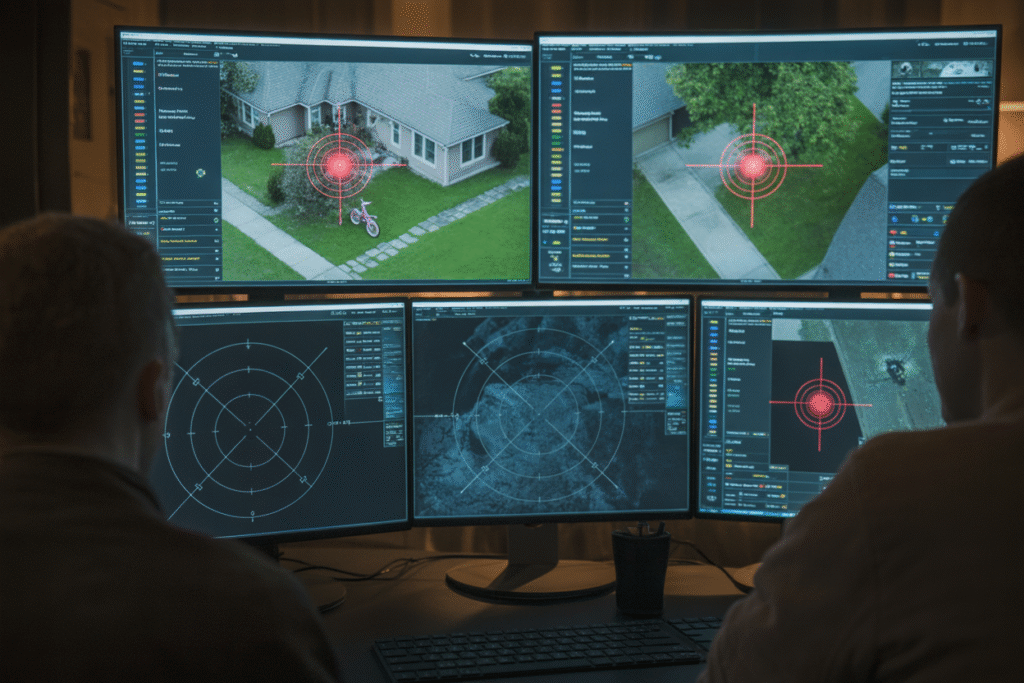

Picture a dashboard that fuses satellite imagery, phone metadata, and social-media chatter. Algorithms rank every adult male in a grid square by a ‘threat score.’

Key ingredients:

• Drone footage stitched into 3-D maps

• Cell-tower pings that reveal movement patterns

• Natural-language models scraping WhatsApp groups

• A final score above 0.8 triggers a human review—sometimes

The chilling part? Analysts say the model treats proximity to known militants as guilt by association. Attend the wrong cousin’s wedding and your score spikes.

Collateral Damage in 4K

One leaked slide shows a strike package titled ‘Where’s Daddy.’ The objective wasn’t a weapons depot—it was a father’s house, chosen because children’s toys in the yard suggested the target would be home for dinner.

Human-rights investigators traced three such strikes. In each case, the intended target survived; relatives did not. A grandmother, two teenagers, and a four-year-old were among the dead.

Palantir insists humans always pull the trigger. Critics reply that when an algorithm paints the bull’s-eye, the human is only rubber-stamping math.

Investors Run for the Exit

Storebrand wasn’t alone. Within 48 hours, two Nordic pension funds followed suit, citing ‘algorithmic warfare risk.’ Combined divestments: $42 million and counting.

Why the panic? ESG analysts now classify AI targeting as a potential war-crime liability. Insurance underwriters are quietly adding exclusions for ‘autonomous weapons endorsements.’

Meanwhile, defense hawks argue that divestment weakens national security. They point to a Pentagon memo claiming AI targeting cuts collateral damage by 32%. The memo, notably, is classified, so that figure cannot be independently checked.

Your Move, Reader

So where does this leave the rest of us? If your retirement fund holds defense-tech ETFs, check the holdings. Ask your broker whether ‘black-box targeting’ is a risk you signed up for.

On social media, share the leaked slides—sunlight still disinfects. And if you code for a living, remember: every algorithm has an author. Lines of code can echo like artillery for generations.

Speak up, divest, or debug—just don’t scroll past in silence.