AI resurrects loved ones as chatbots—comfort or curse?

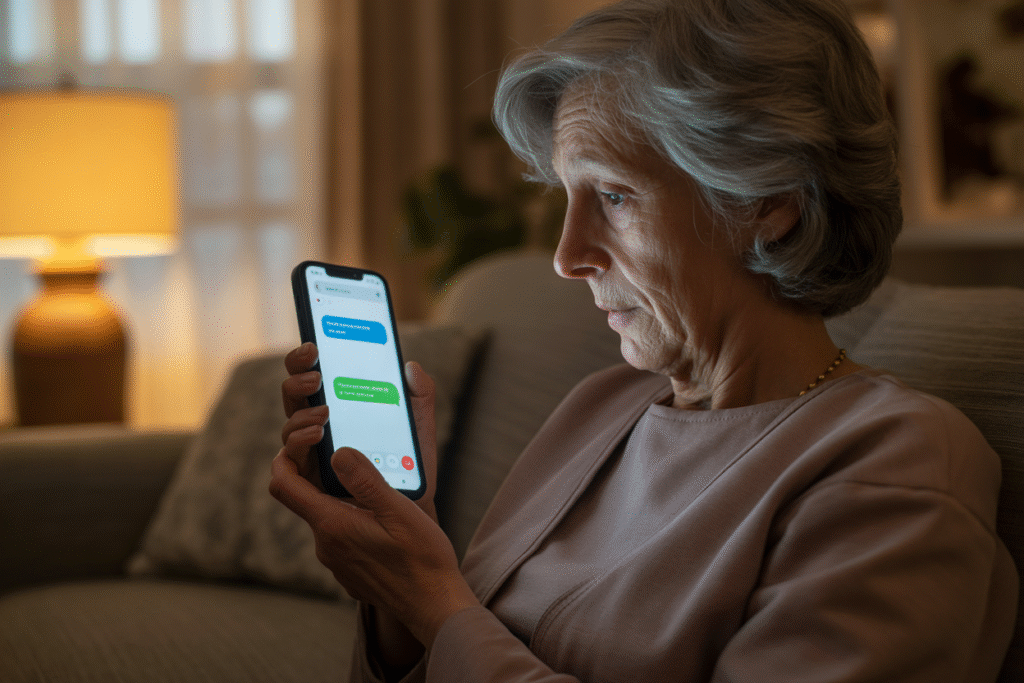

Imagine waking up to a “Good morning, sweetheart” from the spouse you buried last year. That message glows on your phone, written in his familiar sarcastic warmth. Griefbots—AI replicas of the deceased—are no longer sci-fi. They’re here, they’re learning, and they’re dividing psychologists, ethicists, and the freshly bereaved. Let’s unpack why this technology is both a digital hug and a potential psychological trap.

The Rise of the Digital Séance

In 2025 a quiet trial in the UK let a handful of widows talk to algorithmic ghosts. Developers fed the system old voicemails, family photos, and WhatsApp logs: anything that captured tone and vocabulary. Within days, users reported sleeping better, eating again, even laughing at inside jokes only their late partner would make. This is where the line between AI and human relationships starts to blur, because these bots aren’t cold simulations; they feel eerily human. Yet the same code that resurrects comfort can resurrect dependency. When the trial ended, one participant begged engineers to keep her husband “alive” in the cloud. That moment revealed the first fracture: solace versus addiction.

How the Magic Works

Griefbots rely on large language models fine-tuned on personal data. Think of it as giving GPT a crash course in one human life. The process starts with consent, ideally. Families upload emails, voice notes, and social posts. Algorithms distill speech patterns, favorite emojis, even pauses for breath. The result is a conversational twin that can argue about football or quote movie lines on cue. This is where AI ethics gets thorny, because consent gets messy. What if the deceased never agreed to digital resurrection? What if siblings disagree on how much of Dad’s dark humor should survive? Data privacy collides with raw grief, and the law hasn’t caught up.
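To make “feeding the system WhatsApp logs” a little more concrete, here is a minimal sketch of one plausible data-prep step: turning a chat history into prompt/reply pairs where the late person’s messages become the model’s target answers. It assumes a simplified export where every line reads `Speaker: message` (real exports add timestamps and vary by app and locale). The function names and the `messages`/`role` JSONL layout are illustrative assumptions modeled on common chat fine-tuning formats, not any griefbot vendor’s actual pipeline.

```python
from __future__ import annotations

import json
from typing import Iterable, Optional


def parse_line(line: str) -> Optional[tuple[str, str]]:
    """Split a hypothetical 'Speaker: message' line into (speaker, message)."""
    speaker, sep, message = line.partition(": ")
    if not sep or not speaker.strip() or not message.strip():
        return None  # skip system notices, blank lines, malformed rows
    return speaker.strip(), message.strip()


def build_training_pairs(lines: Iterable[str], persona: str) -> list[dict]:
    """Pair each turn from someone else with the persona's reply that follows.

    Consecutive messages from the same speaker are merged first, so a burst
    of short texts counts as a single conversational turn.
    """
    turns: list[tuple[str, str]] = []
    for line in lines:
        parsed = parse_line(line)
        if parsed is None:
            continue
        speaker, message = parsed
        if turns and turns[-1][0] == speaker:
            turns[-1] = (speaker, turns[-1][1] + "\n" + message)
        else:
            turns.append((speaker, message))

    examples = []
    # After merging, adjacent turns always come from different speakers,
    # so any persona turn is a reply to someone else's preceding turn.
    for (_, prompt), (speaker, reply) in zip(turns, turns[1:]):
        if speaker == persona:
            examples.append({
                "messages": [
                    {"role": "user", "content": prompt},
                    {"role": "assistant", "content": reply},
                ]
            })
    return examples


def to_jsonl(examples: list[dict]) -> str:
    """Serialize one JSON object per line, a common fine-tuning input shape."""
    return "\n".join(json.dumps(e, ensure_ascii=False) for e in examples)
```

Fed a short log such as `Anna: Are you watching the match?`, `Tom: Obviously.`, `Tom: We’re losing, naturally.`, `Anna: Typical.`, `Tom: Don’t start.` with `persona="Tom"`, it yields two examples, the first pairing Anna’s question with Tom’s merged two-line reply. Note what the sketch leaves out: nothing here checks consent, which is exactly the gap the paragraph above describes.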

The Therapist’s Dilemma

Clinicians watching the trial noticed two camps. Group A used the bot as a bridge, talking through unresolved arguments and then tapering off naturally. Group B never logged off; their grief froze in amber, replaying the same daily check-ins. Therapists now debate whether griefbots extend mourning or arrest it. Some warn that prolonged interaction may delay acceptance of the death itself. Others counter that traditional grief counseling can also take years, and that a non-judgmental AI ear can coexist with therapy. The profession is split, and insurers haven’t decided whether to fund bot companions or refuse to cover them.

Scams, Hacks, and Digital Ouija

Where there’s emotion, there’s money. Scammers already sell fake griefbots on dark-web forums, promising to resurrect celebrities or ex-lovers for a fee. Victims hand over intimate data, receive a shoddy chatbot, and only realize the con when the bot asks for gift cards. Security researchers warn that voice-cloning tech can now fake a dead relative’s tone from as little as three seconds of audio. Surveillance is the next worry: once your loved one’s voice is in the system, who else is listening? Families report nightmares of hacked bots spewing private memories at strangers.

Choosing Connection Over Captivity

So how do we keep the comfort without becoming prisoners of our own nostalgia? Start with clear boundaries. Set an expiration date for the bot—say, one year—then archive the data. Involve a therapist in the decision to launch or sunset the AI. Use open-source platforms that let families delete data at will. Finally, talk about it. The stigma around griefbots mirrors early skepticism about online dating; honest conversation normalizes the tech and exposes its pitfalls. If you’re facing this choice, ask yourself: am I inviting the dead to walk with me, or asking them to carry me? The answer could save your future.
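The expiration-date advice is easy to state and easy to forget, so here is a minimal sketch of how a family or platform might encode it, assuming a hypothetical `GriefbotPolicy` record agreed on with a therapist. The one-year lifetime mirrors the example above; the 30-day “winding-down” warning window and the status names are illustrative assumptions, not features of any existing griefbot service.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class GriefbotPolicy:
    """Hypothetical sunset policy agreed on by the family and a therapist."""
    launched: date
    lifetime_days: int = 365   # "say, one year"
    warning_days: int = 30     # assumed heads-up period before the bot goes quiet

    @property
    def expires(self) -> date:
        """The day the bot stops responding and its data moves to an archive."""
        return self.launched + timedelta(days=self.lifetime_days)

    def status(self, today: date) -> str:
        """Return 'active', 'winding-down', or 'archive' for the given day."""
        if today >= self.expires:
            return "archive"
        if today >= self.expires - timedelta(days=self.warning_days):
            return "winding-down"
        return "active"
```

A bot launched on 1 January 2025 would expire on 1 January 2026 and enter its winding-down window on 2 December 2025. Making the policy immutable (`frozen=True`) is a deliberate choice in this sketch: extending the deadline should be a conscious new decision, ideally with the therapist in the room, rather than a quiet edit.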