A school shooting sparks a rush to deploy Israeli AI threat detection—raising urgent questions about privacy, ethics, and who watches the watchers.

Two children lost. A nation stunned. And within hours, a sleek new AI platform named GIDEON was pitched on cable news as the silver bullet to stop the next shooter. Is this the future of public safety—or the quiet birth of a surveillance state? Let’s unpack what’s happening, why it matters, and what could go wrong.

The Pitch: GIDEON Lands on Fox News

Within hours of the Minneapolis school shooting, former Israeli commando Aaron Cohen sat across from a prime-time host and unveiled GIDEON, an AI system that never sleeps, never blinks, and, by his account, can spot a killer before he pulls the trigger.

Cohen described a 24/7 web-scraping engine that sifts social media, forums, and chat rooms for threat language using what he called an “Israeli-grade ontology.” When the algorithm senses danger, it pings local cops in real time. A dozen agencies, including a 2,700-officer department in the Northeast, are already signed up for next week’s rollout.

The timing felt almost cinematic. Grief was fresh, fear was raw, and the promise of a digital guardian angel landed like a lifeline. But Cohen’s confident smile didn’t answer the obvious questions: who decides what counts as a threat, and what happens when the machine gets it wrong?

The Backlash: Privacy Advocates Sound the Alarm

Civil-liberties groups didn’t wait for the commercial break to push back. The ACLU fired off a warning that mass data scraping without warrants is a Fourth Amendment gut punch. False positives, they argue, could brand angry teenagers—or political activists—as would-be shooters.

Critics also point to the foreign-tech angle. An Israeli firm holding sensitive data on American citizens raises national-security concerns. What if the algorithm carries baked-in biases? What if it’s hacked? And once the system is live, what’s to stop mission creep from school safety to protest monitoring?

Online, the debate split into two camps: parents desperate for any tool that might save their kids, and privacy hawks who see Minority Report playing out in real life. The hashtag #StopGIDEON started trending within hours, alongside #ProtectOurKids. Same tragedy, opposite fears.

Voices of Dissent: Greenwald, Rossmann, and the Grassroots

Investigative journalist Glenn Greenwald announced a live episode of System Update devoted to what he calls the “problem-reaction-solution playbook.” His thesis: tragedies grease the wheels for surveillance expansions that would never pass in calmer moments. Expect deep dives into dark-money lobbying and the Online Safety Act.

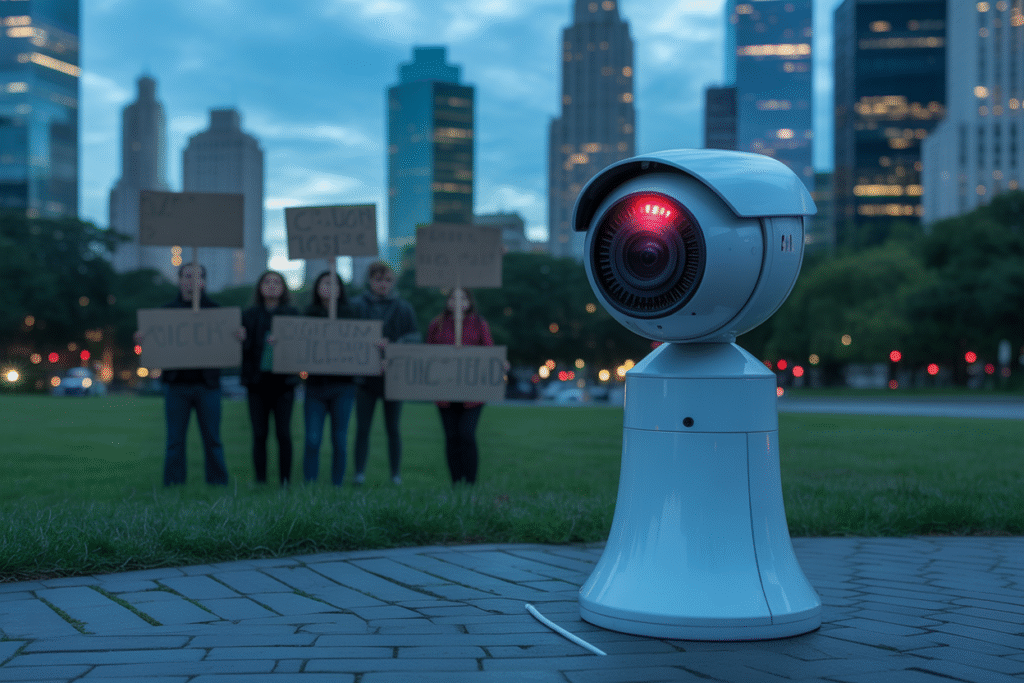

Meanwhile, in Austin, YouTuber Louis Rossmann led a sweaty, sign-waving protest outside City Hall. The target: a $2 million plan to install AI camera towers in public parks. Protesters waved #Clippy signs—half joke, half warning—arguing that warrantless tracking in green spaces normalizes a culture of suspicion.

Rossmann’s livestream drew thousands of viewers who peppered the chat with questions about data storage, facial recognition, and whether the cameras were sourced from Chinese manufacturers. The council vote is still pending, but the optics were clear: everyday citizens are no longer willing to trade privacy for promises of safety without a fight.

What Happens Next: Risks, Regulations, and Your Role

So where does this leave us? GIDEON’s pilot launches next week, Austin’s cameras could be approved any day, and Congress is quietly drafting bills that would fund even broader AI threat-detection grants. The stakes feel personal because they are.

Here’s what to watch:

• Transparency reports: Will agencies publish false-positive rates?

• Oversight boards: Who audits the algorithm—and how often?

• Data retention: How long does a teenager’s angry tweet stay in the system?

• Scope creep: Will the same tech that hunts shooters start flagging protest plans?
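
Why do false-positive rates top that list? A rough back-of-the-envelope sketch in Python shows the arithmetic. Every number here is hypothetical and picked only for illustration. None of it comes from GIDEON, its vendor, or any agency, and the function name `flag_breakdown` is made up for this example.

```python
def flag_breakdown(population, true_threats, sensitivity, false_positive_rate):
    """Split a screening system's alerts into real and false ones.

    sensitivity: share of genuine threats the system flags.
    false_positive_rate: share of harmless accounts it flags anyway.
    """
    harmless = population - true_threats
    true_alerts = true_threats * sensitivity
    false_alerts = harmless * false_positive_rate
    total_alerts = true_alerts + false_alerts
    # Precision: the share of all alerts that point at a genuine threat.
    precision = true_alerts / total_alerts if total_alerts else 0.0
    return true_alerts, false_alerts, precision


# Hypothetical inputs: one million monitored accounts, ten genuine threats,
# a system that catches 99% of them and wrongly flags 1% of everyone else.
true_alerts, false_alerts, precision = flag_breakdown(
    population=1_000_000,
    true_threats=10,
    sensitivity=0.99,
    false_positive_rate=0.01,
)

print(f"Real alerts:  {true_alerts:.1f}")
print(f"False alerts: {false_alerts:.1f}")
print(f"Share of alerts that are real: {precision:.2%}")
```

Under those made-up assumptions, the system raises about 10,000 false alerts for roughly 10 real ones, so only about one alert in a thousand points at a genuine threat. Real-world rates could be better or worse, which is exactly why published figures matter.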

Your voice still counts. Call your city council, ask your police chief the hard questions, and demand that any AI surveillance come with ironclad safeguards. Because once the cameras go up, they rarely come down.

Ready to dig deeper? Share this article, tag your reps, and keep the conversation alive.