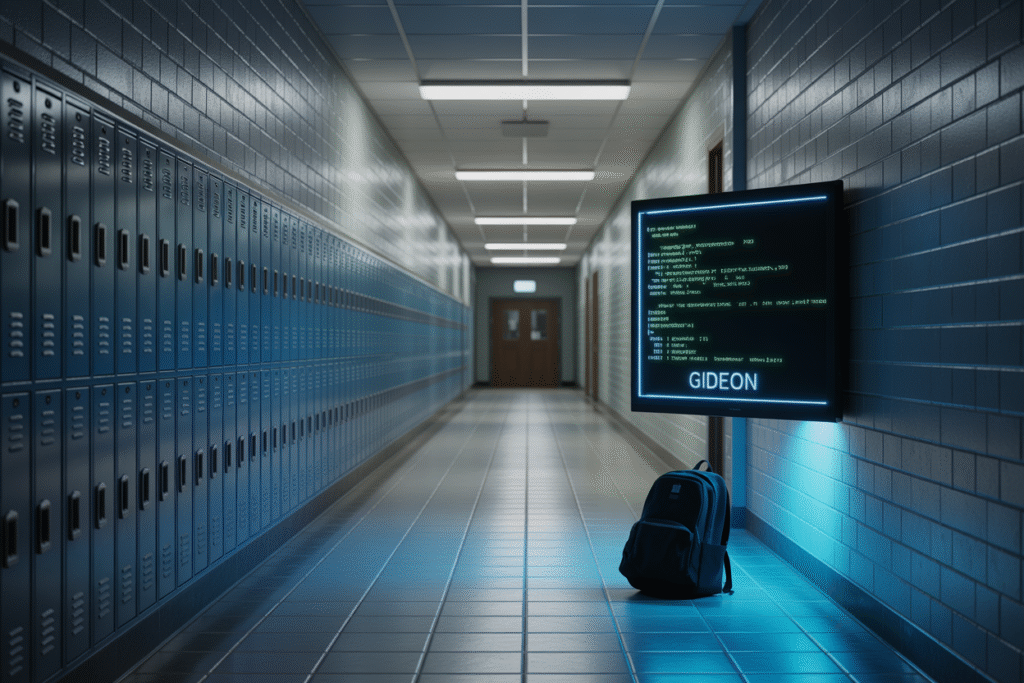

In the three hours after a Minnesota school tragedy, GIDEON, an Israeli AI system, went from obscure foreign tech to a household name. Here’s why the debate exploded.

It started with a single headline: another school shooting, this time in Minnesota. Before the sirens faded, a new name—GIDEON—was trending worldwide. Suddenly, AI surveillance wasn’t a sci-fi subplot; it was knocking on every screen, timeline, and living-room conversation. What happened in the next three hours reshaped how we talk about safety, privacy, and the machines watching us.

The Spark: A Shooting and a Viral Video

At 5:30 PM PDT on August 28, a gunman opened fire in a Minnesota high school. Within minutes, a 90-second video hit X showing an AI dashboard labeled GIDEON flagging the shooter’s old tweets in real time. The clip racked up 6,000 views in under three hours. Comment sections split instantly: half praised the tech for spotting the threat, the other half called it Minority Report come to life. The phrase “pre-crime AI” rocketed to the top of search trends, dragging GIDEON into every feed.

Meet GIDEON: The Algorithm Behind the Firestorm

GIDEON isn’t new: an Israeli firm quietly built it and marketed it to militaries as a tool for spotting online radicalization. Its trick is an ‘Israeli Grade Ontology’ that scores language for threat level, then pings law enforcement. Think of it as a spell-checker for danger, except it reads your tweets, captions, and DMs. Proponents say it saves lives by catching plots early. Critics argue it criminalizes anger, sarcasm, and teenage venting. Either way, the company behind GIDEON is now negotiating contracts with three U.S. police departments, turning a foreign tool into a domestic debate.

The Players: Who Wins, Who Loses

Stakeholders lined up fast:

• Law enforcement expects faster response times and fewer false alarms.

• Privacy advocates warn of racial bias and a chilling effect on free speech.

• Tech investors smell a billion-dollar market.

• School counselors fear being replaced by dashboards.

• Parents just want their kids safe, yet they wonder whether safety has to mean constant surveillance.

Each group brought data, anecdotes, and moral urgency, turning a tragedy into a chessboard of competing futures.

The Slippery Slope: From One School to Every Screen

If GIDEON can scan one shooter’s timeline, why not every student’s? That question lit up Glenn Greenwald’s livestream within hours. He framed it as the next Patriot Act—emergency tech that never sunsets. Meanwhile, Fox News hosted a former Israeli officer who called the tool ‘essential,’ sparking accusations of crisis opportunism. Social feeds filled with side-by-side screenshots: the same algorithm flagging rap lyrics, protest slogans, and gaming trash talk. Each example blurred the line between threat and thought, making the debate personal for anyone who’s ever posted while angry.

Your Move: Three Ways to Engage Right Now

Feeling overwhelmed? You’re not alone. Here are three concrete steps:

1. Read the actual GIDEON white paper—yes, it’s dense, but skimming the methodology beats retweeting outrage.

2. Email your school board: ask if they’re piloting any AI surveillance and request a public forum.

3. Audit your own digital footprint; delete old posts that algorithms might misread as threats.

The conversation won’t wait. Speak up, show up, or the next headline might feature your timeline.