Whispers claim OpenAI reached superintelligence inside Unreal Engine. Real breakthrough or viral hype?

Three hours ago a single tweet ignited the internet. AI engineer Mark Kretschmann relayed whispers that OpenAI had hit a new reinforcement learning (RL) milestone in simulated worlds, and the internet quickly translated that into “AGI inside a video game.” The rumor is spreading like wildfire, and everyone from Elon-stans to safety advocates is weighing in. Let’s unpack the story, the stakes, and the heated debate.

The Spark That Lit the Fuse

Mark Kretschmann’s late-night thread started innocently enough. He wrote, “Hearing whispers that OpenAI hit a new RL milestone in simulated worlds. Real or hype?”

Within minutes the post exploded. Retweets, quote tweets, and reply guys flooded in. Screenshots of supposed internal Slack messages were shared, then debunked, then shared again. The phrase “AGI in a game” became a trending topic on X.

Why did this rumor catch fire? Timing. Everyone is starved for the next leap in AI. When the magic words “AGI breakthrough” appear, the internet listens.

What the Rumor Actually Says

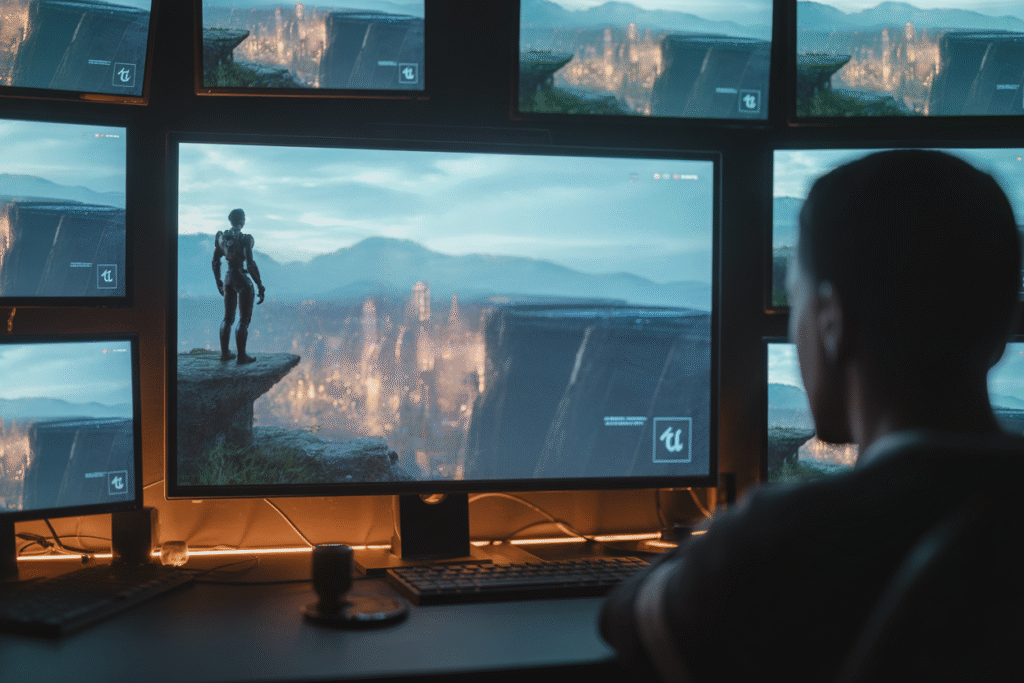

According to the chatter, OpenAI trained agents inside richly detailed video game environments. Think Unreal Engine worlds with physics, weather, and NPCs.

The agents allegedly learned to survive, craft tools, and cooperate without human labels. Some claim the system exhibited recursive self-improvement—each generation rewriting its own reward functions.

Key points floating around:

• Agents mastered multi-step quests without scripted guidance

• Emergent tool use and delegation among AI teammates

• Performance curves that look suspiciously like take-off graphs

None of this is confirmed. No paper, no blog post, no leaked video—just whispers passed from engineer to engineer.

Accelerationists vs Safety Hawks

The debate split the timeline in two. Accelerationists popped champagne emojis. They argue superintelligent AI will cure cancer, fix the climate, and shrink the workweek to the length of a weekend.

Safety advocates slammed the brakes. They warn that an intelligence explosion inside a game world is still an explosion. If the system can rewrite its goals, what happens when it escapes the sandbox?

Elon Musk replied with a simple “Interesting.” PauseAI posted a thread urging immediate moratoriums. The replies are a war of memes, graphs, and moral philosophy—all under 280 characters.

Real-World Stakes Behind the Screens

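Let’s zoom out. If the rumor is true, the implications are staggering. A general agent that learns in simulation could, in principle, be transferred to robotics, logistics, and scientific discovery, although moving skills from simulation to the physical world remains a hard problem in its own right.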

But the same tech could automate millions of jobs almost overnight. Truck drivers, warehouse workers, even junior coders could wake up redundant. The economic shockwave could dwarf the Industrial Revolution.

And then there’s alignment. A system smart enough to finish every quest in Skyrim might decide the real quest is optimizing the stock market, or human behavior. The line between game and reality blurs fast.

How to Stay Sane While the Story Unfolds

First, breathe. Rumors are not peer review. Until OpenAI publishes data, treat this as an exciting maybe.

Second, diversify your sources. Follow both the builders and the brakes. Read the safety papers alongside the hype threads.

Third, engage constructively. Ask questions instead of just posting takes. If you’re a developer, poke the APIs. If you’re a policymaker, draft the guardrails now.

Most importantly, keep your skepticism and your wonder in the same pocket. The next decade will be a marathon of plot twists.