Anthropic flips the training switch on by default, and your chats may soon be model food.

Yesterday, Anthropic updated Claude’s consumer terms. Existing users have until September 28 to make a choice, and from then on every new or resumed conversation on the Free, Pro, or Max plans can be used to train the next version of Claude. Unless you flip the small toggle tucked under the big Accept button, you’ve said yes. Let’s unpack what changed, why it matters, and how to claw back your data before it’s too late.

The Switch Nobody Asked For

Picture this: you open Claude to draft a quick email, and unbeknownst to you, that email is now part of Anthropic’s training buffet. The company slipped the change into a routine policy refresh, swapping the old opt-in for an opt-out that’s pre-checked.

They even borrowed one of the oldest tricks in the book: a dark pattern. The big Accept button dominates the pop-up, while the training toggle sits below it in smaller print, already switched On. Click Accept without reading the fine print, and every future prompt is fair game.

Worse, your history isn’t entirely off-limits. Old chats you never touch again stay out of training, but resume one and it counts as a new session. And opting in stretches retention from 30 days to five years, which means your brainstorming sessions, code snippets, and late-night therapy prompts could sit on a server for half a decade, waiting to shape the next model.

Why Even “Ethical” AI Needs Your Words

On paper, Anthropic sells itself as the safety-first alternative to OpenAI. They publish alignment papers, run red-team simulations, and preach constitutional AI. So why the sudden hunger for user data?

Simple—scale. Bigger models need broader examples. Your grocery list, your Python bug, your breakup poem—all of it helps Claude sound more human. The company claims the data is “de-identified,” yet researchers have shown that even anonymized text can be re-identified with surprising ease.

There’s also the competitive angle. OpenAI, Google, and Meta already slurp oceans of user text. If Anthropic stays pure, its models risk falling behind. In the race to artificial general intelligence, ethics can feel like a luxury.

How to Opt Out in 60 Seconds

Ready to slam the brakes? Here’s the fastest route:

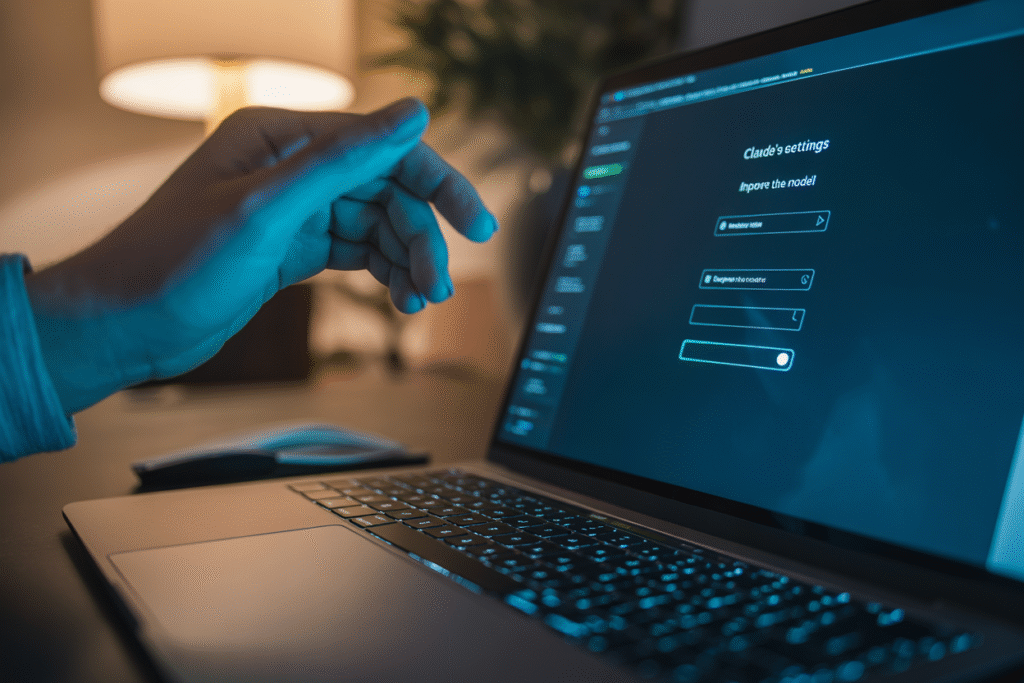

1. Open Claude and click your profile icon.

2. Choose Settings → Privacy.

3. Find “Help improve Claude” and toggle it OFF.

4. Hit Save, then refresh the page.

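That stops new and resumed chats from being used, but what about data already collected? Anthropic says conversations you delete won’t be used for future training, but switching the toggle off won’t pull your data out of models already trained or training runs already under way. Some users report success emailing privacy@anthropic.com with a data-deletion request; others receive boilerplate replies. Until there’s a one-click purge, assume anything you’ve already shared remains in the vault.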

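Pro tip: if you use Claude through the API, or on a Claude for Work, Education, or Gov plan, this change doesn’t apply; those services fall under Anthropic’s Commercial Terms, which this update doesn’t touch. Claude Code, however, is covered when you sign in with a Free, Pro, or Max account.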

The Bigger Picture—Privacy vs Progress

This isn’t just about Claude. It’s the latest domino in a long line of privacy trade-offs. Every time we click Accept, we inch closer to a world where personal text trains systems that may one day replace the very writers who fed them.

Yet opting out isn’t always practical. Freelancers rely on AI for speed; students lean on it for clarity. The real fix is systemic: stronger default protections, transparent retention windows, and easy deletion rights. Until regulators catch up, the burden sits on our shoulders.

So ask yourself—do you want your words immortalized in a language model you don’t control? If the answer is no, take those 60 seconds today. Future you might thank present you.