Activists just used AI to name masked ICE officers—sparking cheers, death threats, and a national shouting match over privacy, power, and the future of surveillance.

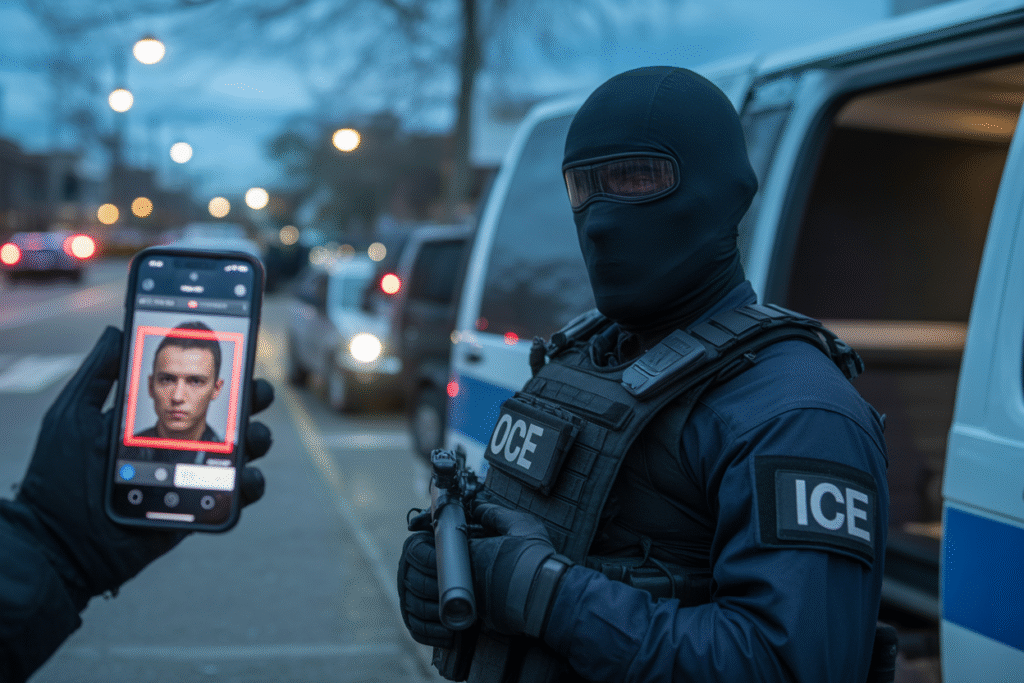

Imagine scrolling your feed and seeing a neighbor’s face labeled “ICE Agent #12.” That’s not dystopian fiction; it happened yesterday. A Netherlands-based activist fed arrest footage into facial-recognition AI and published the matches. Now lawmakers, officers, and privacy hawks are in open combat. Below, we unpack how a weekend experiment turned into the most explosive AI ethics story of the year.

The Spark: How One Tweet Lit the Fuse

Dominick Skinner, a Netherlands-based coder, watched a viral ICE arrest clip and wondered why the officers wore balaclavas. He ran the footage through PimEyes, a consumer-grade facial search engine, and within minutes had a probable name. Instead of keeping it private, he posted the match on X with the caption “Accountability starts here.” The tweet hit 50k likes in three hours. By morning, activists had crowdsourced enough donations to spin up a site called ICE List. Twenty officers were named before lunch. The story rocketed from fringe forums to POLITICO’s front page before most people had their second coffee. What began as a lone coder’s curiosity became a national referendum on AI-powered doxing.

Inside the Tech: Cheap AI, Big Consequences

PimEyes isn’t some shadow-military software. It costs $29.99 a month and is open to anyone, stalkers and HR recruiters alike. Skinner simply uploaded still frames, let the algorithm chew on them, and cross-checked the top hits against LinkedIn profiles and driver-license databases. The process is shockingly low-effort: crop, click, confirm. Accuracy hovers around 60%, meaning roughly four out of every ten names could be wrong. Yet that margin of error hasn’t slowed the momentum. Each new match feels like a magic trick to supporters and a loaded gun to critics. When the barrier to outing someone is a subscription and a hunch, the old rules of privacy evaporate faster than you can say “terms of service.”
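To put that error rate in perspective, here is a rough back-of-the-envelope sketch in Python. It takes the 60% accuracy figure and the twenty officers named on the first morning, and it assumes every match is an independent coin flip with the same odds. That independence assumption is ours, not something the tool or the activists have claimed, and the function names are purely illustrative.

```python
from math import comb


def expected_wrong(n_named: int, accuracy: float) -> float:
    """Average number of misidentified people among n_named matches."""
    return n_named * (1.0 - accuracy)


def prob_at_least_k_wrong(n_named: int, accuracy: float, k: int) -> float:
    """Chance that k or more of the n_named matches are wrong.

    Assumes each match is independent and equally accurate (a simple
    binomial model, which is an assumption made for illustration).
    """
    p_wrong = 1.0 - accuracy
    # Sum the probabilities of exactly 0, 1, ..., k-1 wrong matches.
    p_fewer = sum(
        comb(n_named, i) * p_wrong**i * accuracy ** (n_named - i)
        for i in range(k)
    )
    return 1.0 - p_fewer


if __name__ == "__main__":
    named, accuracy = 20, 0.60  # figures quoted in the article
    print(f"Expected wrong names: {expected_wrong(named, accuracy):.1f}")
    print(f"Chance at least one is wrong: {prob_at_least_k_wrong(named, accuracy, 1):.4%}")
    print(f"Chance at least five are wrong: {prob_at_least_k_wrong(named, accuracy, 5):.2%}")
```

Under those assumptions, a list of twenty names would contain about eight wrong ones on average, and it is close to certain that at least one person on it has been misidentified.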

Voices in the Crossfire: Who’s Cheering, Who’s Terrified

Supporters call it digital whistleblowing. They argue masked agents can hide excessive force behind anonymity, and AI simply levels the field. Civil-rights lawyers see a goldmine of evidence for wrongful-deportation suits. Meanwhile, ICE families are receiving death threats within minutes of publication. Officers describe pulling into their driveways to find protest drones overhead and children asking why strangers are screaming at the door. Republican lawmakers label the project “domestic terrorism,” while progressive Democrats file bills demanding every federal agent wear body cams—ironically increasing the footage pool future activists could mine. Tech ethicists warn of a feedback loop: the more outrage, the more clicks, the more incentive to keep unmasking.

Legal Minefield: Can the Law Catch Up?

Right now, no federal statute explicitly bans AI-powered doxing of government employees. The closest analog is 18 U.S.C. § 119, which criminalizes publishing restricted personal information, such as the home addresses of certain federal officers and their immediate families, with intent to threaten or intimidate, and proving that intent is tricky. Enter the VISIBLE Act, introduced last week, which would make publishing an officer’s identity a felony if it leads to harm. Critics call it a gag order disguised as safety. First Amendment scholars counter that public servants acting in public spaces have diminished privacy expectations. Courts have yet to rule whether algorithmic identification is speech or a weapon. Until precedent arrives, every new post sits in a gray zone where legality depends on the judge’s morning coffee.

Your Move: How to Engage Without Adding Fuel to the Fire

Feeling torn? That’s normal. Start by asking three questions before sharing any unmasking post: Is the ID verified by at least two sources? Does the publication prevent harm or merely invite it? Am I amplifying evidence or spectacle? If you’re uncomfortable, redirect energy toward policy—call your reps about facial-recognition moratoriums or donate to legal defense funds for wrongly deported migrants. Tech workers can push employers to audit AI tools for bias. Parents can demand schools teach digital consent the same way they teach stranger danger. The genie is out of the bottle, but we still choose how loudly we cheer—or how quickly we build safeguards. Your next click shapes the story.