While we argue about rogue superintelligences, real-world AI weapons are already reshaping war, and too few people are talking about it.

Scroll through any AI ethics thread and you’ll find heated warnings about runaway superintelligences. Yet a quieter, more urgent story is unfolding on battlefields and in defense labs. Nathan J. Robinson’s viral post just yanked the spotlight onto this blind spot, and the internet can’t look away.

The Sci-Fi Distraction

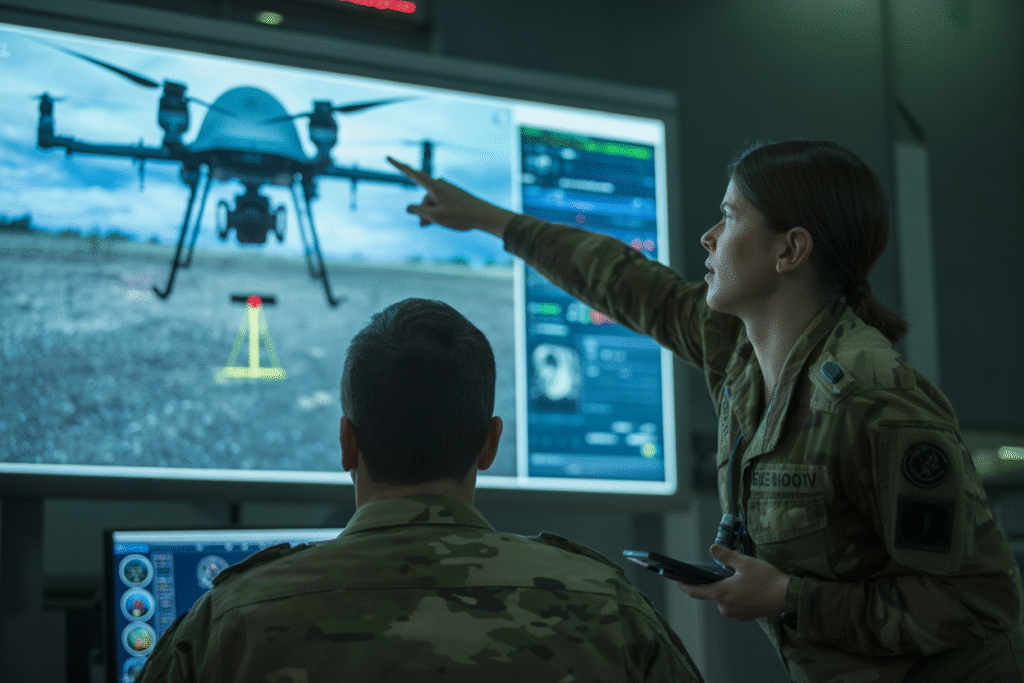

Picture two researchers on a podcast. One frets about paperclip maximizers; the other asks, “But what about the drone that already decides who lives or dies?” That second question is the one most panels dodge.

Robinson’s argument is simple: every hour spent debating far-future alignment is an hour not spent regulating autonomous weapons that exist today. He calls it “ethics theater” — dramatic, well-funded, and conveniently removed from current battlefields.

The result? A public that fears Skynet while militaries quietly deploy AI targeting systems. The gap between perception and reality has never been wider.

Real Weapons, Real Stakes

Israel’s Lavender system reportedly marked as many as 37,000 Palestinians in Gaza as suspected militants and potential bombing targets during the first weeks of the war. Turkey’s Kargu drones can autonomously track and strike. These aren’t prototypes; they’re operational.

Key risks:

• Lowered threshold for conflict — when machines bear the moral load, leaders pull triggers faster.

• Accountability vacuum — who faces trial when an algorithm misidentifies a wedding as a weapons convoy?

• Escalation spiral — adversaries race to match autonomy, shrinking decision windows from minutes to milliseconds.

Meanwhile, defense contractors brand these tools as “precision” and “cost-saving,” language that echoes Silicon Valley pitch decks more than military doctrine.

What Happens Next

The next 24 months will decide whether military AI becomes normalized or stigmatized like chemical weapons. Three forces are colliding:

1. Regulation: The EU’s AI Act excludes systems used exclusively for military and defense purposes, but pressure is mounting for a binding global treaty.

2. Whistleblowers: Engineers inside major firms are leaking memos showing internal alarm over lethal autonomy.

3. Public Opinion: Viral clips of drone strikes spark outrage faster than press releases can spin them.

So, what can you do? Start by demanding transparency from elected officials. Ask which AI systems your tax dollars fund. Share verified reports. The loudest voice in this debate shouldn’t be a general or a CEO — it should be yours.