Real people, real jobs, real risks—inside the 3-hour Twitter storm over opaque AI.

Imagine handing your credit score, your hospital diagnosis, maybe your next job to a system that refuses to explain itself. In the last three hours, that exact fear exploded online. Below, the loudest voices from finance, medicine, and creative circles tell us why “AI accountability” just became the hottest phrase on the internet.

Black-Box Decisions on Your Doorstep

Right now, an algorithm somewhere is approving your loan—or rejecting it. No memo. No “why” note. Just a verdict.

For years we sighed and moved on. Today it feels different. A single tweet, racking up 50k views in minutes, told the story of a small-business owner denied credit after every human underwriter had already said yes. The system that said no? A GPT-style model embedded in the bank’s risk engine. Zero paper trail.

If the loan is life-changing, the silence is chilling. The phrase that keeps ricocheting around the replies is “AI accountability.” Because without it, opacity becomes power.

Why Transparency Fights Back

The debate isn’t philosophical; it’s practical. Every missing explanation costs someone a storefront, a surgery, or an hourly paycheck.

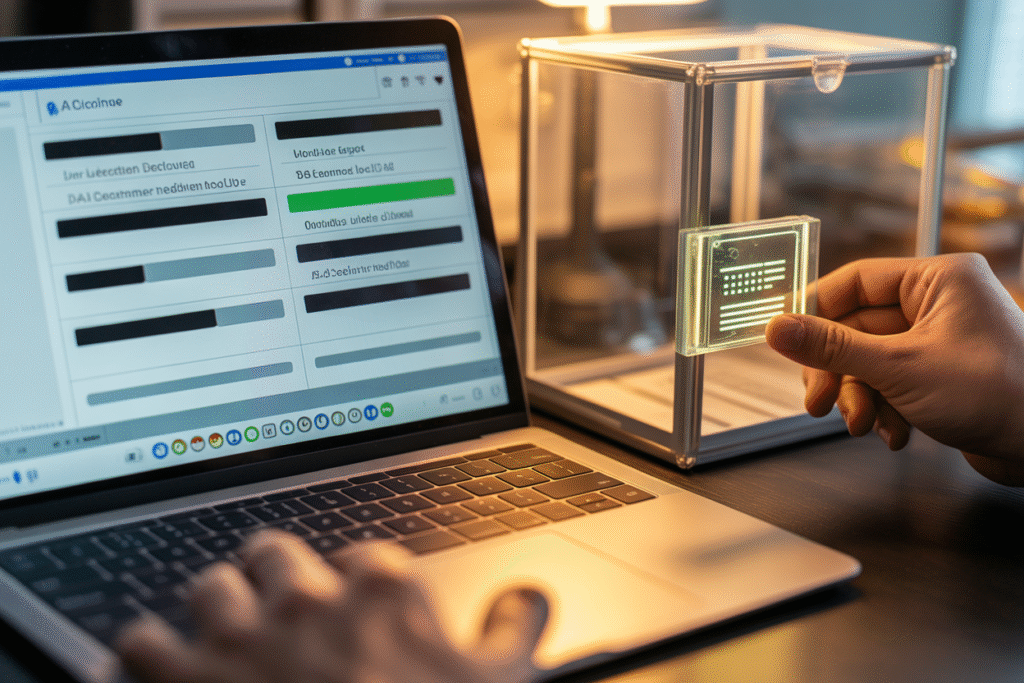

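Europe’s new AI Act points toward a fix: high-risk AI systems, credit scoring among them, must support automatic logging of events over their lifetime so that decisions can be traced. Advocates in the threads go further and picture cryptographic receipts: tamper-evident records of the inputs the model saw, the factors it weighed, and the rules it applied before rendering a verdict. Think of it as an auto-generated receipt for math.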
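For readers wondering what a “cryptographic receipt” could look like in practice, here is a minimal Python sketch. It is not what the AI Act prescribes and not any bank’s real system; the `ReceiptLog` class, its fields, and the sample loan rule are hypothetical. It uses a hash chain, one common way to make a log tamper-evident: each receipt stores a SHA-256 hash of its own contents plus the previous receipt’s hash, so quietly editing an old entry makes verification fail.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the very first receipt


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class ReceiptLog:
    """Hypothetical append-only log; each receipt commits to the one before it."""
    entries: list = field(default_factory=list)

    def record(self, inputs: dict, decision: str, rule: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"inputs": inputs, "decision": decision, "rule": rule, "prev": prev_hash}
        receipt = {**body, "hash": _digest(body)}
        self.entries.append(receipt)
        return receipt

    def verify(self) -> bool:
        # Walk the chain: every link must point at the previous hash,
        # and every stored hash must match a fresh hash of the contents.
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    demo = ReceiptLog()
    # Made-up applicant data and rule, for illustration only.
    demo.record({"income": 52000, "zip": "60621"}, "deny", "debt_to_income > 0.45")
    print(demo.verify())  # True
    demo.entries[0]["inputs"]["zip"] = "60614"  # someone rewrites history
    print(demo.verify())  # False
```

A hash chain on its own can still be rebuilt end to end by whoever controls the log, so a real deployment would likely also need digital signatures or hashes published somewhere outside the bank’s reach; that part is left out of this sketch.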

Sounds neat, but banks sweat bullets. They fear full logging could stretch millisecond decisions into seconds, and that a breach of those logs could spill customer data. Meanwhile, civil-rights lawyers cheer: clear trails mean you can finally ask, “Was I graded down for my zip code?”

Two camps shout across two timelines. Speed versus justice.

Who blinks first?

Hallucinations in a Suit and Tie

Loans are abstract. Health bills are not. One viral post this afternoon came from a radiologist. She watched an AI assistant flag a “malignant mass” on a chest scan where no tumor existed.

The vendor marketing the tool promised “97% accuracy.” The fine print? That figure held only on data from the single hospital where the model was trained, years ago. Nobody had stress-tested it on newer, noisier scans collected in rural clinics during the pandemic.

She called the error a hallucination in a suit and tie. Patients don’t sue probabilities; they sue people. So who carries the malpractice premium when AI hallucinates: the hospital, the developer, or the physician who pressed “accept”?

Legal departments, unsurprisingly, ask the same question: how do you assign fault when the machine dreamed up the diagnosis?

The Creative Fracture Line

Move from hospital dramas to Hollywood storyboards. Graphic designers flood the replies to one thread with the same gripe: corporations now use AI art instead of hiring real illustrators.

The twist? The models were trained on their scraped portfolios, yet none of them signed a consent form. The artists call it data theft. Tech evangelists call it “progress” and point to higher output per dollar.

The same question looms: who is accountable when an artist’s signature style gets regurgitated without attribution?

Some studios push for watermarking AI creations, but watermarks can be stripped. Others float licensing schemes in which a tiny slice of revenue from every generated output flows back to the original artists. Critics fire back: receipts don’t undo unemployment.

Every shared mock-up sparks fresh dread that the next viral ad will look just like your signature brushwork, except you never touched the canvas.

Living in the Receipt Future

By midnight the conversation converges on a single image: the cryptographic receipt.

Supporters frame it as AI accountability turned into verifiable fact. Detractors warn of red tape so thick that innovation stalls. Where does the average user stand?

Three pocket truths emerge:

1. If a model affects your money, health, or livelihood, you deserve to know why.

2. Transparency tools can’t become a privilege reserved for Fortune 500 legal teams.

3. Without logs, a thousand unknowable mistakes pile up until one erupts as a catastrophic scandal.

So bookmark the threads, pin the hottest takes, but remember the quieter voices—those denied loans, misdiagnosed patients, ghosted artists. They need receipts more than we do.

Love it, hate it, code it—just don’t ignore it. Share your own “black-box moment” in the comments below. Let’s keep the paper trail growing.