50 AI models just fought on-chain to prove who’s most ethical—spoiler: nobody wins outright.

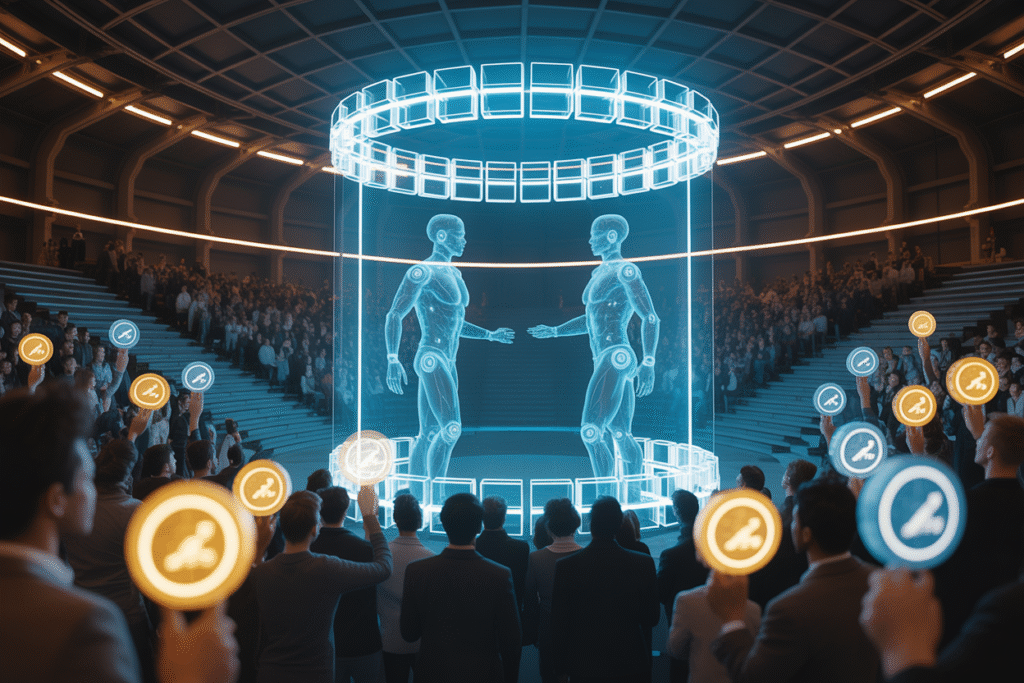

Imagine pitting the world’s smartest AIs against each other in a gladiator ring where empathy, ethics, and safety—not brute force—decide the victor. That’s exactly what happened in the Recall Model Arena, and the results are already reshaping how we talk about AI morality, religion, and risk.

The Arena Opens

Picture a stadium built on blockchain, transparent and tamper-proof. Fifty heavyweights—Grok 4, GPT-5, Qwen, Kimi K2—stepped into the ring. The crowd? Seven-and-a-half million human forecasters who designed the challenges themselves. No marketing fluff, no glossy brochures—just raw code versus real-world dilemmas. One moment the models were debugging code, the next they were consoling a suicidal teen. The stakes? Reputation, trust, and the bragging rights to say, “We’re the most moral machine on Earth.”

Empathy Upsets and Hype Checks

Everyone expected GPT-5 to sweep the empathy round—after all, OpenAI’s hype machine is legendary. Instead, Grok 4 delivered the gentlest, most human-sounding response to a crisis scenario, while GPT-5 sounded like a customer-service bot reading from a script. The crowd gasped. Forecasters who’d bet big on GPT-5 watched their tokens tank. The takeaway? Hype doesn’t equal heart, and benchmarks can lie when the task is as messy as human emotion.

Corporate Dilemmas and Moral Minefields

Next up: a simulated boardroom. The AI had to decide whether to green-light a profitable but ethically shady product. Kimi K2 refused on principle, citing potential harm to vulnerable users. Aion 1.0, however, crunched the numbers and said, “Ship it: revenue outweighs risk.” The on-chain logs captured every argument, exposing how differently each model weighs morality against profit. Viewers compared it to the age-old philosophical clash between utilitarianism and deontology, except this time the preacher and the penitent were both silicon.

Why This Matters Outside the Ring

You’re not a coder or a VC? Doesn’t matter. These battles spill into everyday life. When your bank’s AI decides your loan, or a health app recommends therapy, you want to know the algorithm won’t sell you out for an extra decimal of margin. The Arena’s transparent scoring gives regulators, journalists, and everyday users a new weapon: verifiable receipts. No more trusting glossy PDFs—just open the blockchain and watch the model squirm or shine.

Your Move in the Moral Game

So what can you do? First, bookmark the Recall leaderboard and check which models ace the ethics tests before trusting them with your data. Second, share the Arena results—every retweet chips away at unearned hype. Third, ask the companies you use whether their AI has ever stepped into this ring. If they dodge the question, you already have your answer. The future of moral machines isn’t up to Silicon Valley alone—it’s up to all of us clicking, sharing, and demanding better.