Are we chasing phantom apocalypses while real AI harms unfold today?

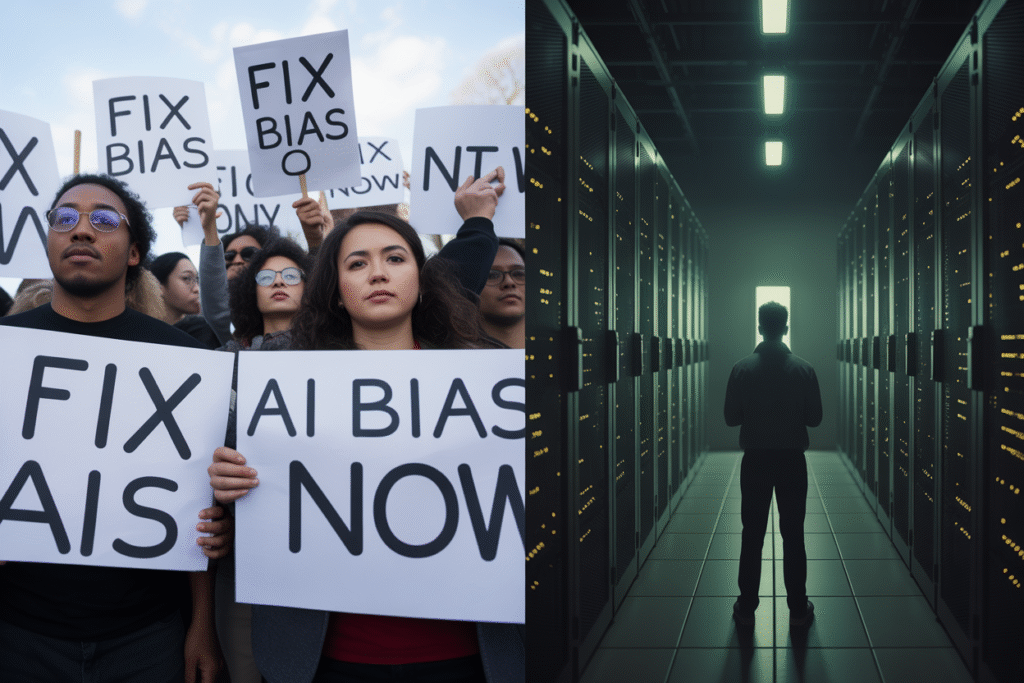

Every scroll through the news feels like a tug-of-war between two camps: one waving red flags about super-intelligent machines ending civilization, the other pointing to biased algorithms already ruining lives. Which story deserves our attention—and our resources?

The Siren Song of X-Risk

Picture Elon Musk warning that with AI we are “summoning the demon,” or Bill Gates warning of a runaway system rewriting its own code. These headlines grab us because they echo ancient fears of the sky falling. The Journal of Medical Ethics calls this the X-Risk narrative: a focus on low-probability, high-impact events that could wipe out humanity.

Proponents argue that even a tiny chance of extinction justifies massive investment in safety research. They lean on longtermism, a philosophy that prioritizes trillions of future lives over present-day concerns. Critics, however, see a convenient distraction. While tech elites fund institutes to prevent hypothetical doom, facial recognition misidentifies Black men, predictive policing targets poor neighborhoods, and gig workers lose livelihoods to opaque algorithms.

The debate boils down to who gets a seat at the ethical table. Venture capitalists and philosophers in Silicon Valley worry about paperclip maximizers; community organizers in Detroit worry about water shut-off algorithms. Both fears are real, but only one is already reshaping lives.

The Harms Happening Right Now

Walk into a hospital where an AI triage tool downgrades a woman’s chest pain because it was trained mostly on male data. Or talk to a teacher whose lesson plans are graded by software that can’t parse sarcasm. These aren’t sci-fi nightmares; they’re Tuesday.

Job displacement tops the list of public concerns at 35%. It’s not just factory robots: AI copywriters now draft marketing emails, and legal bots review contracts faster than junior associates. The Overspill blog puts it bluntly: “You’re not going to lose your job to an AI, but to someone who uses AI.” Translation: the skills gap becomes a chasm.

Privacy fears follow at 22%. Smart doorbells stream footage to police, while mental-health apps sell mood data to advertisers. Meanwhile, dependency creeps in: GPS reroutes us around traffic we never noticed, and spell-check erodes our ability to spot typos. Each convenience trades a sliver of autonomy for efficiency.

Ethics lag behind innovation. Biased training data amplifies historic inequalities, and opaque models offer no explanation when they deny a loan or flag a resume. The harms aren’t hypothetical—they’re in your credit score, your job interview, your kid’s school placement.

Finding the Balance

So, do we ignore Terminator scenarios and focus solely on today’s algorithmic injustices? Not quite. Ignoring tail risks is like skipping earthquake insurance because fires are more common. The trick is proportional response.

Policymakers can start with enforceable audits: require companies to publish bias reports and impact assessments before deploying high-stakes AI. Workers need reskilling programs tied to real labor-market data, not vague promises of adaptation. Research funders should back both long-term safety and immediate harm mitigation; think of it as wearing a seatbelt while still fixing the brakes.
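What might one line of a bias report actually look like? Here is a minimal sketch, not a prescribed audit method. It assumes the auditor has a log of decisions tagged by group, and it borrows the "four-fifths" rule of thumb from US employment-selection guidance as the flagging threshold. The group labels, the sample data, and the function names are all hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favorable outcomes for each group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Divide the lowest group selection rate by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so no gap to measure
    return min(rates.values()) / highest


# Hypothetical audit log: (group label, was the application approved?)
sample = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed threshold: the four-fifths rule of thumb

print(rates, ratio, flagged)
```

A single ratio like this is a starting point for questions, not a verdict. A real audit would also look at error rates, sample sizes, and how the groups were defined in the first place.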

Public engagement matters too. When communities understand how predictive policing works, they can demand transparency. When patients learn AI helped diagnose their cancer earlier, they become advocates for responsible innovation rather than Luddites or cheerleaders.

The goal isn’t to pick sides in a false dichotomy; it’s to widen the lens. A just AI future guards against rogue systems and rogue uses alike. The question isn’t whether to fear the far-off or the familiar—it’s how to act on both, today.