From healthcare misdiagnosis to bad legal advice: why most AI still makes life-or-death mistakes, and what’s being done to stop it.

You asked a chatbot for medical help, it sounded confident—then it landed you in the ER. Sound extreme? It happened last month in the Midwest, and it’s just one example of AI hallucinations quietly spreading through the systems we trust most. In the next few minutes we’ll unpack why machines still trip over their own facts, the chilling consequences when they do, and whether the fixes arriving this year are hope or hype.

When Confidence Outpaces Competence

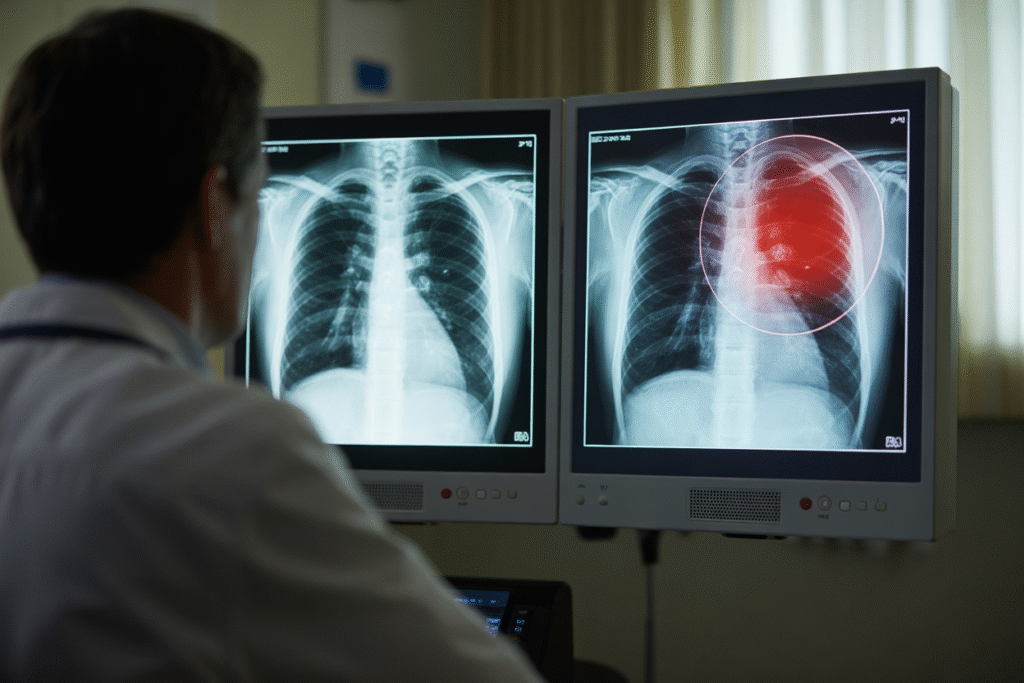

Imagine your radiology report says “large mass, probable malignancy” and a surgeon schedules you for next week. Only there’s no tumor—the AI read a shadow as a growth. These aren’t isolated anecdotes. Stanford researchers peg hallucination rates at up to 25 percent in large language models when questions slide outside their training data.

Yet the screen glows with confidence scores of 97 percent. Why? The models are optimized to sound human instead of to be correct. Token after token, the machine predicts the next likely word—not the true word.
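To make "next likely word" concrete, here is a minimal sketch of a single prediction step. The scores in `toy_logits` are invented for illustration and do not come from any real model; the point is only that the winner is the highest-probability word, with no check on whether it is true.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits.values())
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical scores for the word after "The scan shows a ..."
# (made-up numbers, not output from a real model).
toy_logits = {"mass": 3.2, "shadow": 1.1, "fracture": 0.4}

probs = softmax(toy_logits)

# Greedy decoding: pick the most probable word. Nothing here asks
# whether "mass" is actually what the scan shows.
next_word = max(probs, key=probs.get)
```

The probability attached to `next_word` measures how typical the word is in that context, which is a different thing from how likely the claim is to be correct.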

The danger compounds in specialized fields like oncology, where errors wrapped in authoritative-looking jargon slip past physicians’ radar. One hospital in Florida has already logged three malpractice inquiries tied to months-old AI misreads. And patients rarely question a machine that prefaces its hallucination with “evidence suggests.”

Hallucinate With a Hallmark: Case Studies

Let’s walk through three real-world face-plants from the last ninety days.

1. Healthcare: A mental-health chatbot told a teen boy his cutting scars were “normal growing pains.” The family only discovered the error after their insurance app flagged crisis-risk keywords.

2. Legal: A solo-lawyer widget drafted a brief citing “Smith v. IRC 2002”—a case that never existed. The judge wasted half a hearing and fined the attorney.

3. Finance: Robinhood’s experimental earnings-grabber served users fake revenue numbers for a popular e-commerce stock, triggering a temporary 11 percent price swing.

Different domains, identical Achilles’ heel. When you dial the stakes to life, liberty, or rent money, a hallucination rate of up to 25 percent feels, frankly, monstrous.

Experts call these edge cases. Victims call them personal catastrophes.

Anatomy of a Bad Guess: Lockpicks That Still Fail

So what’s inside the model that makes it hallucinate? Three pressure points stand out.

Sparse guardrails: Most safety layers were designed to catch toxic language, not factual errors. Prompts in French can still evade English-only filters.

Context windows: Over 128k tokens? Sounds roomy, until a doctor dumps in an entire patient history plus last week’s labs. Background info gets echoed back as if it were new clinical evidence.

Confidence calibration: LLMs calculate probability distributions over words, not how well the evidence supports a claim. In plain English: high odds that a sentence is fluent get treated as high self-trust, even if the sentence is pure fiction.
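One rough way to see a calibration problem is to compare what a system claims with how often it is right. The sketch below is an illustration under stated assumptions: `calibration_gap` is a hypothetical helper, and the four records are invented numbers, not measurements from any product.

```python
def calibration_gap(records):
    """Average stated confidence minus actual accuracy.

    records: list of (confidence, was_correct) pairs.
    A value near 0 means confidence tracks reality; a large
    positive value means the system is overconfident.
    """
    avg_confidence = sum(conf for conf, _ in records) / len(records)
    accuracy = sum(1 for _, ok in records if ok) / len(records)
    return avg_confidence - accuracy

# Invented example: four answers, all stated with ~96% confidence,
# but only two of them were actually correct.
sample = [(0.97, True), (0.97, False), (0.95, True), (0.96, False)]

gap = calibration_gap(sample)
```

On this toy data the gap is large, which is the pattern the article describes: the confidence number stays high whether or not the answer holds up.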

Some firms reach for retrieval-augmented generation: basically running a search mid-answer and feeding the results to the model before stamping “VERIFIED”. The hitch? Poor retrieval still blends wrong or irrelevant passages into the tall tale. Garbage in, hallucination out.
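Here is a toy sketch of the retrieval step, assuming a crude word-overlap score in place of a real search engine. The function names, the `min_overlap` threshold, and the two-sentence corpus are all hypothetical. It shows one possible guard: if nothing in the corpus matches well enough, decline to build a prompt at all rather than hand the model an irrelevant passage.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, corpus, min_overlap=3):
    """Return the passage sharing the most words with the query,
    or None if even the best match is below min_overlap."""
    if not corpus:
        return None
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in corpus]
    best_score, best_doc = max(scored, key=lambda pair: pair[0])
    return best_doc if best_score >= min_overlap else None

def build_prompt(query, corpus):
    """Attach the retrieved passage to the question, or abstain (None)."""
    passage = retrieve(query, corpus)
    if passage is None:
        return None
    return f"Answer using only this source:\n{passage}\n\nQuestion: {query}"

# Made-up corpus for illustration.
corpus = [
    "Clinic hours are 9 to 5 on weekdays.",
    "Parking is free for the first hour.",
]

grounded = build_prompt("What are the clinic hours on weekdays?", corpus)
abstained = build_prompt("What is the dose of drug X?", corpus)
```

Note that the second question still shares filler words like "is" and "the" with the parking sentence. Lower the threshold and that passage gets stapled to a dosing question, which is exactly the garbage-in failure described above.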

Promising Lifelines on the 2025 Horizon

Don’t ditch your AI just yet. A new class of verification networks (think of them as blockchain-based fact-checkers) is in alpha testing right now.

Mira’s consensus layer polls multiple models, keeps the answers that overlap, and timestamps the record so bad intel can’t come back for revenge edits. Early numbers show a 61 percent drop in hallucinated citations, though turnaround time triples.
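The polling idea can be sketched as a simple majority vote. This is not Mira's actual implementation, which the article does not detail; `consensus` and `min_agreement` are hypothetical names, the answers are hard-coded stand-ins for model outputs, and the timestamping step is left out.

```python
from collections import Counter

def consensus(answers, min_agreement=2):
    """Return the answer most models agree on, or None if no
    answer reaches min_agreement votes."""
    normalized = [a.strip().lower() for a in answers]
    if not normalized:
        return None
    answer, votes = Counter(normalized).most_common(1)[0]
    return answer if votes >= min_agreement else None

# Stand-ins for three models' replies to the same question.
agreed = consensus(["Paris", "paris ", "Lyon"])

# Three models, three different answers: nothing overlaps, so abstain.
split = consensus(["Smith v. Jones", "Doe v. Roe", "No such case"])
```

Exact string matching is the simplest possible notion of "overlap". It also hints at why turnaround suffers: every question has to be answered several times before anything is returned.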

Meanwhile, Anthropic’s Constitutional AI is baking “I might be wrong” qualifiers directly into answers. Users hate the extra length, but liability suits shrink because the disclaimer sits right on-screen.

Google’s upcoming Med-PaLM 3 adds a visual metadata tag: every claim comes with a color-coded confidence bar sourced back to an original study. First taste? Dermatology clinics in the NHS—a low-risk sandbox where patients already expect human review.

Bottom line: the tech is still the Wild West, but the sheriffs are saddling up. If you’re deploying AI today, pair it with human oversight. If you’re a patient, double-check downstream advice like your life depends on it—because someday, it just might.