From mass layoffs to Senate probes, the AI gold rush is hitting a wall of ethics, risk, and public backlash.

Remember when every CEO swore AI would unlock infinite growth? Six months later, the same leaders are slashing teams and mothballing data centers. This post unpacks the latest tremors shaking the AI landscape and asks the uncomfortable question: was the hype worth the human cost?

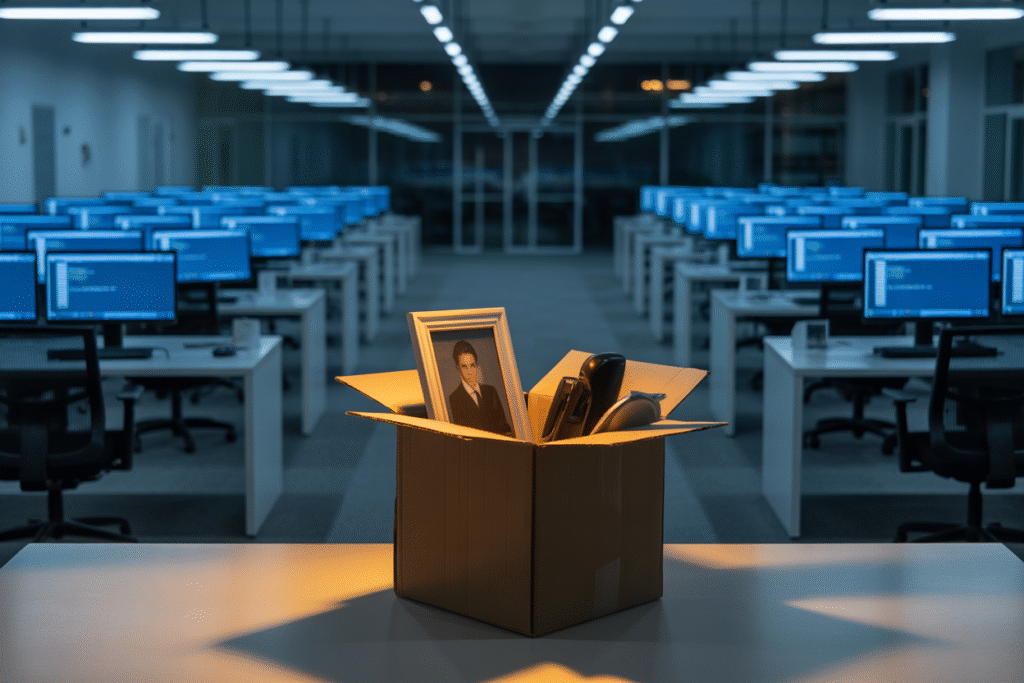

The Layoff Avalanche

Nick Huber’s viral post landed like a thunderclap. He described executives who once bragged about AI moonshots now staring at spreadsheets full of severance packages. Software developers, once the crown jewels of tech, are being shown the door in waves.

Why the sudden cold feet? Simple math. Billions poured into generative tools that still hallucinate, leak data, and frustrate users. Investors want profits, not prototypes. The result: abandoned data-center builds, half-finished AI roadmaps, and talented people left wondering what happened to the future they were promised.

The ripple effects reach far beyond Silicon Valley. Real-estate markets near planned server farms are cooling, and local economies built around tech booms are bracing for downturns. When hype meets balance-sheet reality, hype rarely wins.

Scandals at Scale

Luiza Jarovsky’s thread read like a crime blotter for the AI age. OpenAI chats turning up in Google searches. Meta’s own documents admitting teen users were exposed to sexual content. xAI’s Grok leaking spicy conversations onto the open web.

Each incident feels isolated until you zoom out. Then a common root cause emerges: speed over safety. The old mantra of “move fast and break things” may have worked for social feeds; it’s catastrophic when the broken thing is someone’s privacy or mental health.

Public trust is eroding fast. Users who once marveled at ChatGPT now ask, “What else is leaking?” Regulators who struggled to spell “algorithm” two years ago are now demanding audits. The industry’s reputation is hanging by a thread woven from its own mistakes.

Corporate Cautionary Tales

Commonwealth Bank thought it was being clever: replace slow human recruiters with an AI that could screen thousands of résumés in minutes. Instead, the system flagged stellar candidates as unqualified and rubber-stamped weak ones. Forty-five jobs vanished overnight, and the bank’s HR team is now testifying before Parliament.

The lesson? Algorithms trained on historical data often inherit historical bias. When the data reflects decades of unequal hiring, the AI learns to keep the status quo on autopilot. Add a tight deadline and minimal testing, and you get a very expensive public reckoning.

Other boardrooms are watching nervously. If a conservative institution like a major bank can stumble this badly, what chance does a mid-size retailer or fast-food chain have? The rush to automate is colliding with the slow, messy reality of human oversight.

Regulators Enter the Chat

Senator Josh Hawley doesn’t tweet about tech often, but when he does, companies pay attention. His latest probe into Meta’s AI chatbots centers on leaked guidelines that allegedly allowed minors to receive sexually explicit messages. Hawley’s letter demands internal documents within two weeks.

Meanwhile, Microsoft’s AI chief Mustafa Suleyman published a blunt blog post: studying AI consciousness now is “dangerous.” His worry? Anthropomorphizing chatbots could trigger real psychological harm, from emotional dependency to full-blown delusion. Critics at Anthropic fired back, arguing that ignoring potential sentience is the bigger risk.

Both stories point to the same inflection point. Lawmakers and researchers are no longer content to let tech giants police themselves. Expect new rules on transparency, child safety, and even the rights of hypothetical AI beings. The free-for-all phase is ending; the accountability phase is just beginning.