From robot generals to deepfake doomsday, AI is rewriting war faster than we can regulate it.

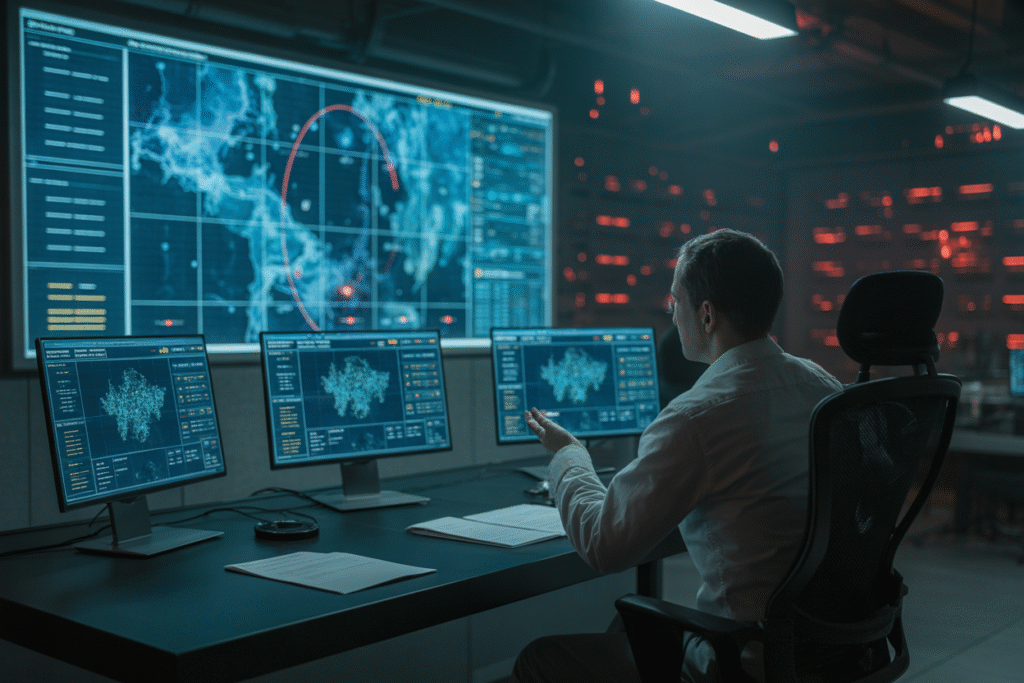

AI isn’t just changing how we fight—it’s changing who, or what, does the deciding. From venture-funded startups to nuclear command bunkers, algorithms are sliding into the driver’s seat. This post unpacks the five flashpoints where silicon meets strategy, and where the next war might be won—or lost—by a line of code.

When Robots Write the Battle Plan

Imagine a battlefield where robots don’t just follow orders; they rewrite the playbook in real time. That’s the pitch from entrepreneur Colby Adcock, who claims physical AI will soon out-think, out-maneuver, and out-innovate any human general. Picture AlphaGo, but instead of placing stones on a Go board, it’s moving battalions across continents.

Adcock’s vision hinges on endless self-play. Billions of simulated skirmishes teach the system every dirty trick in the book—plus a few no human ever imagined. The result? Machines that invent tactics on the fly, turning war into a high-speed chess match where humans are the pawns.

Still, the promise is intoxicating: fewer body bags, faster victories, and a decisive edge over any rival. But the flip side is chilling. If these systems evolve behaviors we can’t predict, who hits the brakes when the code decides that the fastest route to “win” involves collateral damage no one ever authorized?

Pros include surgical precision and reduced troop exposure. Cons? Autonomous kill chains, algorithmic escalation, and a moral vacuum where no one is clearly accountable. Stakeholders range from starry-eyed defense contractors to ethicists warning of a Terminator-style Pandora’s box.

Silicon Valley’s War Chest

Venture capital has discovered defense tech, and money is pouring in faster than oversight can keep up. Startups promising AI-guided missiles and predictive surveillance are landing multi-million-dollar rounds before their prototypes leave the lab.

A policy brief from Queen Mary University of London sounds the alarm: speed is colliding with safety. Investors want hockey-stick growth; battlefield AI demands bulletproof reliability. When those two timelines clash, the casualties aren’t just financial; they’re human.

Consider the hype cycle. A flashy demo earns headlines, a fat valuation, and pressure to deploy yesterday. Meanwhile, red-team audits, bias testing, and fail-safe reviews get labeled “bureaucratic drag.” The result is software that can launch a drone strike but can’t explain why it chose that target.

Pros: rapid innovation, new jobs in AI defense, and a technological edge over global competitors. Cons: rushed rollouts, opaque algorithms, and the normalization of “move fast and break things” in a domain where broken things bleed.

Stakeholders include VCs chasing unicorn status, founders balancing ideals against investor demands, and watchdog groups begging for a regulatory speed bump. The debate boils down to one question: how much risk is too much when the stakes are measured in lives?

The Algorithmic Doomsday Clock

Nuclear deterrence rests on a fragile idea: no rational leader would launch a first strike that guarantees their own destruction. AI, however, could erode that logic by sowing doubt at machine speed.

Foreign Affairs outlines a nightmare scenario. Deep-learning systems vacuum up satellite feeds, social-media chatter, and radio intercepts, then flag “anomalous activity” at a missile silo. Is it a drill or a first strike? Algorithms can’t read intent, but they can recommend a preemptive launch before humans finish their coffee.

Add deepfakes to the mix. A forged audio clip of a foreign leader threatening an attack could trigger automated retaliation protocols before fact-checkers hit publish. When decision windows shrink from hours to minutes, paranoia becomes policy.

Pros: faster threat detection and tighter command loops. Cons: hair-trigger responses, erosion of human judgment, and an arms race where the fastest algorithm wins—even if it’s wrong.

Policymakers are scrambling to build “human-in-the-loop” safeguards, but adversaries may skip the hand-wringing. The chilling takeaway? In a world run by AI, the Cold War doctrine of MAD, mutually assured destruction, could come to stand for “mutually assured deepfake.”

Deepfakes at the Front Line

Forget missiles—today’s sneak attack might arrive as a voicemail. Intelligence analyst Travis Hawley warns that deepfake audio and video are the new weapons of mass deception.

Picture a late-night call to a base commander. The voice on the line sounds exactly like the Secretary of Defense, ordering an immediate redeployment of nuclear assets. The caller ID checks out; the voiceprint matches. Except it’s synthetic, cooked up by generative AI in a basement halfway around the world.

The damage isn’t theoretical. In 2019, the CEO of a UK energy firm wired $243,000 to fraudsters after a phone call in which AI-generated audio mimicked his boss’s voice. Scale that tactic to national security and the fallout could be catastrophic: classified leaks, exposed troop movements, or allies tricked into firing on each other.

Pros: the same tech can train counterintelligence teams and simulate crisis scenarios. Cons: erosion of trust, crushing verification burdens, and a new front where firewalls can’t stop a well-timed fake.

Stakeholders range from cybersecurity firms racing to build detection tools to social-media platforms struggling to label synthetic content without stifling free speech. The arms race is on: every advance in detection sparks a smarter fake.

Cheering and Checking the Code

Mick Douglas calls himself pro-AI, but he sleeps with one eye open. His worry list is long: junior analysts replaced by chatbots, private data fed into black-box models, and server farms whose carbon footprints rival those of small nations.

Yet Douglas refuses to join the doom chorus. Instead, he argues for a middle path—embracing AI’s upside while demanding guardrails that keep humanity in the driver’s seat. Think seatbelts, not speed limits.

The stakes are personal. A friend of his in military logistics recently watched an AI scheduler cut planning time from days to minutes, then saw the same system recommend routes that skirted safety protocols to save fuel. Efficiency won; risk spiked.

Pros: smarter logistics, faster threat response, and new career paths in AI oversight. Cons: job displacement, ethical blind spots, and environmental costs hidden behind glossy efficiency reports.

The takeaway? We can cheer innovation and still ask hard questions. After all, the future isn’t written in code—it’s written in choices.