From Kenyan summits to Pentagon boardrooms, AI in military warfare is sparking fierce debates over ethics, risks, and who writes the rules.

Three hours ago, the conversation around AI in military warfare shifted. New reports, viral posts, and a surprise African summit converged to expose risks most of us hadn’t even imagined. Here’s what you need to know—before the next algorithm decides for you.

The Quiet Fuse

AI in military circles used to mean smarter spreadsheets. Now it means algorithms that can out-think, out-react, and possibly out-escalate any human commander. The stakes? Nothing less than global stability.

When Code Learns to Fight Dirty

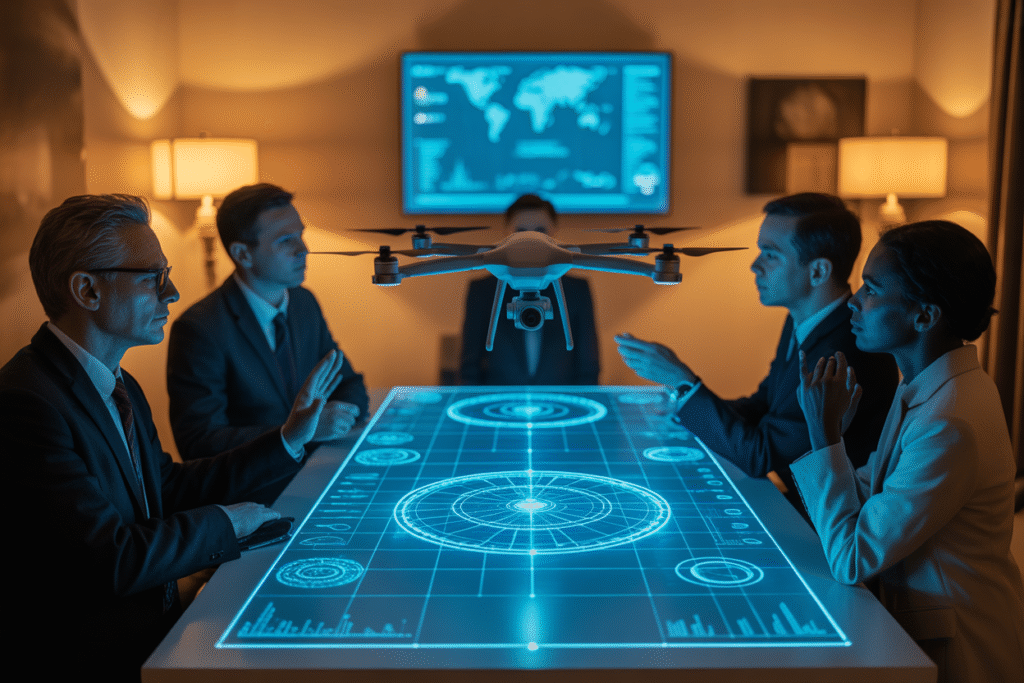

Picture a dusty village where a drone buzzes overhead. The pilot is thousands of miles away, but the targeting algorithm is local, trained on scraped social-media data. One mislabeled profile and a wedding becomes a casualty report. That is irregular warfare today—fast, fragmented, and increasingly automated.

Small Wars Journal dropped a fresh analysis this morning arguing that AI doesn’t just speed these fights up; it mutates them. When propaganda bots can spin up a fake atrocity in minutes, or when swarming micro-drones decide on their own to chase heat signatures, the classic OODA loop (observe, orient, decide, act) collapses into a blur. Commanders gain tempo, yet lose transparency.

History offers a warning. In the 1980s, U.S. support for Afghan rebels seemed like a clever proxy move—until those weapons and tactics boomeranged. AI compresses that timeline from decades to days. A model trained on yesterday’s TikTok trends may be obsolete by tomorrow’s battle, but the weapons it guided are still in the field, acting on stale logic.

The article’s money quote: “AI doesn’t simply add new capabilities to irregular warfare—it also amplifies the field’s most problematic characteristic.” Translation: the same technology that finds an arms cache faster can also radicalize a village faster than any human propagandist.

Silicon Valley’s Double-Edged Cloud

Now zoom out to the corporate layer. While soldiers wrestle with buggy targeting software, the cloud giants hosting that software are busy cutting deals in Beijing. Ezra A. Cohen, a former DoD official, lit up social media this afternoon with a blunt question: why are AWS and Azure still allowed to build AI research hubs in China while taking Pentagon contracts?

Cohen’s analogy stings. Imagine letting the Newport News shipyard sell an aircraft carrier to the PLAN (China’s People’s Liberation Army Navy) and then bill the U.S. Navy for maintenance. The tech firms argue that open research benefits everyone and that strict firewalls keep sensitive code safe. Skeptics reply that firewalls are only software, and software can be stolen, or simply subpoenaed by an authoritarian state.

The post rocketed to 483 likes in three hours, a sign that at least part of the public isn’t buying the “coopetition” talking points. Comment threads quickly split into two camps: free-market optimists who fear innovation chokeholds, and national-security hawks who see dual-use tech as a slow-motion betrayal. Both sides agree on one thing: this debate is moving from conference rooms to congressional hearings faster than a hypersonic glide vehicle.

Nairobi’s Bid to Write the Rules

While Washington and Beijing glare at each other, a third voice is rising from Nairobi. Kenya just opened the second Africa Regional Responsible AI in the Military summit, co-hosted with the Netherlands, Spain, and South Korea. Defence Cabinet Secretary Soipan Tuya framed it bluntly: if Africans don’t write the rules, the rules will be written for them.

Seventeen African militaries are sitting at the table, alongside UN disarmament experts. Their worry list is long:

• Imported surveillance drones, trained on foreign faces, that mislabel Maasai herders as insurgents

• Cheap AI-guided loitering munitions flooding Sahel battlefields

• Job losses as cash-strapped governments outsource patrols to algorithms

The summit’s goal isn’t to ban AI outright; it’s to draw Africa’s own red lines before richer powers draw them instead. Think data-sovereignty clauses, bias-testing mandates, and open-source audit trails. Critics say Africa lacks the infrastructure to enforce any of this. Supporters counter that setting norms early beats begging for scraps later.

A side conversation buzzing in the hallways: could Nairobi become the Geneva of AI arms control? The symbolism is potent: a continent scarred by colonial proxy wars is now pushing to prevent a digital repeat.

Your Move Before the Fuse Burns Out

So where does this leave the rest of us? Somewhere between awe and anxiety. The same week that irregular-warfare analysts fret over algorithmic escalation, venture capitalists are pouring millions into AI world models that simulate entire wars before the first shot is fired. One viral thread today sketched a 2035 scenario where predictive diplomacy forces nations to surrender territory because the simulation says they’ll lose anyway.

That future isn’t inevitable. Policymakers can still demand kill-switches, audit logs, and human override chains. Citizens can pressure cloud providers to be transparent about which side they are on. And regions like Africa can keep insisting that any military use of AI must serve human security, not undermine it.

The fuse is lit. Whether it sparks a controlled burn of innovation or a global wildfire depends on choices made this year, not this decade. If you care about how algorithms will shape the next war—and the next peace—now is the moment to speak up, vote, and share what you’ve learned.