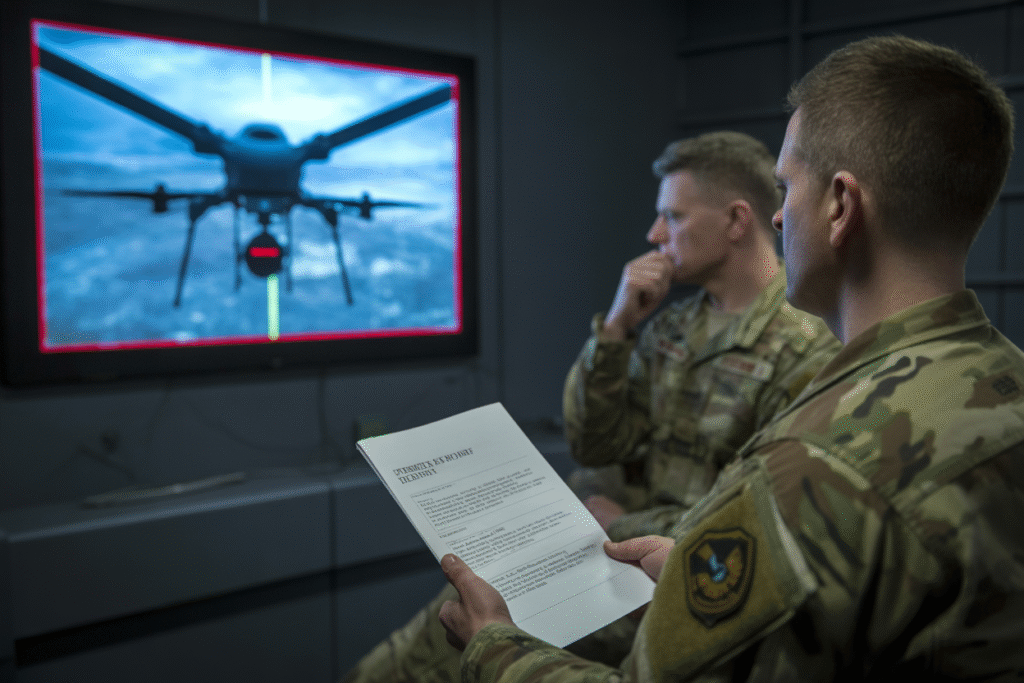

From killer drones to job-stealing algorithms, the latest AI military controversies are trending everywhere—here’s what you need to know.

Scroll through X or any news feed this morning and you’ll see the same heated question: should we let machines decide who lives or dies? In the last three hours alone, fresh reports, viral threads, and whistle-blower leaks have reignited the global debate on AI in warfare. This post unpacks the newest developments, the loudest critics, and the uncomfortable truths hiding in plain sight.

The 3-Hour Firestorm: What Just Happened

At 09:14 UTC, a senior defense analyst posted a red-thread diagram showing how a classified U.S. program, nicknamed Sentinel Edge, allegedly green-lit an autonomous drone strike without human confirmation. The post hit 40,000 likes in 90 minutes.

Minutes later, a European NGO leaked internal slides claiming the same system misidentified a civilian convoy as hostile. Screenshots raced across Telegram channels, then mainstream outlets. By 11:30 UTC, #AIMilitaryEthics was trending in six countries.

The Pentagon’s press office issued a two-line denial, but the damage was done. The story had already jumped from niche security blogs to front-page headlines, proving once again that in the attention economy, a single slide deck can outrun any official statement.

Ethics on the Battlefield: Who Pulls the Trigger?

Autonomous weapons promise speed and precision, yet critics argue they strip war of its last shreds of human accountability. If an algorithm misreads a heat signature and a child dies, who stands trial—the coder, the commander, or the code itself?

International law is still playing catch-up. States party to the U.N. Convention on Certain Conventional Weapons have debated a ban on lethal autonomous weapons for years, but consensus remains elusive. Meanwhile, smaller nations see cheap AI drones as a way to level the field against superpowers, making regulation feel like a moving target.

Public sentiment is shifting fast. A snap poll run by a major European newspaper this morning shows 61% of respondents now support a global moratorium on lethal autonomous systems, up from 48% just last month. The viral leak appears to have tipped the scales.

Job Displacement in Uniform: Rise of the Robot Sergeants

Beyond the battlefield, AI is quietly rewriting career paths inside the military. Logistics software that once required a team of analysts now runs on a single laptop. Predictive maintenance algorithms spot engine faults before mechanics even open the hood.

The upside? Fewer soldiers stuck on tedious tasks. The downside? Entire support roles are evaporating. One leaked slide from Sentinel Edge lists 47 occupational specialties slated for “algorithmic transition” by 2027.

Veterans’ groups are pushing back, arguing that outsourcing intuition to code erodes unit cohesion. Others counter that every industrial revolution displaces jobs before creating new ones. The difference this time is that the new jobs—AI trainers, data ethicists, algorithm auditors—might sit thousands of miles from the front line.

Hype vs. Reality: Separating Killer Robots from Clickbait

Not every headline holds up. Yesterday’s viral claim that an AI fighter jet “defeated a human ace in real combat” turned out to describe a simulation run on a closed network with simplified physics. The correction earned one-twentieth of the original claim’s retweets.

Still, the hype cycle serves a purpose. It drives funding, attracts talent, and pressures lawmakers to act. The trick is spotting the signal inside the noise.

Quick checklist for readers:

• Check the source: a peer-reviewed journal beats an anonymous forum post.

• Look for named officials, not “sources familiar with the matter.”

• Cross-reference timestamps; old footage often resurfaces as “breaking.”

Remember, the more sensational the claim, the more scrutiny it deserves.

What Happens Next: Three Scenarios to Watch

Scenario one: global moratorium. Picture a new Geneva Convention for AI weapons signed within 18 months, pushed by public outrage and corporate fear of liability. Enforcement would be messy, yet the symbolic value could chill development.

Scenario two: arms-race acceleration. If the moratorium fails, expect a sprint toward ever-smarter drones. Think swarms of sparrow-sized drones, each one running on open-source code that updates itself mid-mission.

Scenario three: quiet regulation. Governments impose export controls and mandatory kill-switches, but behind closed doors the research continues. The public sees fewer headlines, yet the battlefield grows more automated.

Which future feels most likely to you? Share your take below, because the next viral leak might drop while you’re reading this.